Ming-Yu Liu and Arun Mallya were on a video call when one of them started to break up, then freeze.

It’s an irksome reality of life in the pandemic that most of us have shared. But unlike most of us, Liu and Mallya could do something about it.

They are AI researchers at NVIDIA and specialists in computer vision. Working with colleague Ting-Chun Wang, they realized they could use a neural network in place of a video codec, the software typically used to compress and decompress video for transmission over the net.

Their work enables a video call with one-tenth the network bandwidth users typically need. It promises to reduce bandwidth consumption by orders of magnitude in the future.

“We want to provide a better experience for video communications with AI so even people who only have access to extremely low bandwidth can still upgrade from voice to video calls,” said Mallya.

Better Connections Thanks to GANs

The technique works even when callers are wearing a hat, glasses, headphones or a mask. And just for fun, the researchers spiced up their demo with a couple of bells and whistles so users can change their hair styles or clothes digitally or create an avatar.

A more serious feature in the works (shown at top) uses the neural network to align the position of users’ faces for a more natural experience. Callers watch their video feeds, but they appear to be looking directly at their cameras, enhancing the feeling of a face-to-face connection.

“With computer vision techniques, we can locate a person’s head over a wide range of angles, and we think this will help people have more natural conversations,” said Wang.

Say hello to the latest way AI is making virtual life more real.

How AI-Assisted Video Calls Work

The mechanism behind AI-assisted video calls is simple.

A sender first transmits a reference image of the caller, much as today’s systems do when they send a compressed video stream. Then, rather than sending a fat stream of pixel-packed images, it sends data on the locations of a few key points around the user’s eyes, nose and mouth.

A generative adversarial network on the receiver’s side uses the initial image and the facial key points to reconstruct subsequent images on a local GPU. As a result, much less data is sent over the network.
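
To get a feel for why key points are so much lighter than pixels, here is a minimal Python sketch of what a per-frame key-point message could look like. It is an illustration only, not the researchers' actual format: the wire layout (a one-byte count followed by 16-bit x, y pairs), the helper names, and the figures of 10 key points and 30 frames per second are all assumptions made for the example.

```python
import struct
from typing import List, Tuple

# Hypothetical layout: each key point is an (x, y) pair normalized to [0, 1].
Keypoint = Tuple[float, float]


def pack_keypoints(points: List[Keypoint]) -> bytes:
    """Serialize key points as a 1-byte count followed by 16-bit x, y pairs."""
    payload = struct.pack("<B", len(points))
    for x, y in points:
        # Quantize each normalized coordinate to an unsigned 16-bit integer.
        payload += struct.pack("<HH", round(x * 65535), round(y * 65535))
    return payload


def unpack_keypoints(payload: bytes) -> List[Keypoint]:
    """Recover the (slightly quantized) key points on the receiver's side."""
    (count,) = struct.unpack_from("<B", payload, 0)
    points = []
    for i in range(count):
        qx, qy = struct.unpack_from("<HH", payload, 1 + 4 * i)
        points.append((qx / 65535, qy / 65535))
    return points


def bits_per_second(payload_bytes: int, fps: int) -> int:
    """Bandwidth needed to send one payload of this size for every frame."""
    return payload_bytes * 8 * fps


if __name__ == "__main__":
    # Assumed example: 10 key points per frame at 30 frames per second.
    frame_points = [(0.1 * i, 0.5) for i in range(10)]
    message = pack_keypoints(frame_points)
    print(len(message), "bytes per frame")
    print(bits_per_second(len(message), fps=30), "bits per second")
```

Under these assumed numbers, each frame costs 41 bytes, or roughly 10 kilobits per second, before any one-time cost for the reference image. The receiver would hand the unpacked key points, together with that reference image, to the generative network, a step this sketch leaves out.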

Liu’s work in GANs hit the spotlight last year with GauGAN, an AI tool that turns anyone’s doodles into photorealistic works of art. GauGAN has already been used to create more than a million images and is available at the AI Playground.

“The pandemic motivated us because everyone is doing video conferencing now, so we explored how we can ease the bandwidth bottlenecks so providers can serve more people at the same time,” said Liu.

GPUs Bust Bandwidth Bottlenecks

The approach is part of an industry trend of shifting network bottlenecks into computational tasks that can be more easily tackled with local or cloud resources.

“These days lots of companies want to turn bandwidth problems into compute problems because it’s often hard to add more bandwidth and easier to add more compute,” said Andrew Page, a director of advanced products in NVIDIA’s media group.

AI Instruments Tune Video Services

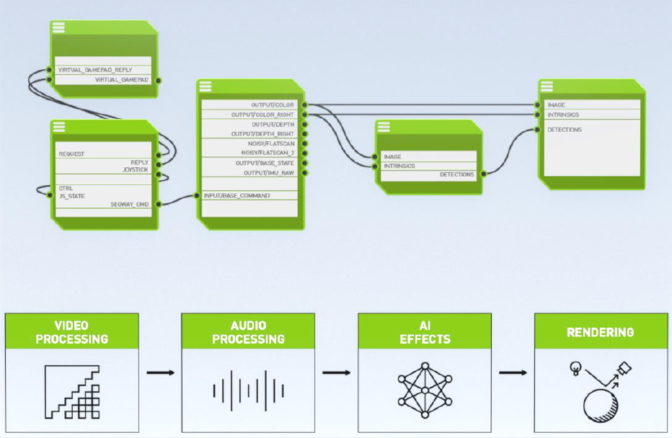

GAN video compression is one of several capabilities coming to NVIDIA Maxine, a cloud-AI video-streaming platform to enhance video conferencing and calls. It packs audio, video and conversational AI features in a single toolkit that supports a broad range of devices.

Announced this week at GTC, Maxine lets service providers deliver video at super resolution with real-time translation, background noise removal and context-aware closed captioning. Users can enjoy features such as face alignment, support for virtual assistants and realistic animation of avatars.

“Video conferencing is going through a renaissance,” said Page. “Through the pandemic, we’ve all lived through its warts, but video is here to stay now as a part of our lives going forward because we are visual creatures.”

Maxine harnesses the power of NVIDIA GPUs with Tensor Cores running software such as NVIDIA Riva, an SDK for conversational AI that delivers a suite of speech and text capabilities. Together, they deliver AI capabilities that are useful today and serve as building blocks for tomorrow’s video products and services.

Learn more about NVIDIA Research. And watch NVIDIA CEO Jensen Huang recap all the news at GTC in the video below.

It’s not too late to get access to hundreds of live and on-demand talks at GTC. Register now through Oct. 9 using promo code CMB4KN to get 20 percent off.