Generative AI is taking root at national and corporate labs, accelerating high-performance computing for business and science.

Researchers at Sandia National Laboratories aim to automatically generate code in Kokkos, a C++ parallel programming model designed for use across many of the world’s largest supercomputers.

It’s an ambitious effort. The specialized programming model, developed by researchers from several national labs, handles the nuances of running tasks across tens of thousands of processors.

Sandia is employing retrieval-augmented generation (RAG) to create and link a Kokkos database with AI models. As researchers experiment with different RAG approaches, initial tests show promising results.
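Sandia's actual pipeline isn't described here. As a rough illustration of the retrieve-then-generate pattern behind RAG, the Python sketch below ranks a small, hypothetical set of Kokkos notes by bag-of-words cosine similarity and prepends the best match to a prompt. The corpus, the function names (`retrieve`, `build_prompt`) and the word-count scoring are all assumptions for illustration. Production retrievers typically use learned embedding models instead, and the final call to a language model is omitted.

```python
import math
import re
from collections import Counter

# Hypothetical mini-corpus standing in for a Kokkos documentation/code database.
KOKKOS_DOCS = [
    "Kokkos::parallel_for runs a lambda or functor over an index range in parallel.",
    "Kokkos::parallel_reduce combines per-iteration values into a single result, such as a sum.",
    "Kokkos::View is a multidimensional array whose memory layout depends on the execution space.",
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words; keeps identifiers like parallel_reduce intact."""
    return Counter(re.findall(r"[a-z_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query (the 'retrieval' step)."""
    q = tokenize(query)
    return sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Augment the user's request with retrieved context before sending it to a model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Use this Kokkos context:\n{context}\n\nTask: {query}"


if __name__ == "__main__":
    print(build_prompt("Write a Kokkos parallel_reduce that sums an array", KOKKOS_DOCS))
```

Swapping the toy scorer for an embedding model changes only `tokenize` and `cosine`; the retrieve-then-augment structure stays the same.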

Cloud-based services like NeMo Retriever are among the RAG options the scientists will evaluate.

“NVIDIA provides a rich set of tools to help us significantly accelerate the work of our HPC software developers,” said Robert Hoekstra, a senior manager of extreme scale computing at Sandia.

Building copilots via model tuning and RAG is just a start. Researchers eventually aim to employ foundation models trained with scientific data from fields such as climate, biology and materials science.

Getting Ahead of the Storm

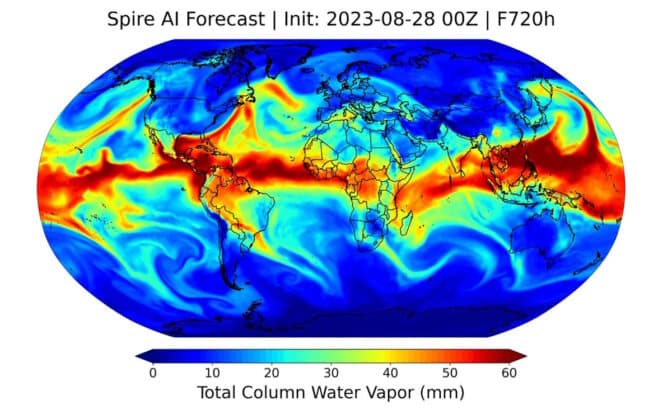

Researchers and companies in weather forecasting are embracing CorrDiff, a generative AI model that’s part of NVIDIA Earth-2, a set of services and software for weather and climate research.

CorrDiff can downscale the 25-kilometer resolution of traditional atmospheric models to 2 kilometers, and it can increase by more than 100x the number of forecasts that can be combined to improve confidence in predictions.
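To put those numbers in perspective, here is a small back-of-the-envelope sketch in Python. It is plain arithmetic, not CorrDiff's method: going from 25 km to 2 km spacing is a 12.5x refinement along each horizontal axis, or roughly 156x more grid cells over the same area. The ensemble values are hypothetical temperatures at one grid point, used only to show how combining many forecasts yields a mean and a spread.

```python
import statistics


def refinement_factors(coarse_km: float, fine_km: float) -> tuple[float, float]:
    """Return (per-axis factor, grid-cell factor) for moving from coarse to fine spacing."""
    per_axis = coarse_km / fine_km
    # A 2D horizontal grid gains per_axis cells in each of its two directions.
    return per_axis, per_axis ** 2


def ensemble_summary(members: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of an ensemble of forecasts for one grid point."""
    return statistics.mean(members), statistics.stdev(members)


per_axis, cells = refinement_factors(25.0, 2.0)
print(per_axis, cells)  # 12.5 156.25

# Hypothetical ensemble of temperature forecasts (deg C) at a single location.
mean, spread = ensemble_summary([21.0, 22.5, 20.5, 23.0])
print(f"mean={mean:.2f} spread={spread:.2f}")
```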

“It’s a promising innovation … We plan to leverage such models in our global and regional AI forecasts for richer insights,” said Tom Gowan, machine learning and modeling lead for Spire, a company in Vienna, Va., that collects data from its own network of tiny satellites.

Generative AI enables faster, more accurate forecasts, he said in a recent interview.

“It really feels like a big jump in meteorology,” he added. “And by partnering with NVIDIA, we have access to the world’s best GPUs that are the most reliable, fastest and most efficient ones for both training and inference.”

Switzerland-based Meteomatics recently announced it also plans to use NVIDIA’s generative AI platform for its weather forecasting business.

“Our work with NVIDIA will help energy companies maximize their renewable energy operations and increase their profitability with quick and accurate insight into weather fluctuations,” said Martin Fengler, founder and CEO of Meteomatics.

Generating Genes to Improve Healthcare

At Argonne National Laboratory, scientists are using the technology to generate gene sequences that help them better understand the virus behind COVID-19. Their award-winning models, called GenSLMs, spawned simulations that closely resemble real-world variants of SARS-CoV-2.

“Understanding how different parts of the genome are co-evolving gives us clues about how the virus may develop new vulnerabilities or new forms of resistance,” Arvind Ramanathan, a lead researcher, said in a blog post.

GenSLMs were trained on more than 110 million genome sequences using supercomputers powered by NVIDIA A100 Tensor Core GPUs, including Argonne’s Polaris system, the U.S. Department of Energy’s Perlmutter and NVIDIA’s Selene.

Microsoft Proposes Novel Materials

Microsoft Research showed how generative AI can accelerate work in materials science.

Its MatterGen model generates novel, stable materials that exhibit desired properties, which users can specify across chemical, magnetic, electronic, mechanical and other categories.

“We believe MatterGen is an important step forward in AI for materials design,” the Microsoft Research team wrote of the model they trained on Azure AI infrastructure with NVIDIA A100 GPUs.

Companies such as Carbon3D are already finding opportunities, applying generative AI to materials science in commercial 3D printing operations.

It’s just the beginning of what researchers will be able to do for HPC and science with generative AI. The NVIDIA H200 Tensor Core GPUs available now and the upcoming NVIDIA Blackwell Architecture GPUs will take their work to new levels.

Learn more about tools like NVIDIA Modulus, a key component in the Earth-2 platform for building AI models that obey the laws of physics, and NVIDIA Megatron-Core, a NeMo library to tune and train large language models.