Intelligent vehicles require intelligent development.

That’s why NVIDIA has built a complete AI-powered portfolio — from data centers to in-vehicle computers — that enables software-defined autonomous vehicles. And this month during GTC Digital, we’re providing an inside look at how this development process works, plus how we’re approaching safer, more efficient transportation.

Autonomous vehicles must be able to operate in thousands of conditions around the world to be truly driverless. The key to reaching this level of capability is mountains of data.

To put that in perspective, a fleet of just 50 vehicles driving six hours a day generates about 1.6 petabytes of sensor data a day. If all that data were stored on standard 1GB flash drives, they’d cover more than 100 football fields. This data must then be curated and labeled to train the deep neural networks (DNNs) that will run in the car, performing a variety of dedicated functions, such as object detection and localization.
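
As a rough sanity check on those figures, the arithmetic can be sketched as follows. It assumes decimal units (1 PB = 10^15 bytes, 1 GB = 10^9 bytes) and that the data is spread evenly across the fleet. The variable names are illustrative only.

```python
# Back-of-the-envelope check of the fleet data figures quoted above.
# Assumption: decimal units (1 PB = 1e15 bytes, 1 TB = 1e12, 1 GB = 1e9).
PB = 10**15
TB = 10**12
GB = 10**9

fleet_size = 50                       # vehicles
hours_per_day = 6                     # driving hours per vehicle per day
fleet_bytes_per_day = 16 * PB // 10   # 1.6 PB, kept as an exact integer

# Total driving time across the fleet in one day.
vehicle_hours = fleet_size * hours_per_day

# Average sensor data produced by one vehicle in one hour of driving.
bytes_per_vehicle_hour = fleet_bytes_per_day / vehicle_hours

# Number of 1GB flash drives needed to hold one day of fleet data.
drives_needed = fleet_bytes_per_day // GB

print(f"{vehicle_hours} vehicle-hours per day")
print(f"{bytes_per_vehicle_hour / TB:.2f} TB per vehicle-hour")
print(f"{drives_needed:,} one-gigabyte flash drives per day")
```

Under these assumptions, each vehicle produces a little over 5 TB per hour of driving, and one day of fleet data would fill 1.6 million 1GB drives.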

The infrastructure to train and test this software must include high-performance supercomputers to handle these enormous data needs. To run efficiently, the system must be able to intelligently curate and organize this data. Finally, it must be traceable — making it easy to find and fix bugs in the process — and repeatable, going over the same scenario over and over again to ensure a DNN’s proficiency.

As part of the GTC Digital series, we present this complete development and training infrastructure as well as some of the DNNs it has produced, driving progress toward deploying the car of the future.

Born and Raised in the Data Center

While today’s vehicles are put together on the factory floor assembly line, autonomous vehicles are born in the data center. In a GTC Digital session, Clément Farabet, vice president of AI Infrastructure at NVIDIA, details this high-performance, end-to-end platform for autonomous vehicle development.

The NVIDIA internal AI infrastructure includes NVIDIA DGX servers that store and process the petabytes of driving data. For comprehensive training, developers must work with five to 10 billion frames to develop and then evaluate a DNN’s performance.

High-performance data center GPUs help speed up the time it takes to process this data. In addition, Farabet’s team optimizes development times using advanced learning methods such as active learning.

Rather than rely solely on humans to curate and label driving data for DNN training, active learning makes it possible for the DNN to choose the data it needs to learn from. A dedicated neural network goes through a pool of frames, flagging those in which it demonstrates uncertainty. The flagged frames are then labeled manually and used to train the DNN, ensuring that it’s learning from the exact data that’s new or confusing.
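
The selection step can be illustrated with a minimal sketch of uncertainty sampling. The article does not say how uncertainty is measured. Predictive entropy is used here as one common choice, and the function names, frame IDs and probabilities are all hypothetical.

```python
import math


def predictive_entropy(probs):
    """Shannon entropy (in nats) of one frame's class-probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def flag_uncertain_frames(pool, budget):
    """Return the IDs of the `budget` frames with the highest entropy.

    `pool` maps a frame ID to the model's predicted class probabilities.
    """
    ranked = sorted(
        pool,
        key=lambda frame_id: predictive_entropy(pool[frame_id]),
        reverse=True,
    )
    return ranked[:budget]


# Hypothetical model outputs for four frames over three classes.
pool = {
    "frame_001": [0.98, 0.01, 0.01],  # confident prediction
    "frame_002": [0.34, 0.33, 0.33],  # nearly uniform: very uncertain
    "frame_003": [0.70, 0.20, 0.10],
    "frame_004": [0.50, 0.45, 0.05],  # torn between two classes
}

# Frames selected here would be sent to human labelers, then added
# to the training set for the next round.
to_label = flag_uncertain_frames(pool, budget=2)
print(to_label)
```

With a labeling budget of two, the sketch flags the near-uniform frame and the frame split between two classes, while the confidently predicted frame is left out of the labeling queue.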

Once trained, these DNNs can then be tested and validated on the NVIDIA DRIVE Constellation simulation platform. The cloud-based solution enables millions of miles to be driven in virtual environments across a broad range of scenarios — from routine driving to rare or even dangerous situations — with greater efficiency, cost-effectiveness and safety than what is possible in the real world.

DRIVE Constellation’s high-fidelity simulation lets these DNNs be tested repeatedly, across a wide range of scenarios and conditions, before operating on public roads.

When combined with data center training, simulation allows developers to constantly improve upon their software in an automated, traceable and repeatable development process.

- Watch on-demand: NVIDIA’s AI Infrastructure for Self-Driving Cars

DNNs at the Edge

Once trained and validated, these DNNs can then operate in the car.

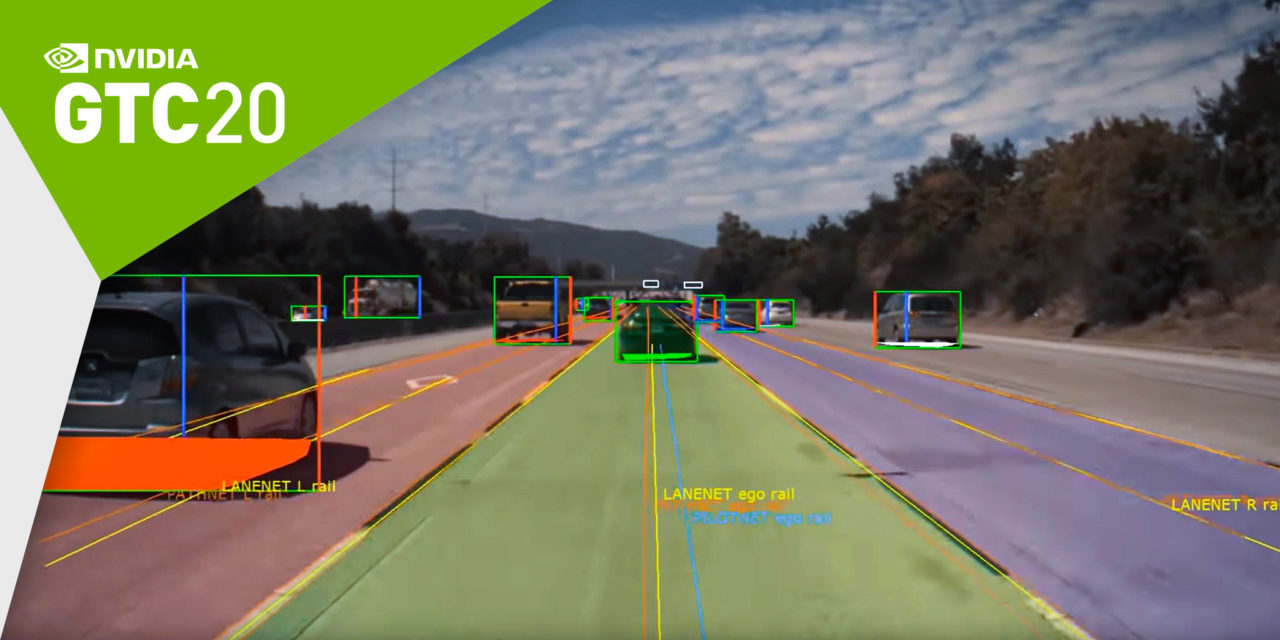

During GTC Digital, Neda Cvijetic, NVIDIA senior manager of autonomous vehicles and host of the DRIVE Labs video series, gave an inside look at a sampling of self-driving DNNs we’ve developed.

Autonomous vehicles run an array of DNNs covering perception, mapping and localization to operate safely. To humans, these tasks seem straightforward. However, they’re all complex processes that require intelligent approaches to perform successfully.

For example, to classify road objects, pedestrians and drivable space, one DNN uses a process known as panoptic segmentation, which can identify a scene with pixel-level accuracy.
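
Panoptic segmentation is generally understood as assigning every pixel both a class label and an instance ID. A minimal sketch of that idea follows. The tiny label maps, the class numbering and the `label_divisor` encoding are illustrative assumptions, not the output format of the DNN described here.

```python
# Hypothetical class IDs: 0 = drivable road, 1 = pedestrian, 2 = vehicle.
semantic = [
    [0, 0, 1, 1],
    [0, 2, 2, 1],
]

# Per-pixel instance IDs; 0 marks uncountable regions such as road.
instance = [
    [0, 0, 1, 2],
    [0, 1, 1, 2],
]


def to_panoptic(semantic, instance, label_divisor=1000):
    """Merge class and instance maps into one ID per pixel.

    Each pixel becomes class_id * label_divisor + instance_id, so both
    parts can be recovered with integer division and modulo.
    """
    return [
        [cls * label_divisor + inst for cls, inst in zip(sem_row, inst_row)]
        for sem_row, inst_row in zip(semantic, instance)
    ]


panoptic = to_panoptic(semantic, instance)
segments = sorted({pid for row in panoptic for pid in row})

for pid in segments:
    print(f"segment {pid}: class {pid // 1000}, instance {pid % 1000}")
```

In this toy scene the two pedestrians receive distinct segment IDs even though they share a class, which is what separates panoptic output from plain per-pixel classification.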

To help it perceive parking spaces in a variety of environments, developers taught the ParkNet DNN to identify a spot as a four-sided polygon rather than a rectangle, so it could discern slanted spaces as well as their entry points.
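
To see why a general four-sided polygon is more flexible than a rectangle, consider the sketch below. The `ParkingSpot` class, its fields and the coordinates are hypothetical and do not reflect ParkNet's actual output format.

```python
from dataclasses import dataclass

Point = tuple[float, float]


@dataclass
class ParkingSpot:
    # Four corners listed in order around the boundary (meters).
    corners: tuple[Point, Point, Point, Point]
    # Index i: the entry is the edge from corners[i] to corners[(i + 1) % 4].
    entry_edge: int

    def area(self) -> float:
        """Polygon area via the shoelace formula."""
        total = 0.0
        for i in range(4):
            x1, y1 = self.corners[i]
            x2, y2 = self.corners[(i + 1) % 4]
            total += x1 * y2 - x2 * y1
        return abs(total) / 2.0

    def entry_midpoint(self) -> Point:
        """Midpoint of the edge a vehicle would drive through."""
        x1, y1 = self.corners[self.entry_edge]
        x2, y2 = self.corners[(self.entry_edge + 1) % 4]
        return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


# A slanted spot: a parallelogram that an axis-aligned rectangle
# could not describe without including neighboring space.
slanted = ParkingSpot(
    corners=((0.0, 0.0), (2.5, 0.0), (4.5, 5.0), (2.0, 5.0)),
    entry_edge=0,
)

print(slanted.area())
print(slanted.entry_midpoint())
```

Storing the corners explicitly keeps the slant of the spot, and marking one edge as the entry gives a planner a concrete point to aim for.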

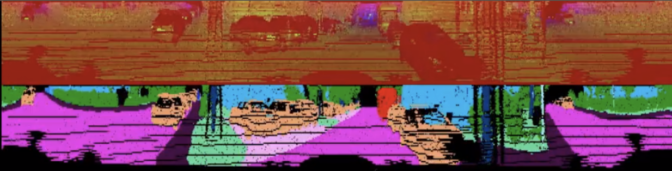

And our LidarNet DNN addresses challenges in processing lidar data for localization by fusing multiple perspectives for accurate and complete perception information.

By combining these and other DNNs and running them on high-performance in-vehicle compute, such as the NVIDIA DRIVE AGX platform, an autonomous vehicle can perform comprehensive perception, planning and control without a human driver.

- Watch on-demand: NVIDIA DRIVE Labs: An Inside Look at Autonomous Vehicle Software

The GTC Digital site hosts these and other free sessions, with new content from NVIDIA experts and the DRIVE ecosystem added every Thursday until April 23. Stay up to date and register here.