NVIDIA’s AI platform is available to any forward-thinking business — and it’s easier to use than ever.

Launched today, NVIDIA AI Enterprise 5.0 includes NVIDIA microservices, downloadable software containers for deploying generative AI applications and accelerated computing workloads. It's available from leading cloud service providers, system builders and software vendors, and it's in use at customers such as Uber.

“Our adoption of NVIDIA AI Enterprise inference software is important for meeting the high performance our users expect,” said Albert Greenberg, vice president of platform engineering at Uber. “Uber prides itself on being at the forefront of adopting and using the latest, most advanced AI innovations to deliver a customer service platform that sets the industry standard for effectiveness and excellence.”

Microservices Speed App Development

Developers are turning to microservices as an efficient way to build modern enterprise applications at a global scale. Working from a browser, they use cloud APIs, or application programming interfaces, to compose apps that can run on systems and serve users worldwide.

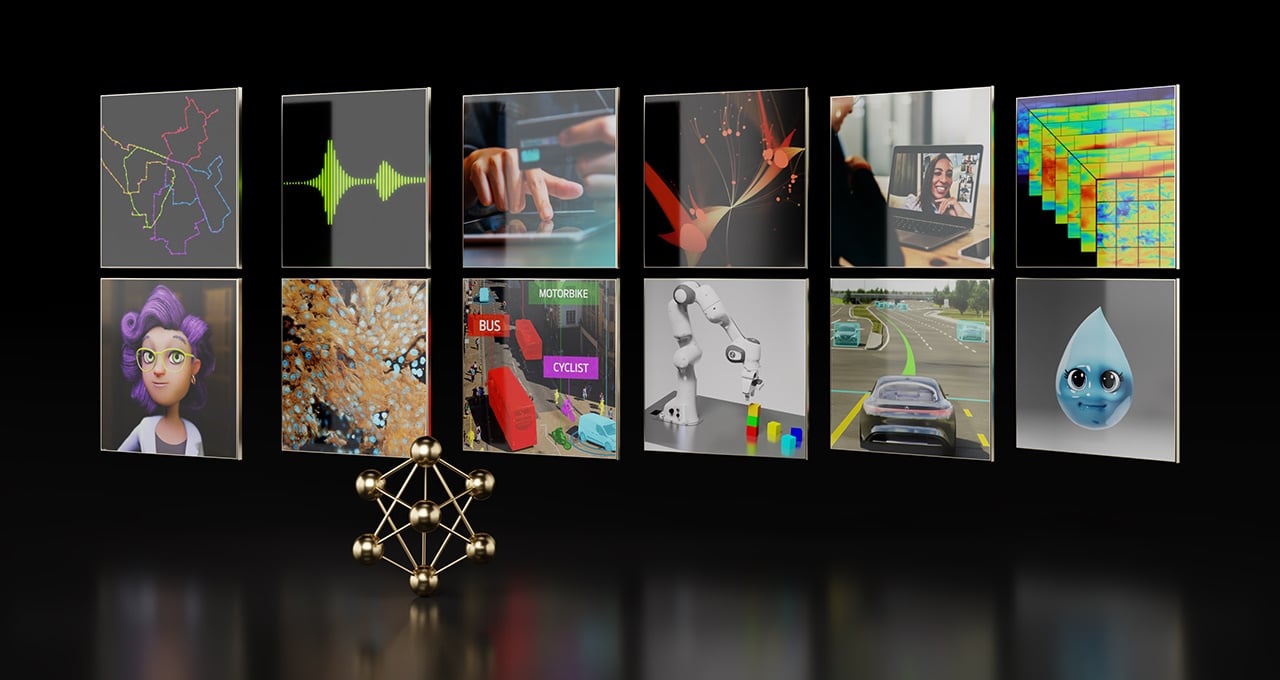

NVIDIA AI Enterprise 5.0 now includes a wide range of microservices: NVIDIA NIM for deploying AI models in production, and the NVIDIA CUDA-X collection of microservices, which includes NVIDIA cuOpt.

NIM microservices optimize inference for dozens of popular AI models from NVIDIA and its partner ecosystem.

Powered by NVIDIA inference software — including Triton Inference Server, TensorRT, and TensorRT-LLM — NIM slashes deployment times from weeks to minutes. It provides security and manageability based on industry standards as well as compatibility with enterprise-grade management tools.

NVIDIA cuOpt is a GPU-accelerated AI microservice that’s set world records for route optimization and can empower dynamic decision-making that reduces cost, time and carbon footprint. It’s one of the CUDA-X microservices that help industries put AI into production.

More capabilities are in the works. For example, the NVIDIA RAG LLM operator, now in early access, will move co-pilots and other generative AI applications that use retrieval-augmented generation from pilot to production without rewriting any code.

NVIDIA microservices are being adopted by leading application and cybersecurity platform providers including CrowdStrike, SAP and ServiceNow.

More Tools and Features

Three other updates in version 5.0 are worth noting.

The platform now packs NVIDIA AI Workbench, a developer toolkit for quickly downloading, customizing, and running generative AI projects. The software is now generally available and supported with an NVIDIA AI Enterprise license.

Version 5.0 also now supports Red Hat OpenStack Platform, the environment most Fortune 500 companies use for creating private and public cloud services. Maintained by Red Hat, it provides developers a familiar option for building virtual computing environments. IBM Consulting will help customers deploy these new capabilities.

In addition, version 5.0 expands support to cover a wide range of the latest NVIDIA GPUs, networking hardware and virtualization software.

Available to Run Anywhere

The enhanced NVIDIA AI platform is easier to access than ever.

NIM and CUDA-X microservices and all the 5.0 features will be available soon on the AWS, Google Cloud, Microsoft Azure and Oracle Cloud marketplaces.

For those who prefer to run code in their own data centers, VMware Private AI Foundation with NVIDIA will support the software, so it can be deployed in the virtualized data centers of Broadcom’s customers.

Companies have the option of running NVIDIA AI Enterprise on Red Hat OpenShift, allowing them to deploy on bare-metal or virtualized environments. It’s also supported on Canonical’s Charmed Kubernetes as well as Ubuntu.

In addition, the AI platform will be part of the software available on HPE ProLiant servers from Hewlett Packard Enterprise (HPE). HPE’s enterprise computing solution for generative AI handles inference and model fine-tuning using NVIDIA AI Enterprise.

NVIDIA Inception member Anyscale, along with Dataiku and DataRobot, three leading providers of software for managing machine learning operations (MLOps), will also support NIM on their platforms. They join an NVIDIA ecosystem of hundreds of MLOps partners, including Microsoft Azure Machine Learning, Dataloop AI, Domino Data Lab and Weights & Biases.

However they access it, NVIDIA AI Enterprise 5.0 users can benefit from software that’s secure, production-ready and optimized for performance. It can be flexibly deployed for applications in the data center, the cloud, on workstations or at the network’s edge.

NVIDIA AI Enterprise is available through leading system providers, including Cisco, Dell Technologies, HP, HPE, Lenovo and Supermicro.

Hear Success Stories at GTC

Users will share their experiences with the software at NVIDIA GTC, a global AI conference, running March 18-21 at the San Jose Convention Center.

For example, ServiceNow chief digital information officer Chris Bedi will speak on a panel about harnessing generative AI's potential. In a separate talk, ServiceNow vice president of AI Products Jeremy Barnes will discuss using NVIDIA AI Enterprise to achieve maximum developer productivity.

Executives from BlackRock, Medtronic, SAP and Uber will discuss their work in finance, healthcare, enterprise software, and business operations using the NVIDIA AI platform.

In addition, executives from ControlExpert, a Germany-based global application provider for car insurance companies, will share how they developed an AI-powered claims management solution using NVIDIA AI Enterprise software.

They’re among a growing set of companies that benefit from NVIDIA’s work evaluating hundreds of internal and external generative AI projects — all integrated into a single package that’s been tested for stability and security.

And get the full picture from NVIDIA CEO and founder Jensen Huang in his GTC keynote.

See notice regarding software product information.