An AI agent is only as accurate, relevant and timely as the data that powers it.

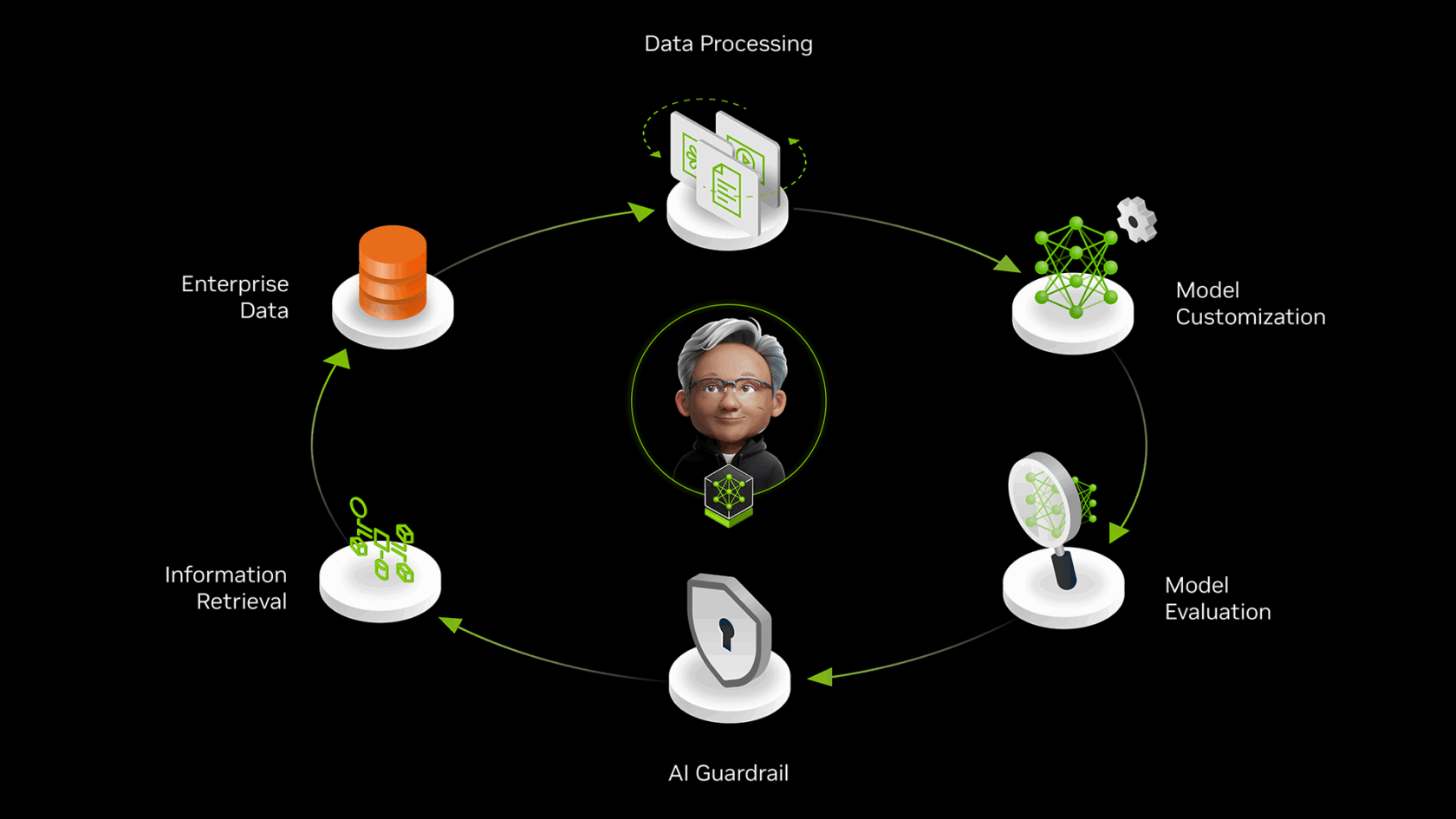

Now generally available, NVIDIA NeMo microservices are helping enterprise IT quickly build AI teammates that tap into data flywheels to scale employee productivity. The microservices provide an end-to-end developer platform for creating state-of-the-art agentic AI systems and continually optimizing them with data flywheels informed by inference and business data, as well as user preferences.

With a data flywheel, enterprise IT can onboard AI agents as digital teammates. These agents can tap into user interactions and data generated during AI inference to continuously improve model performance — turning usage into insight and insight into action.

Building Powerful Data Flywheels for Agentic AI

Without a constant stream of high-quality inputs — from databases, user interactions or real-world signals — an agent’s understanding can weaken, making responses less reliable and agents less productive.

Maintaining and improving the models that power AI agents in production requires three types of data: inference data to gather insights and adapt to evolving data patterns, up-to-date business data to provide intelligence, and user feedback data to indicate whether the model and application are performing as expected. NeMo microservices help developers tap into these three data types.
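To make the three data types concrete, here is a minimal Python sketch of how they might be combined into fine-tuning examples. It is an illustration only: the `InferenceRecord` schema, the `select_training_examples` helper and the topic-keyed `business_docs` dictionary are hypothetical names invented for this example and are not part of any NeMo API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InferenceRecord:
    """One logged model call (hypothetical schema, not a NeMo type)."""
    prompt: str
    response: str
    # User feedback: +1 (helpful), -1 (unhelpful), None (no rating given).
    feedback: Optional[int] = None


def select_training_examples(records, business_docs):
    """Keep positively rated records and attach matching business context.

    `business_docs` maps a topic keyword to the current text for that topic.
    """
    examples = []
    for rec in records:
        if rec.feedback != 1:
            continue  # skip unrated or negatively rated interactions
        # A simple keyword match stands in for a real retrieval step.
        context = [text for topic, text in business_docs.items()
                   if topic in rec.prompt.lower()]
        examples.append({
            "prompt": rec.prompt,
            "context": context,
            "completion": rec.response,
        })
    return examples


if __name__ == "__main__":
    logs = [
        InferenceRecord("What is the refund policy?", "Refunds take 30 days.", 1),
        InferenceRecord("Track my shipping status", "I cannot help with that.", -1),
    ]
    docs = {"refund": "Refunds are accepted within 30 days of purchase."}
    print(select_training_examples(logs, docs))
```

In this sketch, inference data supplies the prompt and response pairs, user feedback decides which pairs are worth learning from, and business data supplies the up-to-date context attached to each example.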

NeMo microservices speed AI agent development by giving developers end-to-end tools for curating data and for customizing, evaluating and guardrailing the models that drive their agents.

NVIDIA NeMo microservices, including NeMo Customizer, NeMo Evaluator and NeMo Guardrails, can be used alongside NeMo Retriever and NeMo Curator to make it easier for enterprises to build, optimize and scale AI agents through custom enterprise data flywheels. For example:

- NeMo Customizer accelerates large language model fine-tuning, delivering up to 1.8x higher training throughput. This high-performance, scalable microservice uses popular post-training techniques including supervised fine-tuning and low-rank adaptation.

- NeMo Evaluator simplifies the evaluation of AI models and workflows on custom and industry benchmarks with just five application programming interface (API) calls.

- NeMo Guardrails improves compliance protection by up to 1.4x with only half a second of additional latency, helping organizations implement robust safety and security measures that align with organizational policies and guidelines.
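One turn of a data flywheel built from the three roles above (customize, evaluate, guardrail) could be sketched as follows. This is a conceptual outline under stated assumptions, not the NeMo microservices API: `flywheel_step` and its `customize`, `evaluate` and `passes_guardrails` callables are hypothetical placeholders that a team would back with its own service calls.

```python
def flywheel_step(current_model, examples, customize, evaluate,
                  passes_guardrails, min_gain=0):
    """Run one customize -> evaluate -> guardrail cycle.

    All callables are hypothetical stand-ins supplied by the caller:
      customize(model, examples) -> candidate model
      evaluate(model)            -> numeric benchmark score
      passes_guardrails(model)   -> True if safety checks pass
    Returns the model that should be served next and its score.
    """
    candidate = customize(current_model, examples)
    baseline_score = evaluate(current_model)
    candidate_score = evaluate(candidate)

    # Promote only if the candidate beats the baseline by more than
    # min_gain and also clears the guardrail checks.
    improved = candidate_score - baseline_score > min_gain
    if improved and passes_guardrails(candidate):
        return candidate, candidate_score
    return current_model, baseline_score


if __name__ == "__main__":
    # Toy stand-ins: a "model" is a dict holding an integer score.
    model = {"score": 50}
    tuned, score = flywheel_step(
        model,
        examples=["example-1", "example-2"],
        customize=lambda m, ex: {"score": m["score"] + len(ex)},
        evaluate=lambda m: m["score"],
        passes_guardrails=lambda m: True,
    )
    print(tuned, score)
```

The promotion gate is the design choice worth noting: a fine-tuned candidate replaces the production model only when it both scores higher and passes safety checks, so each cycle can improve the agent without regressing it.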

With NeMo microservices, developers can build data flywheels that boost AI agent accuracy and efficiency. Deployed through the NVIDIA AI Enterprise software platform, NeMo microservices are easy to operate and can run on any accelerated computing infrastructure, on premises or in the cloud, with enterprise-grade security, stability and support.

The microservices have become generally available at a time when enterprises are building large-scale multi-agent systems, where hundreds of specialized agents — with distinct goals and workflows — collaborate to tackle complex tasks as digital teammates, working alongside employees to assist, augment and accelerate work across functions.

This enterprise-wide impact positions AI agents as a trillion-dollar opportunity — with applications spanning automated fraud detection, shopping assistants, predictive machine maintenance and document review — and underscores the critical role data flywheels play in transforming business data into actionable insights.

Industry Pioneers Boost AI Agent Accuracy With NeMo Microservices

NVIDIA partners and industry pioneers are using NeMo microservices to build responsive AI agent platforms so that digital teammates can help get more done.

Working with Arize and Quantiphi, AT&T has built an advanced AI-powered agent using NVIDIA NeMo, designed to process a knowledge base of nearly 10,000 documents, refreshed weekly. The scalable, high-performance AI agent is fine-tuned for three key business priorities: speed, cost efficiency and accuracy — all increasingly critical as adoption scales.

Using NeMo Customizer and Evaluator to fine-tune a Mistral 7B model, AT&T boosted AI agent accuracy by up to 40%, helping it deliver personalized services, prevent fraud and optimize network performance.

BlackRock is working with NeMo microservices for agentic AI capabilities in its Aladdin tech platform, which unifies the investment management process through a common data language.

Teaming with Galileo, Cisco’s Outshift team is using NVIDIA NeMo microservices to power a coding assistant that delivers 40% fewer tool selection errors and achieves up to 10x faster response times.

Nasdaq is accelerating its Nasdaq Gen AI Platform with NeMo Retriever microservices and NVIDIA NIM microservices. NeMo Retriever enhanced the platform’s search capabilities, improving accuracy and response times by up to 30% while also reducing costs.

Broad Model and Partner Ecosystem Support for NeMo Microservices

NeMo microservices support a broad range of popular open models, including Llama, the Microsoft Phi family of small language models, Google Gemma, Mistral and Llama Nemotron Ultra, currently the top open model on scientific reasoning, coding and complex math benchmarks.

Meta has tapped NVIDIA NeMo microservices through new connectors for Meta Llamastack. Users can access the same capabilities — including Customizer, Evaluator and Guardrails — via APIs, enabling them to run the full suite of agent-building workflows within their environment.

“With Llamastack integration, agent builders can implement data flywheels powered by NeMo microservices,” said Raghotham Murthy, software engineer, GenAI, at Meta. “This allows them to continuously optimize models to improve accuracy, boost efficiency and reduce total cost of ownership.”

Leading AI software providers, including Cloudera, Datadog, Dataiku, DataRobot, DataStax, SuperAnnotate and Weights & Biases, have integrated NeMo microservices into their platforms. Developers can use NeMo microservices in popular AI frameworks including CrewAI, Haystack by deepset, LangChain, LlamaIndex and Llamastack.

Enterprises can build data flywheels with NeMo Retriever microservices using NVIDIA AI Data Platform offerings from NVIDIA-Certified Storage partners including DDN, Dell Technologies, Hewlett Packard Enterprise, Hitachi Vantara, IBM, NetApp, Nutanix, Pure Storage, VAST Data and WEKA.

Leading enterprise platforms including Amdocs, Cadence, Cohesity, SAP, ServiceNow and Synopsys are using NeMo Retriever microservices in their AI agent solutions.

Enterprises can run AI agents on NVIDIA-accelerated infrastructure, networking and software from leading system providers including Cisco, Dell, Hewlett Packard Enterprise and Lenovo.

Consulting giants including Accenture, Deloitte and EY are building AI agent platforms for enterprises using NeMo microservices.

Developers can download NeMo microservices from the NVIDIA NGC catalog. The microservices can be deployed as part of NVIDIA AI Enterprise with extended-life software branches for API stability, proactive security remediation and enterprise-grade support.