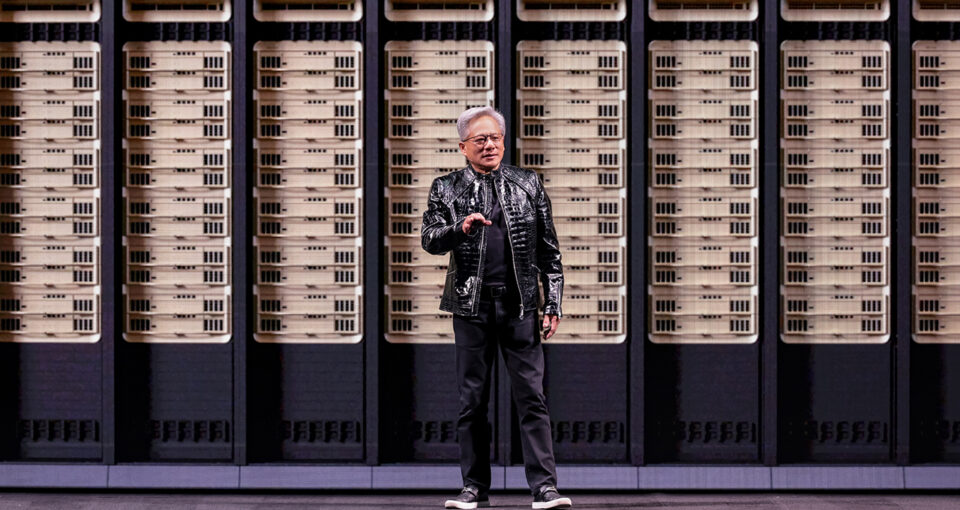

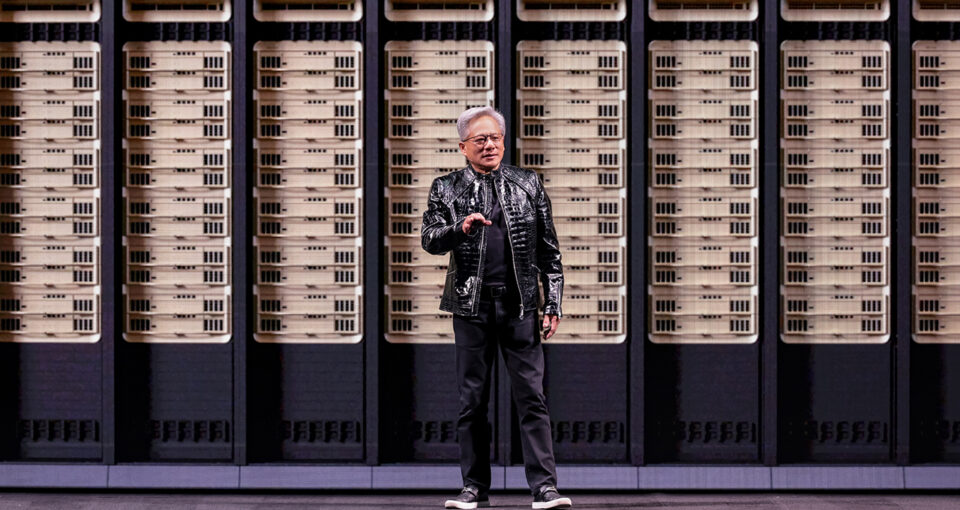

NVIDIA founder and CEO Jensen Huang took the stage at the Fontainebleau Las Vegas to open CES 2026,…

AI is powering breakthroughs across industries, helping enterprises operate with greater intelligence and speed. As AI factories scale, the next generation of enterprise AI depends on infrastructure that can efficiently…

NVIDIA DGX SuperPOD is paving the way for large-scale system deployments built on the NVIDIA Rubin platform, the next leap forward in AI computing. At the CES trade show…

Open-source AI is accelerating innovation across industries, and NVIDIA DGX Spark and DGX Station are built to help developers turn innovation into impact. NVIDIA today unveiled at the CES trade…

The NVIDIA RTX PRO 5000 72GB Blackwell GPU is now generally available, bringing robust agentic and generative AI capabilities powered by the NVIDIA Blackwell architecture to more desktops and professionals…

The Hao AI Lab research team at the University of California San Diego, at the forefront of pioneering AI model innovation, recently received an NVIDIA DGX B200 system…

NVIDIA today announced it has acquired SchedMD, the leading developer of Slurm, the open-source workload management system for high-performance computing (HPC) and AI, to help strengthen the open-source…

Unveiling what it describes as the most capable model series yet for professional knowledge work, OpenAI launched GPT-5.2 today. The model was trained and deployed on NVIDIA infrastructure, including NVIDIA…

As the scale and complexity of AI infrastructure grow, data center operators need continuous visibility into factors including performance, temperature and power usage. These insights enable data center operators to…