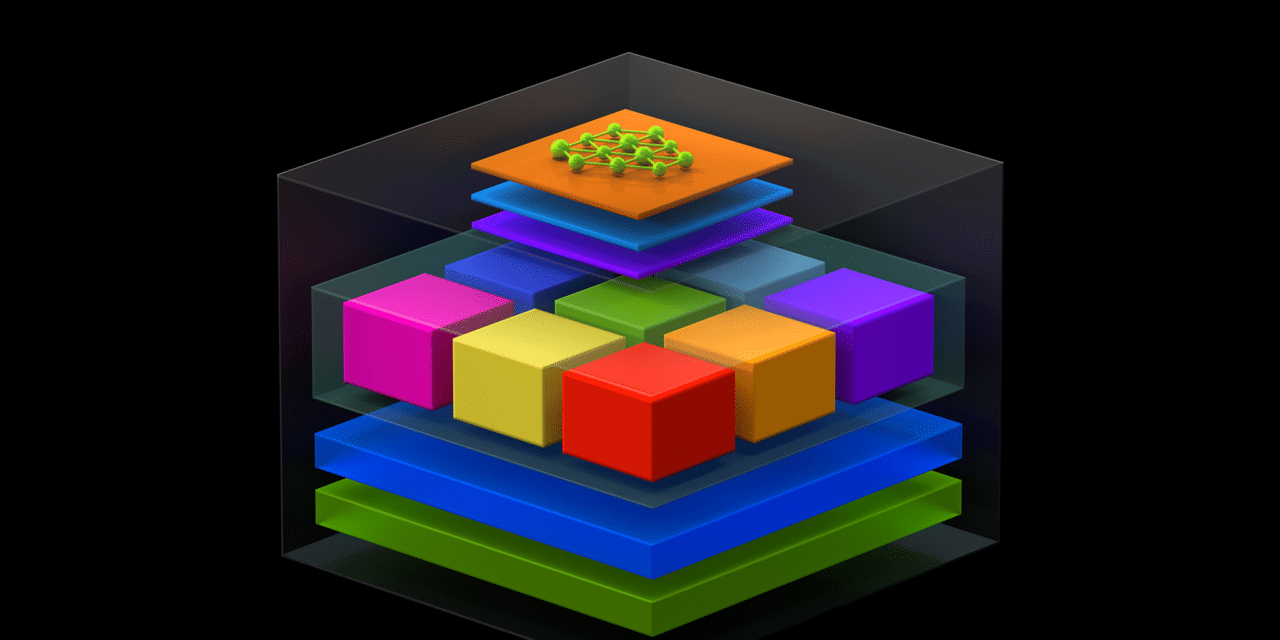

If data is the new oil, then AI is the refinery. The raw data enterprises create and gather is like black crude: its real value shines only after it’s processed into gasoline and other consumable products.

AI refines the vast deposits of data that organizations hold into insights that improve customer experiences, enable new business models, drive operational efficiencies and fuel competitive advantage.

NVIDIA’s GPU computing platform, including NGC, NGC-Ready systems and NGC Support services, powers the AI refinery. NGC is the software hub that provides GPU-optimized frameworks, pre-trained models and toolkits to train and deploy AI in production.

Today we’re expanding NGC to help developers build AI faster with toolkits and SDKs, and to share and deploy it securely through a private registry.

NVIDIA AI Toolkits and SDKs Simplify Training, Inference and Deployment

NVIDIA AI toolkits provide libraries and tools to train, fine-tune, optimize and deploy pre-trained NGC models across a broad range of industries and AI applications. They include:

- An AI-assisted annotation tool to help users label their datasets for training.

- The TAO toolkit to fine-tune pre-trained models with user data, saving the cost of training from scratch.

- Federated learning that preserves privacy by allowing users to collaborate and train AI models without sharing private data between clients.

- The NeMo toolkit to quickly build state-of-the-art models for speech recognition and natural language processing.

- The Service Maker toolkit that exposes trained models as a gRPC service that can be scaled and easily deployed on a Kubernetes service.

Models built using the toolkits can be integrated inside client applications and deployed in production with the software development kits offered by NVIDIA. Leveraging TensorRT and the Triton Inference Server as foundational building blocks, the deployment SDKs span industries, including conversational AI with NVIDIA Riva, recommender systems with NVIDIA Merlin, medical imaging with NVIDIA Clara, video analytics with NVIDIA Metropolis and NVIDIA DeepStream, robotics with NVIDIA Isaac Sim and 5G acceleration with NVIDIA Aerial.

Safeguarding IP with NGC Private Registry

Custom models built using these AI toolkits are a company’s highly guarded intellectual property. Organizations need to ensure that these models can be securely shared, modified and deployed into production environments.

That’s why we’ve extended NGC with a private registry that allows organizations to sign, store, manage and share custom-built containers and models securely within teams across their enterprise.

The registry is equipped with several new security capabilities. Containers pushed to the private registry are automatically scanned for common vulnerabilities and exposures (CVEs). Because containers with critical- or high-severity CVEs are flagged, enterprises can remedy issues early in development, helping ensure that only secure software is released to production.
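As a rough illustration of that flagging policy, here is a minimal Python sketch. The `Finding` class, the severity labels and the sample report are assumptions made for this example; they are not the NGC scanner's actual report format or API.

```python
from dataclasses import dataclass

# Severity levels treated as blocking, following the "critical or high" rule in the text.
BLOCKING_SEVERITIES = {"critical", "high"}


@dataclass(frozen=True)
class Finding:
    """One vulnerability reported for a container image (hypothetical shape)."""
    cve_id: str    # placeholder identifier, not a real CVE number
    severity: str  # e.g. "critical", "high", "medium", "low"


def blocking_findings(findings):
    """Return only the findings severe enough to flag the container."""
    return [f for f in findings if f.severity.lower() in BLOCKING_SEVERITIES]


def is_flagged(findings):
    """A container is flagged if it has at least one blocking finding."""
    return bool(blocking_findings(findings))


# Hypothetical scan report for a single container image.
report = [
    Finding("CVE-EXAMPLE-0001", "low"),
    Finding("CVE-EXAMPLE-0002", "High"),
    Finding("CVE-EXAMPLE-0003", "medium"),
]

if is_flagged(report):
    for finding in blocking_findings(report):
        print(f"fix before release: {finding.cve_id} ({finding.severity})")
```

A check like this could run as a gate in a build pipeline, so that a flagged image never reaches the deployment stage.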

The private registry will also soon allow publishers and developers to sign their containers. This will give software users the confidence that they’re consuming content that is authentic, intact and from a verified source.

As data scientists collaborate across their organizations and iterate on training, they produce many models, each with unique parameters. The NGC model-versioning system makes it easy to capture these unique variables, discover the appropriate models and, ultimately, deploy the right one into production.
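To make the idea concrete, the sketch below models a tiny in-memory catalog that records each version's parameters and lets a user pick the best match. It is only an illustration: `ModelCatalog`, its methods, the `accuracy` field and the sample parameters are all hypothetical and do not reflect how NGC stores or queries model versions.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    """One trained variant of a model plus the settings that produced it."""
    name: str
    version: int
    params: dict = field(default_factory=dict)
    accuracy: float = 0.0  # assumed evaluation metric for this example


class ModelCatalog:
    """Hypothetical in-memory stand-in for a model-versioning system."""

    def __init__(self):
        self._versions = []

    def register(self, name, params, accuracy):
        # Version numbers count up per model name, starting at 1.
        version = 1 + sum(1 for v in self._versions if v.name == name)
        entry = ModelVersion(name, version, dict(params), accuracy)
        self._versions.append(entry)
        return entry

    def find(self, name, **required):
        """Return versions of `name` whose params match every required value."""
        return [
            v for v in self._versions
            if v.name == name
            and all(v.params.get(key) == value for key, value in required.items())
        ]

    def best(self, name, **required):
        """Return the matching version with the highest accuracy, or None."""
        candidates = self.find(name, **required)
        return max(candidates, key=lambda v: v.accuracy) if candidates else None


catalog = ModelCatalog()
catalog.register("defect-detector", {"precision": "fp32", "epochs": 10}, 0.91)
catalog.register("defect-detector", {"precision": "fp16", "epochs": 20}, 0.94)
catalog.register("defect-detector", {"precision": "fp16", "epochs": 10}, 0.92)

chosen = catalog.best("defect-detector", precision="fp16")
print(chosen.version, chosen.params)
```

Keeping the parameters next to each version is what makes the later lookup possible: the deployment step can ask for a constraint it cares about, such as precision, instead of guessing which file is the right one.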

Securely Deploy AI Models at the Edge

AI models are increasingly being deployed at the edge, closer to the point of action, enabling the “smart everything” revolution. However, edge locations often don’t have the IT personnel or security protocols present in a data center, which poses potential security challenges.

Announced yesterday, the EGX A100 has a confidential AI enclave that uses a new GPU security engine to load encrypted AI models, further preventing the theft of valuable IP. The NGC private registry will enable developers to encrypt AI models and transfer them securely to the edge. Encrypting models at rest and in transit, from the private registry to GPU memory, reduces the exposure to attack of IP encoded in AI models running at the edge.

The private registry ultimately provides users with a cloud-hosted platform to secure the custom containers and models they will deploy at the edge.

Run Seamlessly on x86, Arm and POWER Systems

AI workflows span the data center, cloud and the edge and may need to run on different CPU architectures. To enable flexibility, we’ve upgraded NGC with multi-architecture support.

This allows popular runtimes, including Docker, CRI-O, containerd and Singularity, to automatically select and run an image variant that matches the system architecture, simplifying the deployment process across heterogeneous environments.
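The selection step can be pictured with a short Python sketch. It assumes the image is published as an OCI-style image index, where each entry carries a `platform` with `architecture` and `os` fields; the index contents, digests and the `select_variant` helper are made up for illustration, and real runtimes implement this logic internally.

```python
import platform

# Map common machine names to the architecture labels used in OCI image indexes.
ARCH_ALIASES = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
    "ppc64le": "ppc64le",
}


def normalize_arch(machine):
    """Translate a machine name such as 'x86_64' into an index label such as 'amd64'."""
    key = machine.lower()
    return ARCH_ALIASES.get(key, key)


def select_variant(index, machine=None, os_name="linux"):
    """Return the digest of the variant matching the given (or local) architecture."""
    arch = normalize_arch(machine or platform.machine())
    for entry in index["manifests"]:
        target = entry["platform"]
        if target["architecture"] == arch and target["os"] == os_name:
            return entry["digest"]
    raise LookupError(f"no image variant for {os_name}/{arch}")


# Hypothetical multi-architecture index with placeholder digests.
EXAMPLE_INDEX = {
    "manifests": [
        {"digest": "sha256:example-amd64",
         "platform": {"architecture": "amd64", "os": "linux"}},
        {"digest": "sha256:example-arm64",
         "platform": {"architecture": "arm64", "os": "linux"}},
        {"digest": "sha256:example-ppc64le",
         "platform": {"architecture": "ppc64le", "os": "linux"}},
    ]
}

print(select_variant(EXAMPLE_INDEX, machine="aarch64"))
```

Because the lookup is keyed on the host's own architecture, the same image name can be used in deployment scripts on x86, Arm and POWER systems alike.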

In addition to x86- and Arm-based containers, NGC now supports POWER architecture thanks to IBM bringing its Visual Insights software onto the registry. IBM Visual Insights makes computer vision with deep learning more accessible to business users, accelerating AI vision deployments and increasing productivity.

Get Started with NGC Today

Quickly build your AI solutions with GPU-optimized software tools from NGC, share and collaborate with the private registry, and securely deploy across on-premises, cloud and edge systems. Get started at ngc.nvidia.com.