Whether advancing science, building self-driving cars or gathering business insight from mountains of data, data scientists, researchers and developers need powerful GPU compute. They also need the right software tools.

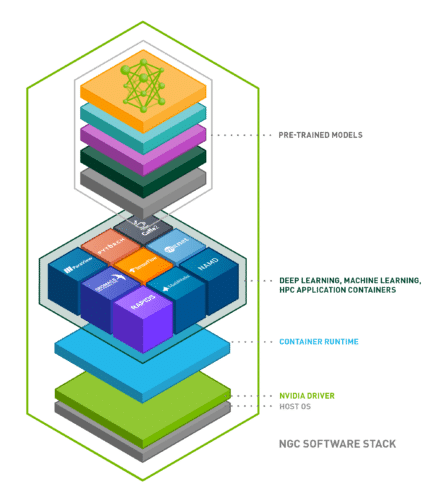

AI is complex and building models can be time consuming. So container technology plays a vital role in simplifying complex deployments and workflows.

At GTC 2019, we’ve supercharged NGC — a hub of essential software for deep learning, machine learning, HPC and more — with pre-trained AI models, model training scripts and industry-specific software stacks.

With these new tools, no matter your skill level, you can quickly and easily realize value with AI.

NGC Takes Care of the Plumbing, So You Can Focus on Your Business

Data scientists’ time is expensive, and the compute resources they need to develop models are in high demand. If they spend hours or even days compiling a framework from source only to hit errors, that’s a loss of productivity, revenue and competitive edge.

Thousands of data scientists and developers have pulled performance-optimized deep learning framework containers, such as TensorFlow and PyTorch, from NGC. These containers are updated monthly, so users can skip time-consuming and error-prone deployment steps and focus on building their solutions instead.
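Because the framework containers are refreshed monthly, a specific release is usually selected by its tag. The sketch below builds an image reference and the matching `docker pull` invocation. It assumes NGC images live under `nvcr.io/nvidia/<framework>` with tags of the form `YY.MM-py3` (for example `19.03-py3`); check the tag list on ngc.nvidia.com before relying on that pattern. The helper names `ngc_image` and `pull_command` are hypothetical, and the command is only built, not executed.

```python
from datetime import date
from typing import List

# Assumption: NGC framework images are published as nvcr.io/nvidia/<framework>
# with one monthly tag per release in the form YY.MM-py3 (e.g. 19.03-py3).
REGISTRY = "nvcr.io/nvidia"


def ngc_image(framework: str, release: date, python_tag: str = "py3") -> str:
    """Build the image reference for a monthly NGC framework release (hypothetical helper)."""
    return f"{REGISTRY}/{framework}:{release:%y.%m}-{python_tag}"


def pull_command(image: str) -> List[str]:
    """Return the docker CLI arguments that would pull the image; nothing is run here."""
    return ["docker", "pull", image]


if __name__ == "__main__":
    image = ngc_image("pytorch", date(2019, 3, 1))
    print(image)  # nvcr.io/nvidia/pytorch:19.03-py3
    print(" ".join(pull_command(image)))  # docker pull nvcr.io/nvidia/pytorch:19.03-py3
```

Keeping the month in the tag, rather than pulling a floating tag, makes it easy to pin a project to a known-good framework release and upgrade deliberately.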

NGC lowers the barrier to entry for companies that want to adopt AI and other emerging computing workloads. And for companies already using them, it helps deliver greater value, faster.

Accelerate AI Projects with Pre-Trained Models and Training Scripts

Many AI applications have common needs: classification, object detection, language translation, text-to-speech, recommender engines, sentiment analysis and more. When developing applications or services with these capabilities, it’s much faster to tune a pre-trained model for your use case than to start from scratch.

NGC’s new model registry provides data scientists and researchers with a repository of the most popular AI models, giving them a starting point to retrain, benchmark and rapidly build their AI applications.

NGC enterprise account holders can also upload, share and version their own models across their organizations and teams through a hosted private registry. Because the model registry is accessible both through https://ngc.nvidia.com and through a command-line interface, users can work with it in a hybrid cloud environment and give their organizations controlled access to versioned models.
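To make "upload, share and version with controlled access" concrete, here is a toy, in-memory sketch of the bookkeeping such a private registry performs. It is not the NGC registry API: the class `PrivateModelRegistry`, its methods and the artifact URIs are all hypothetical, chosen only to illustrate per-model version numbers, an owning team, and a per-model list of teams allowed to read it.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class ModelVersion:
    version: int
    artifact_uri: str  # hypothetical location of the stored weights


@dataclass
class PrivateModelRegistry:
    """Toy stand-in for a private model registry (hypothetical API, not NGC's)."""

    models: Dict[str, List[ModelVersion]] = field(default_factory=dict)  # name -> versions, oldest first
    owners: Dict[str, str] = field(default_factory=dict)  # name -> owning team
    readers: Dict[str, Set[str]] = field(default_factory=dict)  # name -> teams allowed to read

    def upload(self, team: str, name: str, artifact_uri: str) -> int:
        """Add a new version of a model; only the owning team may upload."""
        owner = self.owners.setdefault(name, team)  # first uploader becomes the owner
        if owner != team:
            raise PermissionError(f"team {team!r} does not own model {name!r}")
        versions = self.models.setdefault(name, [])
        versions.append(ModelVersion(len(versions) + 1, artifact_uri))
        self.readers.setdefault(name, {team})
        return versions[-1].version

    def share(self, owner: str, name: str, team: str) -> None:
        """Grant another team read access to every version of a model."""
        if self.owners.get(name) != owner:
            raise PermissionError(f"team {owner!r} cannot share model {name!r}")
        self.readers[name].add(team)

    def get(self, team: str, name: str, version: Optional[int] = None) -> ModelVersion:
        """Fetch a specific version, or the latest one when version is None."""
        if team not in self.readers.get(name, set()):
            raise PermissionError(f"team {team!r} has no access to model {name!r}")
        versions = self.models[name]
        if version is None:
            return versions[-1]
        if not 1 <= version <= len(versions):
            raise KeyError(f"model {name!r} has no version {version}")
        return versions[version - 1]


if __name__ == "__main__":
    registry = PrivateModelRegistry()
    registry.upload("vision", "detector", "store://detector/v1")
    registry.upload("vision", "detector", "store://detector/v2")
    registry.share("vision", "detector", "retail-analytics")
    print(registry.get("retail-analytics", "detector"))  # latest version (2)
```

Versions are immutable and numbered in upload order, so a team that pins `version=1` keeps getting the same weights even after the owner publishes an update.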

NGC also provides model training scripts that follow best practices for mixed-precision training, taking advantage of the NVIDIA Tensor Cores that enable NVIDIA Turing and Volta GPUs to deliver up to 3x faster training and inference than previous generations.
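One practice mixed-precision training typically relies on is loss scaling: very small gradients underflow to zero in 16-bit floating point, so the loss is multiplied by a scale factor before backpropagation and the gradients are divided by it again in 32-bit precision before the weight update. The NumPy sketch below simulates that effect for a single gradient value. The fixed scale of 1024 is an assumption for illustration; many implementations adjust the scale dynamically, and this is not the code inside NGC's training scripts.

```python
import numpy as np

LOSS_SCALE = np.float32(1024.0)  # assumption: a fixed (static) loss scale


def fp16_gradient(true_grad: float, loss_scale: np.float32) -> np.float32:
    """Simulate a gradient computed in FP16 and then unscaled in FP32.

    By the chain rule, scaling the loss by `loss_scale` scales every gradient
    by the same factor, so the scaling is modeled as a multiply before the
    value is stored in float16.
    """
    scaled_fp16 = np.float16(true_grad * loss_scale)  # value as held in FP16
    return np.float32(scaled_fp16) / loss_scale  # unscale in FP32 before the update


if __name__ == "__main__":
    grad = 1e-8  # below the smallest positive float16 value (about 6e-8)
    print(fp16_gradient(grad, np.float32(1.0)))  # underflows to zero without scaling
    print(fp16_gradient(grad, LOSS_SCALE))  # close to 1e-8 with loss scaling
```

The scale only has to be large enough to lift small gradients into float16's representable range without pushing large ones past its maximum (about 65504), which is why adaptive schemes lower the scale when they detect overflow.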

By offering models and training scripts that have been tested for accuracy and convergence, NGC gives users a central, curated collection of the most important NVIDIA deep learning assets.

Training and Deployment Stacks for Medical Imaging and Smart Cities

In any industry, an efficient workflow starts with a pre-trained model and applies transfer learning with new data. It then prunes and optimizes the network and deploys it to edge devices for inference. Combining pre-trained models with transfer learning eliminates the high costs of large-scale data collection, labeling and training models from scratch, giving domain experts a jumpstart on their deep learning workflows.
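The first steps of that workflow can be sketched with NumPy: keep a "pre-trained" feature extractor frozen, train only a new classification head on the new data, then prune the head by zeroing its smallest-magnitude weights. Everything here is a hypothetical stand-in. A fixed random matrix plays the role of pre-trained weights, the two-class dataset is synthetic, and magnitude pruning is one common pruning technique, not necessarily the one NVIDIA's toolkits use. Optimization and edge deployment are out of scope.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for a pre-trained backbone (assumption): in practice these weights
# would come from a model registry; here a fixed random matrix plays that role.
IN_DIM, FEAT_DIM = 8, 32
backbone = rng.normal(scale=0.5 / np.sqrt(IN_DIM), size=(IN_DIM, FEAT_DIM))


def extract_features(x):
    """Frozen feature extractor: its weights are never updated below."""
    return np.tanh(x @ backbone)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_head(feats, labels, steps=500, lr=1.0):
    """Transfer learning step: fit only a new logistic-regression head by gradient descent."""
    w, b = np.zeros(feats.shape[1]), 0.0
    for _ in range(steps):
        err = sigmoid(feats @ w + b) - labels  # gradient of the log loss w.r.t. the logits
        w -= lr * feats.T @ err / len(labels)
        b -= lr * err.mean()
    return w, b


def prune_by_magnitude(w, fraction):
    """Zero out the smallest-magnitude `fraction` of the head weights."""
    pruned = w.copy()
    pruned[np.argsort(np.abs(w))[: int(fraction * w.size)]] = 0.0
    return pruned


def accuracy(feats, labels, w, b):
    return float(((feats @ w + b > 0) == labels.astype(bool)).mean())


# Hypothetical "new data" from the target domain: a simple two-class problem.
x = rng.normal(size=(1000, IN_DIM))
y = (x[:, 0] + x[:, 1] > 0).astype(float)
x_train, y_train, x_test, y_test = x[:800], y[:800], x[800:], y[800:]

w, b = train_head(extract_features(x_train), y_train)
w_pruned = prune_by_magnitude(w, fraction=0.5)

test_feats = extract_features(x_test)
print("held-out accuracy, dense head: ", accuracy(test_feats, y_test, w, b))
print("held-out accuracy, pruned head:", accuracy(test_feats, y_test, w_pruned, b))
```

Only the 33 head parameters are trained, which is what lets transfer learning get by with far less labeled data than training the whole network from scratch; the pruned head then carries half as many nonzero weights into deployment.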

However, the details of training, optimization and deployment differ dramatically by industry. NGC now provides industry-specific workflows for smart cities and medical imaging.

For smart cities, the NVIDIA Transfer Learning Toolkit for Streaming Analytics provides transfer learning tailored to intelligent video analytics workloads, such as object detection and classification on frames of camera video. The retrained, pruned and optimized models are then deployed to NVIDIA Tesla or Jetson platforms through the NVIDIA DeepStream SDK for smart cities.

For medical imaging, the NVIDIA Clara Train SDK enables medical institutions to start with pre-trained models of MRI scans for organ segmentation and use transfer learning to improve those models based on datasets owned by that institution. Clara Train produces optimized models, which are then deployed using the NVIDIA Clara Deploy SDK to provide enhanced segmentation on new patient scans.

NGC-Ready Systems — Validated Platforms Optimized for AI Workloads

NGC-Ready systems, offered by top system manufacturers around the world, are validated by NVIDIA so data scientists and developers can quickly get their deep learning and machine learning workloads up and running optimally.

Systems built for maximum performance use NVIDIA V100 GPUs, with 640 Tensor Cores and up to 32GB of memory. Systems built for maximum utilization use the new NVIDIA T4 GPUs, which excel across the full range of accelerated workloads: machine learning, deep learning, virtual desktops and HPC. View a list of validated NGC-Ready systems.

Deploy AI Infrastructure with Confidence

Adoption of AI across industries has skyrocketed, requiring IT teams to support new types of workloads, software stacks and hardware for a diverse set of users. While the playing field has changed, the need to minimize system downtime and keep users productive remains critical.

To address this concern, we’ve introduced NVIDIA NGC Support Services, which provide enterprise-grade support to ensure NGC-Ready systems run optimally and maximize system utilization and user productivity. These new services provide IT teams with direct access to NVIDIA subject-matter experts to quickly address software issues and minimize system downtime.

NGC Support Services are available through sellers of NGC-Ready systems. They are available immediately from Cisco for the Cisco UCS C480 ML, its NGC-Ready validated NVIDIA V100 system. Starting in June, HPE will offer the services for the HPE ProLiant DL380 Gen10 server, a validated NGC-Ready NVIDIA T4 system. Several other OEMs are expected to begin selling the services in the coming months.

Get Started with NGC Today

Pull and run the NGC containers and pre-trained models at no charge on GPU-powered systems or cloud instances at ngc.nvidia.com.