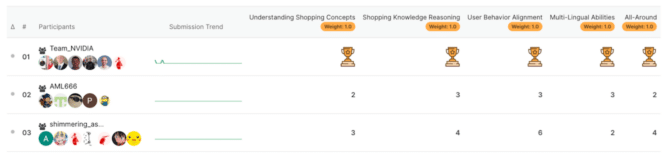

Team NVIDIA has triumphed at the Amazon KDD Cup 2024, securing first place Friday across all five competition tracks.

The team (NVIDIANs Ahmet Erdem, Benedikt Schifferer, Chris Deotte, Gilberto Titericz, Ivan Sorokin and Simon Jegou) demonstrated its prowess in generative AI, winning in categories that included text generation, multiple-choice questions, named entity recognition, ranking and retrieval.

The competition, themed “Multi-Task Online Shopping Challenge for LLMs,” asked participants to solve various challenges using limited datasets.

“The new trend in LLM competitions is that they don’t give you training data,” said Deotte, a senior data scientist at NVIDIA. “They give you 96 example questions — not enough to train a model — so we came up with 500,000 questions on our own.”

Deotte explained that the NVIDIA team generated a variety of questions by writing some themselves, using a large language model to create others, and transforming existing e-commerce datasets.
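As a rough illustration of the third approach, transforming an existing e-commerce dataset, the sketch below turns product records into multiple-choice questions. The records, field names, prompt wording and the helper names `make_multiple_choice` and `build_dataset` are all hypothetical; the article does not describe the team's actual pipeline.

```python
import random

# Hypothetical product records; the field names are assumptions for illustration.
PRODUCTS = [
    {"title": "Stainless steel water bottle, 750 ml", "category": "Sports & Outdoors"},
    {"title": "Wireless noise-cancelling headphones", "category": "Electronics"},
    {"title": "Organic cotton baby blanket", "category": "Baby"},
    {"title": "Cast iron skillet, 12 inch", "category": "Home & Kitchen"},
]


def make_multiple_choice(record, all_categories, rng, num_options=4):
    """Turn one product record into a multiple-choice training example."""
    # Wrong answers are drawn from the other categories in the dataset.
    distractors = [c for c in all_categories if c != record["category"]]
    options = rng.sample(distractors, num_options - 1) + [record["category"]]
    rng.shuffle(options)
    lines = [f"Which category best fits the product '{record['title']}'?"]
    lines += [f"{i}. {option}" for i, option in enumerate(options)]
    return {
        "prompt": "\n".join(lines),
        # The answer is the index of the correct option after shuffling.
        "answer": str(options.index(record["category"])),
    }


def build_dataset(products, seed=0):
    """Build one question per product with a fixed seed for reproducibility."""
    rng = random.Random(seed)
    categories = sorted({p["category"] for p in products})
    return [make_multiple_choice(p, categories, rng) for p in products]


if __name__ == "__main__":
    for example in build_dataset(PRODUCTS):
        print(example["prompt"])
        print("Answer:", example["answer"])
        print()
```

The same pattern could be repeated with other templates (ranking, retrieval, entity extraction) to cover more task types from a single source dataset.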

“Once we had our questions, it was straightforward to use existing frameworks to fine-tune a language model,” he said.

The competition organizers hid the test questions to ensure participants couldn’t exploit previously known answers. This approach rewards models that generalize well to any question about e-commerce, so a strong result reflects a model’s ability to handle real-world scenarios.

Despite these constraints, Team NVIDIA outperformed all competitors by using Qwen2-72B, a just-released LLM with 72 billion parameters. The team fine-tuned it on eight NVIDIA A100 Tensor Core GPUs with QLoRA, a technique that trains small low-rank adapter weights on top of a quantized, frozen base model.

About the KDD Cup 2024

The KDD Cup, organized by the Association for Computing Machinery’s Special Interest Group on Knowledge Discovery and Data Mining, or ACM SIGKDD, is a prestigious annual competition that promotes research and development in the field.

This year’s challenge, hosted by Amazon, focused on mimicking the complexities of online shopping with the goal of making it a more intuitive and satisfying experience using large language models. Organizers evaluated participants’ models on ShopBench, a benchmark of 57 tasks and about 20,000 questions derived from real-world Amazon shopping data that replicates the complexity of online shopping.

The ShopBench benchmark focused on four key shopping skills, along with a fifth “all-in-one” challenge:

- Shopping Concept Understanding: Decoding complex shopping concepts and terminologies.

- Shopping Knowledge Reasoning: Making informed decisions with shopping knowledge.

- User Behavior Alignment: Understanding dynamic customer behavior.

- Multilingual Abilities: Shopping across languages.

- All-Around: Solving all tasks from the previous tracks in a unified solution.

NVIDIA’s Winning Solution

NVIDIA’s winning solution involved creating a single model for each track.

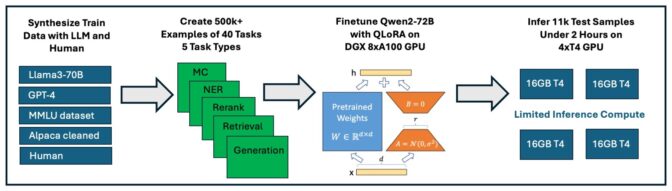

The team fine-tuned the just-released Qwen2-72B model using eight NVIDIA A100 Tensor Core GPUs for approximately 24 hours. The GPUs provided fast and efficient processing, significantly reducing the time required for fine-tuning.

First, the team generated training datasets based on the provided examples and synthesized additional data using Llama 3 70B hosted on build.nvidia.com.

Next, they fine-tuned the model on the data created in step one using QLoRA (Quantized Low-Rank Adaptation). QLoRA keeps the base model frozen in 4-bit quantized form and trains only a small set of added low-rank adapter weights, which makes fine-tuning far less memory-intensive.
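To give a feel for why low-rank adaptation is cheap, here is a minimal pure-Python sketch of the idea. Instead of updating a full weight matrix `W`, training touches only two small matrices `A` and `B` whose product is added to the frozen layer's output. The layer size (8192 x 8192) and rank (16) are illustrative assumptions, not the team's reported settings, and the 4-bit storage of the base weights that QLoRA adds is not modeled here.

```python
def lora_trainable_params(d_in, d_out, rank):
    """Parameters in the two adapter matrices A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, scale=1.0):
    """Compute W x + scale * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + scale * u for b, u in zip(base, update)]


if __name__ == "__main__":
    # Illustrative sizes only (assumed, not taken from the article).
    d_in, d_out, rank = 8192, 8192, 16
    full = d_in * d_out
    adapter = lora_trainable_params(d_in, d_out, rank)
    print(f"full matrix: {full:,} weights")
    print(f"adapter:     {adapter:,} weights ({adapter / full:.2%} of full)")

    # Tiny numeric check: with B initialised to zeros, the adapter is a no-op.
    W = [[1, 2], [3, 4]]
    A = [[1, 1]]          # rank 1
    B_zero = [[0], [0]]
    print(lora_forward(W, A, B_zero, [1, 1]))
```

With these assumed sizes the adapter holds 1/256 of the weights in the full matrix, which is why only a small fraction of the parameters needs gradients and optimizer state.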

The model was then quantized with AWQ 4-bit, making it smaller and able to run on a system with less storage and memory. The team then used the vLLM inference library to generate predictions for the test datasets on four NVIDIA T4 Tensor Core GPUs within the time constraints.
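A back-of-the-envelope calculation shows why 4-bit quantization matters on this hardware. The sketch below counts memory for the weights only and assumes 16 GB of memory per T4 GPU; it ignores quantization scales, the KV cache and activations, so real usage would be higher than the figures it prints.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate memory for model weights alone, in gigabytes (1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


NUM_PARAMS = 72e9        # Qwen2-72B, as stated in the article
T4_MEMORY_GB = 16        # assumed per-GPU memory of an NVIDIA T4
NUM_GPUS = 4             # inference setup described in the article

fp16_gb = weight_memory_gb(NUM_PARAMS, 16)
int4_gb = weight_memory_gb(NUM_PARAMS, 4)
total_gpu_gb = T4_MEMORY_GB * NUM_GPUS

if __name__ == "__main__":
    print(f"16-bit weights: {fp16_gb:.0f} GB")
    print(f"4-bit weights:  {int4_gb:.0f} GB")
    print(f"4 x T4 memory:  {total_gpu_gb} GB")
    print("16-bit fits:", fp16_gb <= total_gpu_gb)
    print("4-bit fits: ", int4_gb <= total_gpu_gb)
```

Under these assumptions the 16-bit weights alone would exceed the combined memory of the four GPUs, while the 4-bit weights leave headroom for the rest of the inference workload.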

This approach secured the top spot in each individual track and the overall first place in the competition, a clean sweep for NVIDIA for the second year in a row.

The team plans to submit a detailed paper on its solution next month and to present its findings at KDD 2024 in Barcelona.