The roots of many of NVIDIA’s landmark innovations — the foundational technology that powers AI, accelerated computing, real-time ray tracing and seamlessly connected data centers — can be found in the company’s research organization, a global team of around 400 experts in fields including computer architecture, generative AI, graphics and robotics.

Established in 2006 and led since 2009 by Bill Dally, former chair of Stanford University’s computer science department, NVIDIA Research is unique among corporate research organizations: it was set up with a mission to pursue complex technological challenges while having a profound impact on the company and the world.

“We make a deliberate effort to do great research while being relevant to the company,” said Dally, chief scientist and senior vice president of NVIDIA Research. “It’s easy to do one or the other. It’s hard to do both.”

Dally is among NVIDIA Research leaders sharing the group’s innovations at NVIDIA GTC, the premier developer conference at the heart of AI, taking place this week in San Jose, California.

While many research organizations may describe their mission as pursuing projects with a longer time horizon than those of a product team, NVIDIA researchers seek out projects with a larger “risk horizon” — and a huge potential payoff if they succeed.

“Our mission is to do the right thing for the company. It’s not about building a trophy case of best paper awards or a museum of famous researchers,” said David Luebke, vice president of graphics research and NVIDIA’s first researcher. “We are a small group of people who are privileged to be able to work on ideas that could fail. And so it is incumbent upon us to not waste that opportunity and to do our best on projects that, if they succeed, will make a big difference.”

Innovating as One Team

One of NVIDIA’s core values is “one team” — a deep commitment to collaboration that helps researchers work closely with product teams and industry stakeholders to transform their ideas into real-world impact.

“Everybody at NVIDIA is incentivized to figure out how to work together because the accelerated computing work that NVIDIA does requires full-stack optimization,” said Bryan Catanzaro, vice president of applied deep learning research at NVIDIA. “You can’t do that if each piece of technology exists in isolation and everybody’s staying in silos. You have to work together as one team to achieve acceleration.”

When evaluating potential projects, NVIDIA researchers consider whether the challenge is a better fit for a research or product team, whether the work merits publication at a top conference, and whether there’s a clear potential benefit to NVIDIA. If they decide to pursue the project, they do so while engaging with key stakeholders.

“We work with people to make something real, and often, in the process, we discover that the great ideas we had in the lab don’t actually work in the real world,” Catanzaro said. “It’s a tight collaboration where the research team needs to be humble enough to learn from the rest of the company what they need to do to make their ideas work.”

The team shares much of its work through papers, technical conferences and open-source platforms like GitHub and Hugging Face. But its focus remains on industry impact.

“We think of publishing as a really important side effect of what we do, but it’s not the point of what we do,” Luebke said.

NVIDIA Research’s first effort was focused on ray tracing, which after a decade of sustained work led directly to the launch of NVIDIA RTX and redefined real-time computer graphics. The organization now includes teams specializing in chip design, networking, programming systems, large language models, physics-based simulation, climate science, humanoid robotics and self-driving cars — and continues expanding to tackle additional areas of study and tap expertise across the globe.

Transforming NVIDIA — and the Industry

NVIDIA Research didn’t just lay the groundwork for some of the company’s most well-known products — its innovations have propelled and enabled today’s era of AI and accelerated computing.

It began with CUDA, a parallel computing software platform and programming model that enables researchers to tap GPU acceleration for myriad applications. Launched in 2006, CUDA made it easy for developers to harness the parallel processing power of GPUs to speed up scientific simulations, gaming applications and the creation of AI models.

“Developing CUDA was the single most transformative thing for NVIDIA,” Luebke said. “It happened before we had a formal research group, but it happened because we hired top researchers and had them work with top architects.”

Making Ray Tracing a Reality

Once NVIDIA Research was founded, its members began working on GPU-accelerated ray tracing, spending years developing the algorithms and the hardware to make it possible. In 2009, the project — led by the late Steven Parker, a real-time ray tracing pioneer who was vice president of professional graphics at NVIDIA — reached the product stage with the NVIDIA OptiX application framework, detailed in a 2010 SIGGRAPH paper.

The researchers’ work expanded and, in collaboration with NVIDIA’s architecture group, eventually led to the development of NVIDIA RTX ray-tracing technology, including RT Cores that enabled real-time ray tracing for gamers and professional creators.

Unveiled in 2018, NVIDIA RTX also marked the launch of another NVIDIA Research innovation: NVIDIA DLSS, or Deep Learning Super Sampling. With DLSS, the graphics pipeline no longer needs to draw every pixel of each frame. Instead, it draws a fraction of the pixels and gives an AI pipeline the information needed to reconstruct a crisp, high-resolution image.
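As a rough illustration of what drawing "a fraction of the pixels" means, the sketch below computes the share of output pixels that are rendered directly when a frame is drawn at a lower internal resolution and then upscaled. The resolutions are hypothetical examples, not DLSS settings, and the helper name is invented for illustration. The AI reconstruction step itself is not modeled.

```python
def shaded_fraction(render_w: int, render_h: int, out_w: int, out_h: int) -> float:
    """Share of output pixels rendered directly before upscaling (hypothetical helper)."""
    return (render_w * render_h) / (out_w * out_h)


# Hypothetical example: render internally at 1920x1080, display at 3840x2160.
fraction = shaded_fraction(1920, 1080, 3840, 2160)
print(f"{fraction:.0%} of output pixels rendered directly")  # prints: 25% of output pixels rendered directly
```

Halving the resolution along each axis quarters the pixel count, so in this scenario the remaining three quarters of the output image have to be filled in by the reconstruction step.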

Accelerating AI for Virtually Any Application

NVIDIA’s research contributions in AI software kicked off with the NVIDIA cuDNN library for GPU-accelerated neural networks, which was developed as a research project when the deep learning field was still in its initial stages — then released as a product in 2014.

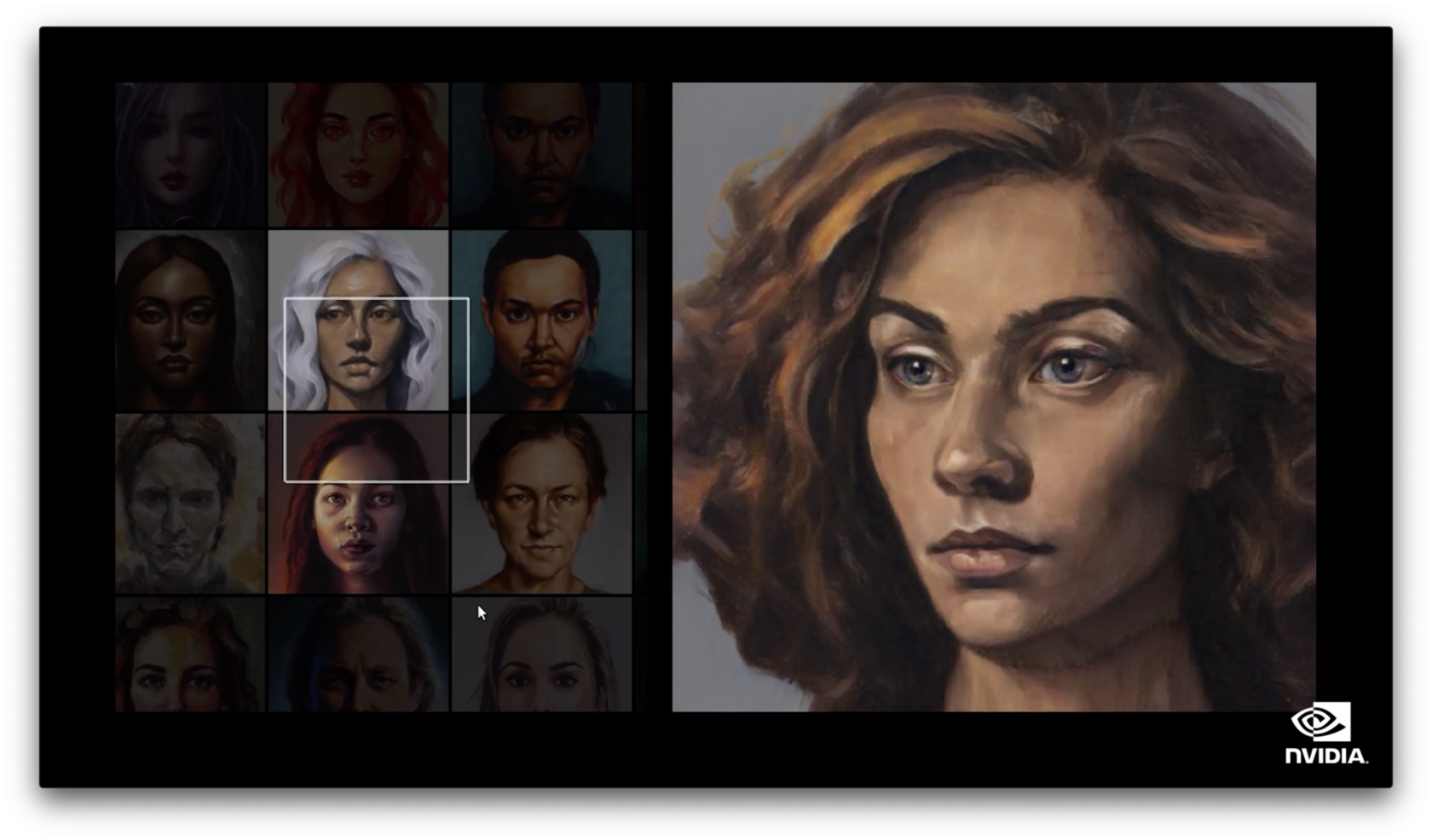

As deep learning soared in popularity and evolved into generative AI, NVIDIA Research was at the forefront — exemplified by NVIDIA StyleGAN, a groundbreaking visual generative AI model that demonstrated how neural networks could rapidly generate photorealistic imagery.

While generative adversarial networks, or GANs, were first introduced in 2014, “StyleGAN was the first model to generate visuals that could completely pass muster as a photograph,” Luebke said. “It was a watershed moment.”

NVIDIA researchers introduced a slew of popular GAN models such as the AI painting tool GauGAN, which later developed into the NVIDIA Canvas application. And with the rise of diffusion models, neural radiance fields and Gaussian splatting, they’re still advancing visual generative AI — including in 3D with recent models like 3DGUT.

In the field of large language models, Megatron-LM was an applied research initiative that enabled the efficient training and inference of massive LLMs for language-based tasks such as content generation, translation and conversational AI. It’s integrated into the NVIDIA NeMo platform for developing custom generative AI, which also features speech recognition and speech synthesis models that originated in NVIDIA Research.

Achieving Breakthroughs in Chip Design, Networking, Quantum and More

AI and graphics are only some of the fields NVIDIA Research tackles — several teams are achieving breakthroughs in chip architecture, electronic design automation, programming systems, quantum computing and more.

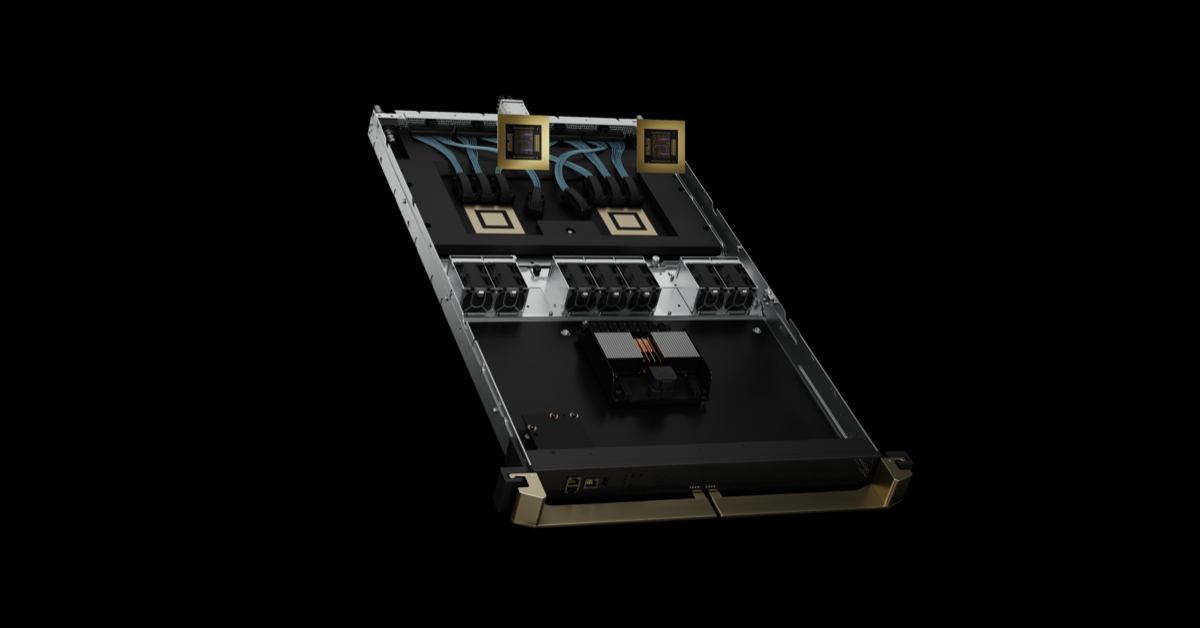

In 2012, Dally submitted a research proposal to the U.S. Department of Energy for a project that would become NVIDIA NVLink and NVSwitch, the high-speed interconnect technologies that enable rapid communication among GPUs and CPUs in accelerated computing systems.

In 2013, the circuit research team published work on chip-to-chip links that introduced a signaling system co-designed with the interconnect to enable a high-speed, low-area and low-power link between dies. The project eventually became the link between the NVIDIA Grace CPU and NVIDIA Hopper GPU.

In 2021, the ASIC and VLSI Research group developed a software-hardware codesign technique for AI accelerators called VS-Quant that enabled many machine learning models to run with 4-bit weights and 4-bit activations at high accuracy. Their work influenced the development of FP4 precision support in the NVIDIA Blackwell architecture.
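VS-Quant's name refers to per-vector scaled quantization, and the core idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not NVIDIA's VS-Quant implementation. Each contiguous chunk of `vector_size` values (an assumed parameter, set to 4 in the toy example) gets its own floating-point scale, and values are rounded to signed 4-bit integers in a symmetric -7 to 7 range. The published technique includes details that this sketch omits, such as how the scale factors themselves are represented in hardware. All function names and data here are hypothetical.

```python
from typing import List, Tuple


def quantize_per_vector(values: List[float], vector_size: int = 16,
                        bits: int = 4) -> Tuple[List[int], List[float]]:
    """Quantize to signed `bits`-bit integers with one scale per vector (hypothetical helper)."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit signed, symmetric range -7..7
    codes: List[int] = []
    scales: List[float] = []
    for start in range(0, len(values), vector_size):
        chunk = values[start:start + vector_size]
        max_abs = max(abs(v) for v in chunk)
        # Each vector's scale maps its own largest magnitude onto qmax.
        scale = max_abs / qmax if max_abs > 0 else 1.0
        scales.append(scale)
        for v in chunk:
            q = round(v / scale)
            codes.append(max(-qmax, min(qmax, q)))  # clamp to the 4-bit range
    return codes, scales


def dequantize_per_vector(codes: List[int], scales: List[float],
                          vector_size: int = 16) -> List[float]:
    """Map integer codes back to approximate real values using each vector's scale."""
    return [q * scales[i // vector_size] for i, q in enumerate(codes)]


def mean_abs_error(a: List[float], b: List[float]) -> float:
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Hypothetical data: the second vector contains one large outlier (5.0).
values = [0.1, -0.2, 0.15, 0.05, 5.0, -0.1, 0.2, 0.3]

codes_v, scales_v = quantize_per_vector(values, vector_size=4)
# Baseline: a single scale for the whole tensor.
codes_t, scales_t = quantize_per_vector(values, vector_size=len(values))

err_v = mean_abs_error(values, dequantize_per_vector(codes_v, scales_v, 4))
err_t = mean_abs_error(values, dequantize_per_vector(codes_t, scales_t, len(values)))
print(f"per-vector error {err_v:.4f} vs per-tensor error {err_t:.4f}")
```

With one tensor-wide scale, the outlier stretches the quantization step so far that every value in the first vector rounds to zero. Per-vector scales confine that loss to the vector that actually contains the outlier, which is why the per-vector error comes out lower in this toy example.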

And unveiled this year at the CES trade show was NVIDIA Cosmos, a platform created by NVIDIA Research to accelerate the development of physical AI for next-generation robots and autonomous vehicles. Read the research paper and check out the AI Podcast episode on Cosmos for details.

Learn more about NVIDIA Research at GTC, and watch the keynote by NVIDIA founder and CEO Jensen Huang.
