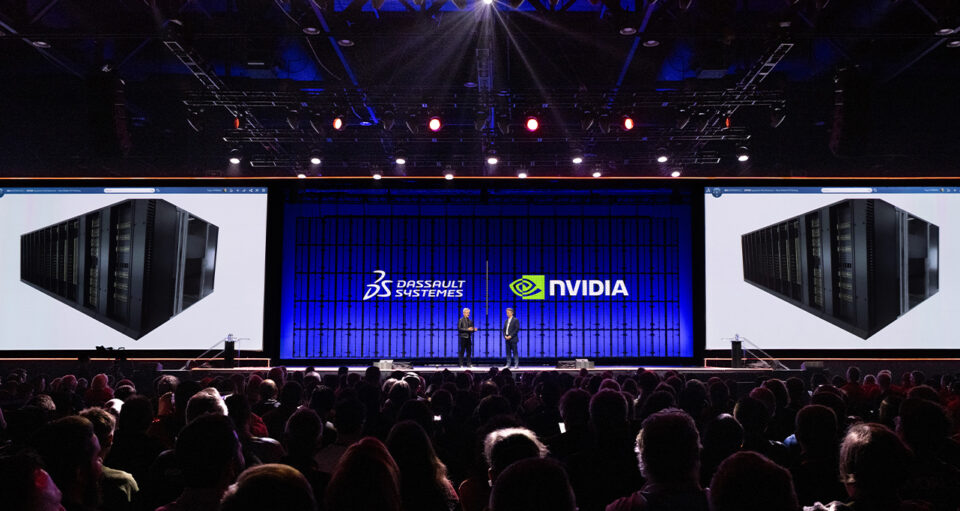

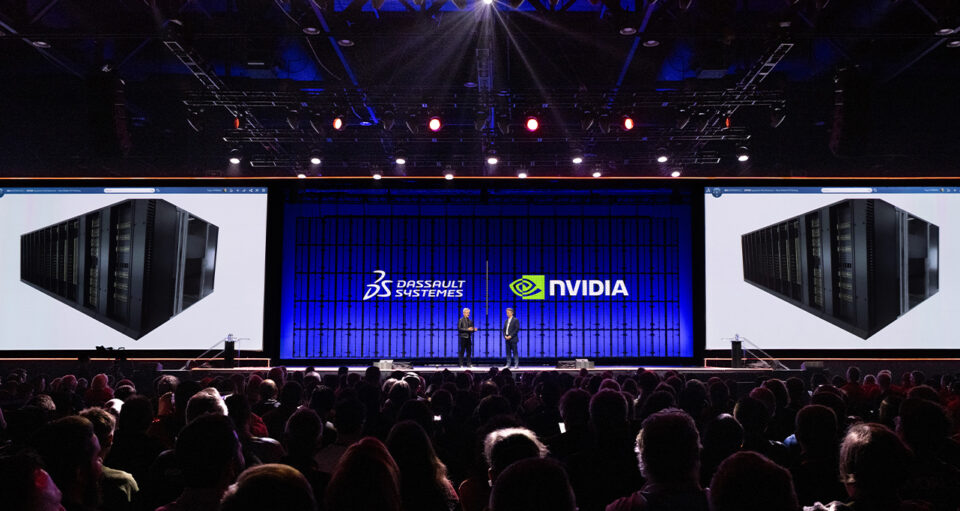

Everything Will Be Represented in a Virtual Twin, NVIDIA CEO Jensen Huang Says at 3DEXPERIENCE World

NVIDIA founder and CEO Jensen Huang and Dassault Systèmes CEO Pascal Daloz announced a partnership to build a… Read Article

Open source has become essential for driving innovation in robotics and autonomy. By providing access to critical infrastructure — from simulation frameworks to AI models — NVIDIA is enabling collaborative… Read Article

The works of Plato state that when humans have an experience, some level of change occurs in their brain, which is powered by memory — specifically long-term memory. This change… Read Article

The NVIDIA RTX PRO 5000 72GB Blackwell GPU is now generally available, bringing robust agentic and generative AI capabilities powered by the NVIDIA Blackwell architecture to more desktops and professionals… Read Article

Physical AI is moving from research labs into the real world, powering intelligent robots and autonomous vehicles (AVs) — such as robotaxis — that must reliably sense, reason and act… Read Article

Cities worldwide face unprecedented challenges as urban populations surge and infrastructure strains to keep pace… Read Article

Physical AI models — which power robots, autonomous vehicles and other intelligent machines — must be safe, generalized for dynamic scenarios and capable of perceiving, reasoning and operating in real… Read Article

The future of AI took flight at Starbase, Texas — where NVIDIA CEO Jensen Huang hand-delivered the first DGX Spark to Elon Musk, chief engineer at SpaceX. Amid towering engines… Read Article

Building robots that can effectively operate alongside human workers in factories, hospitals and public spaces presents an enormous technical challenge. These robots require humanlike dexterity, perception, cognition and whole-body coordination… Read Article