NVIDIA Research Shapes Physical AI

Physical AI — the engine behind modern robotics, self-driving cars and smart spaces — relies on a mix of neural graphics, synthetic data generation, physics-based simulation, reinforcement learning and AI reasoning. It’s a combination well-suited to the collective expertise of NVIDIA Research, a global team that for nearly 20 years has advanced the now-converging fields of AI and graphics.

That’s why at SIGGRAPH, the premier computer graphics conference taking place in Vancouver through Thursday, Aug. 14, NVIDIA Research leaders will deliver a special address highlighting the graphics and simulation innovations enabling physical and spatial AI.

“AI is advancing our simulation capabilities, and our simulation capabilities are advancing AI systems,” said Sanja Fidler, vice president of AI research at NVIDIA. “There’s an authentic and powerful coupling between the two fields, and it’s a combination that few have.”

At SIGGRAPH, NVIDIA is unveiling new software libraries for physical AI, including NVIDIA Omniverse NuRec 3D Gaussian splatting libraries for large-scale world reconstruction, updates to the NVIDIA Metropolis platform for vision AI, and NVIDIA Cosmos and NVIDIA Nemotron reasoning models. Cosmos Reason is a new reasoning vision language model for physical AI that enables robots and vision AI agents to reason like humans using prior knowledge, physics understanding and common sense.

Many of these innovations are rooted in breakthroughs by the company’s global research team, which is presenting over a dozen papers at the show on advancements in neural rendering, real-time path tracing, synthetic data generation and reinforcement learning — capabilities that will feed the next generation of physical AI tools.

How Physical AI Unites Graphics, AI and Robotics

Physical AI development starts with the construction of high-fidelity, physically accurate 3D environments. Without these lifelike virtual environments, developers can’t train advanced physical AI systems such as humanoid robots in simulation, because the skills the robots would learn in virtual training wouldn’t translate well enough to the real world.

Picture an agricultural robot using the exact amount of pressure to pick peaches off trees without bruising them, or a manufacturing robot assembling microscopic electronic components on a machine where every millimeter matters.

“Physical AI needs a virtual environment that feels real, a parallel universe where the robots can safely learn through trial and error,” said Ming-Yu Liu, vice president of research at NVIDIA. “To build this virtual world, we need real-time rendering, computer vision, physical motion simulation, 2D and 3D generative AI, as well as AI reasoning. These are the things that NVIDIA Research has spent nearly two decades to be good at.”

NVIDIA’s legacy of breakthrough research in ray tracing and real-time computer graphics, dating back to the research organization’s inception in 2006, plays a critical role in enabling the realism that physical AI simulations demand. Much of that rendering work, too, is powered by AI models — a field known as neural rendering.

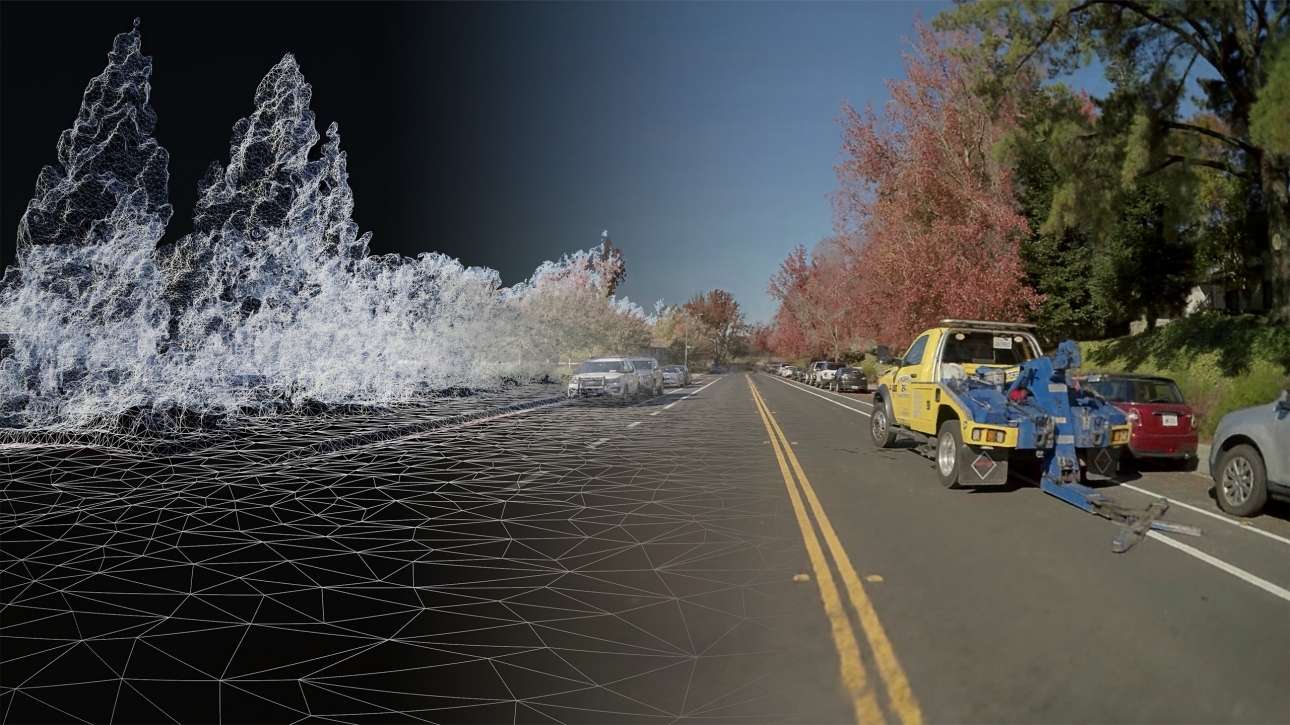

“Our core rendering research fuels the creation of true-to-reality virtual worlds used to train advanced physical AI systems, while AI is in turn helping us create those 3D worlds from images,” said Aaron Lefohn, vice president of graphics research and head of the Real-Time Graphics Research group at NVIDIA. “We’re now at a point where we can take pictures and videos, an accessible form of media that anyone can capture, and rapidly reconstruct them into virtual 3D environments.”

This foundational work in forward rendering (transforming 3D into 2D) and inverse rendering (turning 2D into 3D) is complemented by years of research and technology innovation in simulating physical motion, including the work of Fidler’s Spatial Intelligence Lab. The lab today unveiled ViPE (Video Pose Engine), a 3D geometric annotation pipeline for videos developed in collaboration with the Dynamic Vision Lab and NVIDIA Isaac team that estimates camera motion and generates detailed depth maps based on footage from amateur recordings, dashcams or cinematic shots.
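To make the forward/inverse distinction concrete, here is a toy pinhole-camera sketch in Python. It rests on simplifying assumptions (a single ideal pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, no lens distortion, points already in camera coordinates) and is not how ViPE works internally. Forward projection divides by depth and so discards it; the inverse step can lift a pixel back into 3D only when a per-pixel depth value is supplied, which is why estimated depth maps and camera motion matter for reconstruction.

```python
from typing import Tuple

Point3 = Tuple[float, float, float]
Pixel = Tuple[float, float]

def project(p: Point3, fx: float, fy: float, cx: float, cy: float) -> Tuple[Pixel, float]:
    """Forward step (3D -> 2D): camera-space point to pixel coordinates, plus its depth."""
    x, y, z = p
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    # Perspective divide: the pixel alone no longer records how far away the point was.
    return (fx * x / z + cx, fy * y / z + cy), z

def unproject(px: Pixel, depth: float, fx: float, fy: float, cx: float, cy: float) -> Point3:
    """Inverse step (2D -> 3D): pixel plus depth (e.g. from an estimated depth map) to a 3D point."""
    u, v = px
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)

if __name__ == "__main__":
    # Hypothetical intrinsics for a 640x480 image.
    K = dict(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
    pixel, depth = project((0.5, -0.25, 2.0), **K)
    print(pixel, depth)                  # (445.0, 177.5) 2.0
    print(unproject(pixel, depth, **K))  # (0.5, -0.25, 2.0)
```

Without the `depth` argument, every point along the same camera ray maps to the same pixel, so a video-based pipeline has to estimate depth and camera pose before it can rebuild a scene in 3D.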

In generative AI, Liu’s Deep Imagination Research group is among the NVIDIA Research teams pioneering computer vision, transformer and visual generative AI models that enable physical AI systems to understand and predict future states of the world, such as the possible outcomes when a car runs a red light or a glass sits too close to the edge of a table.

These initiatives laid the groundwork for NVIDIA Cosmos, a platform introduced earlier this year to accelerate physical AI development with world foundation models, post-training libraries, and an accelerated data processing and curation pipeline.

NVIDIA Research at SIGGRAPH

At SIGGRAPH, NVIDIA researchers are presenting advancements in simulation, AI-powered rendering and 3D content generation, with potential applications in virtual world creation, robotics development and autonomous vehicle training.

One paper addresses the challenge of reconstructing physics-aware 3D geometry from 2D images or video. While many models can estimate a 3D object based on video footage, the generated 3D shape often lacks structural stability. Even if it’s a close visual match to a real object, a generated shape may have slightly uneven proportions or missing details that impact its physical realism.

For example, a 3D model of a chair reconstructed from 2D footage might collapse when dropped into a physically accurate simulation, because the AI model is making visual estimations of 3D structure rather than ground-truth measurements. The method introduced in this paper helps ensure that the generated 3D shapes replicate real-world physics to avoid this pitfall, supporting the creation of virtual worlds for physical AI training.
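One simple way to see how a visually plausible reconstruction can still fail in simulation is a static stability test: a rigid object resting on the ground stays upright only if its center of mass, projected onto the ground, falls inside the convex hull of its contact points (the support polygon). The Python sketch below applies that textbook criterion to a hypothetical chair described by part masses and leg contact points. It is not the method from the paper, and every name and number in it is an illustrative assumption.

```python
from typing import List, Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]

def cross(o: Vec2, a: Vec2, b: Vec2) -> float:
    """z-component of (a - o) x (b - o); positive when o -> a -> b turns counter-clockwise."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def convex_hull(points: List[Vec2]) -> List[Vec2]:
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[Vec2] = []
    upper: List[Vec2] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def inside_convex(hull: List[Vec2], q: Vec2) -> bool:
    """True if q lies inside (or on) a counter-clockwise convex polygon."""
    if len(hull) < 3:
        return False  # fewer than 3 contacts: no support area, so it tips over
    n = len(hull)
    return all(cross(hull[i], hull[(i + 1) % n], q) >= 0 for i in range(n))

def center_of_mass_xy(parts: List[Tuple[float, Vec3]]) -> Vec2:
    """Ground-plane (x, y) of the center of mass for (mass, position) parts."""
    total = sum(m for m, _ in parts)
    return (sum(m * p[0] for m, p in parts) / total,
            sum(m * p[1] for m, p in parts) / total)

def is_statically_stable(parts: List[Tuple[float, Vec3]], contacts: List[Vec2]) -> bool:
    return inside_convex(convex_hull(contacts), center_of_mass_xy(parts))

if __name__ == "__main__":
    # Hypothetical chair: seat plus a backrest that sits toward -y.
    parts = [(4.0, (0.0, 0.0, 0.45)), (2.0, (0.0, -0.2, 0.8))]
    true_legs = [(-0.2, -0.2), (0.2, -0.2), (0.2, 0.2), (-0.2, 0.2)]
    back_legs_shifted = [(-0.2, -0.05), (0.2, -0.05), (0.2, 0.2), (-0.2, 0.2)]
    back_legs_too_short = [(0.2, 0.2), (-0.2, 0.2)]  # back legs never touch the floor
    print(is_statically_stable(parts, true_legs))            # True
    print(is_statically_stable(parts, back_legs_shifted))    # False
    print(is_statically_stable(parts, back_legs_too_short))  # False
```

The chair looks nearly identical in all three cases, yet back legs misplaced by 15 cm, or reconstructed slightly too short to reach the floor, are enough to make it tip over once physics is applied.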

Another paper introduces a technique for bringing simulated characters to life with physically accurate motion. The researchers combined a motion generator with a physics-based tracking controller to generate realistic synthetic data for complex movements, such as the stunts of parkour practitioners.

This data can help develop virtual characters or train real-world humanoid robots with agile motor skills that are rarely found in real-world training data, expanding the possible physical feats robots could accomplish to tasks like traversing difficult terrain to support emergency response.
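The division of labor between a motion generator and a physics-based tracking controller can be sketched with a deliberately tiny stand-in. Here a hypothetical kinematic generator proposes a target joint angle and velocity over time, and a proportional-derivative (PD) controller drives a single simulated joint toward that target under a torque limit, gravity and damping. Whatever the simulated joint actually does is physically consistent by construction, and that simulated trajectory is what would be logged as synthetic data. This single-joint Python example is an illustrative assumption, not the controller, character model or gains used in the paper.

```python
import math
from typing import Callable, List, Tuple

def reference_motion(t: float) -> Tuple[float, float]:
    """Hypothetical 'motion generator': target angle (rad) and angular velocity (rad/s) at time t."""
    return 0.8 * math.sin(2.0 * t), 1.6 * math.cos(2.0 * t)

def track(
    ref: Callable[[float], Tuple[float, float]],
    kp: float = 400.0,            # proportional gain, illustrative value
    kd: float = 40.0,             # derivative gain, illustrative value
    inertia: float = 1.0,         # joint inertia
    damping: float = 0.5,         # viscous joint damping
    gravity_torque: float = 2.0,  # peak gravity torque (pendulum-style m*g*l)
    max_torque: float = 50.0,     # actuator limit
    dt: float = 0.002,
    steps: int = 2000,
) -> List[Tuple[float, float, float]]:
    """Simulate one joint tracking `ref` with a PD controller; returns (t, q_target, q) samples."""
    q, qd = 0.0, 0.0
    log: List[Tuple[float, float, float]] = []
    for i in range(steps):
        t = i * dt
        q_target, qd_target = ref(t)
        # PD tracking torque, clipped to what the actuator can physically deliver.
        tau = kp * (q_target - q) + kd * (qd_target - qd)
        tau = max(-max_torque, min(max_torque, tau))
        # Joint dynamics: inertia * qdd = tau - damping * qd - gravity_torque * sin(q)
        qdd = (tau - damping * qd - gravity_torque * math.sin(q)) / inertia
        qd += qdd * dt  # semi-implicit Euler: update velocity first,
        q += qd * dt    # then position using the new velocity
        log.append((t, q_target, q))
    return log

if __name__ == "__main__":
    samples = track(reference_motion)
    settled = samples[len(samples) // 2:]  # ignore the start-up transient
    print("max tracking error after settling:", max(abs(q - q_ref) for _, q_ref, q in settled))
```

Because the generator's targets only steer the simulation and never overwrite its state, motions that would demand impossible torques show up as tracking error rather than as physically implausible data.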

Additional papers tackle the complexity of simulating light and materials.

One project showcases how artists can create AI assistants to enhance the detail of materials. It taps diffusion models and a differentiable physically based renderer to give creators an easy way to modify material texture maps on top of a 3D object representation, enabling them to create richer, more realistic virtual worlds using simple text prompts.

The team demonstrated how the model can be used to quickly add realistic object details, such as signs of weathering or aging, which are time-consuming to create using traditional rendering methods. These objects can populate virtual environments used for creative applications like gaming or for physical applications like training robots and autonomous vehicles in simulation.

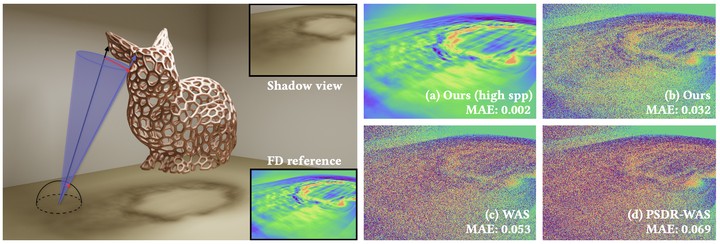

In the field of light simulation, another SIGGRAPH paper addresses a challenge in differentiable rendering, introducing a robust differentiable visibility query that enables faster, more accurate reconstruction of 3D geometry from images and videos.

This paper exemplifies NVIDIA Research work that ties together forward rendering and inverse rendering, quickly extracting parameters from virtual worlds that are essential for accurately training physical AI models on synthetic datasets.
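The inverse half of this loop can be illustrated with a toy differentiable renderer. The Python sketch below shades each texel with a simple Lambertian model, `I = albedo * max(0, n·l)`, then recovers unknown albedo values from a target image by following the analytic gradient of a squared pixel error back through the renderer. It is an illustration under stated assumptions (one known light direction, known surface normals, no shadows or occlusion), not the paper's method, and it deliberately leaves out visibility. The last texel, which faces away from the light, hints at why visibility matters: a parameter that affects no pixel receives a zero gradient and cannot be recovered.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def shading(normals: List[Vec3], light: Vec3) -> List[float]:
    """Cosine term max(0, n.l) per texel; `light` is a unit direction toward the light."""
    return [max(0.0, dot(n, light)) for n in normals]

def render(albedo: List[float], normals: List[Vec3], light: Vec3) -> List[float]:
    """Forward rendering: Lambertian intensity per texel."""
    return [a * s for a, s in zip(albedo, shading(normals, light))]

def fit_albedo(target: List[float], normals: List[Vec3], light: Vec3,
               steps: int = 200, lr: float = 0.5) -> List[float]:
    """Inverse rendering: gradient descent on L = sum_i (render_i - target_i)^2."""
    s = shading(normals, light)
    albedo = [0.5] * len(target)  # uninformed initial guess
    for _ in range(steps):
        pred = render(albedo, normals, light)
        # dL/d(albedo_i) = 2 * (pred_i - target_i) * s_i, which is zero wherever s_i == 0.
        grad = [2.0 * (p - t) * si for p, t, si in zip(pred, target, s)]
        # Gradient step, then clamp albedo to its physical range [0, 1].
        albedo = [min(1.0, max(0.0, a - lr * g)) for a, g in zip(albedo, grad)]
    return albedo

if __name__ == "__main__":
    light = (0.0, 0.0, 1.0)
    normals = [(0.0, 0.0, 1.0), (0.6, 0.0, 0.8), (0.0, 0.6, 0.8), (0.0, 0.0, -1.0)]
    true_albedo = [0.9, 0.3, 0.6, 0.7]
    observed = render(true_albedo, normals, light)
    print([round(a, 3) for a in fit_albedo(observed, normals, light)])  # [0.9, 0.3, 0.6, 0.5]
```

Real scenes add occlusion boundaries, where the rendered image changes discontinuously as geometry moves and naive gradients break down; robust differentiable visibility is aimed at that harder case.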

Watch the special address by Fidler, Lefohn and Liu at SIGGRAPH and learn more about how graphics and simulation innovations come together to drive industrial digitalization by joining NVIDIA at the conference, running through Thursday, Aug. 14.