At the European Conference on Computer Vision (ECCV) running this week in Milan, the NVIDIA Research team is demonstrating groundbreaking innovations with 14 accepted publications.

The topics presented range from embodied AI and foundation models to retrieval-augmented generation and neural radiance fields. Most of the work presented focuses on automotive research, including:

- RealGen: Retrieval-Augmented Generation for Controllable Traffic Scenarios is inspired by the success of retrieval-augmented generation in large language models. RealGen is a novel retrieval-based, in-context learning framework for traffic scenario generation. It synthesizes new scenarios by combining behaviors from multiple retrieved examples.

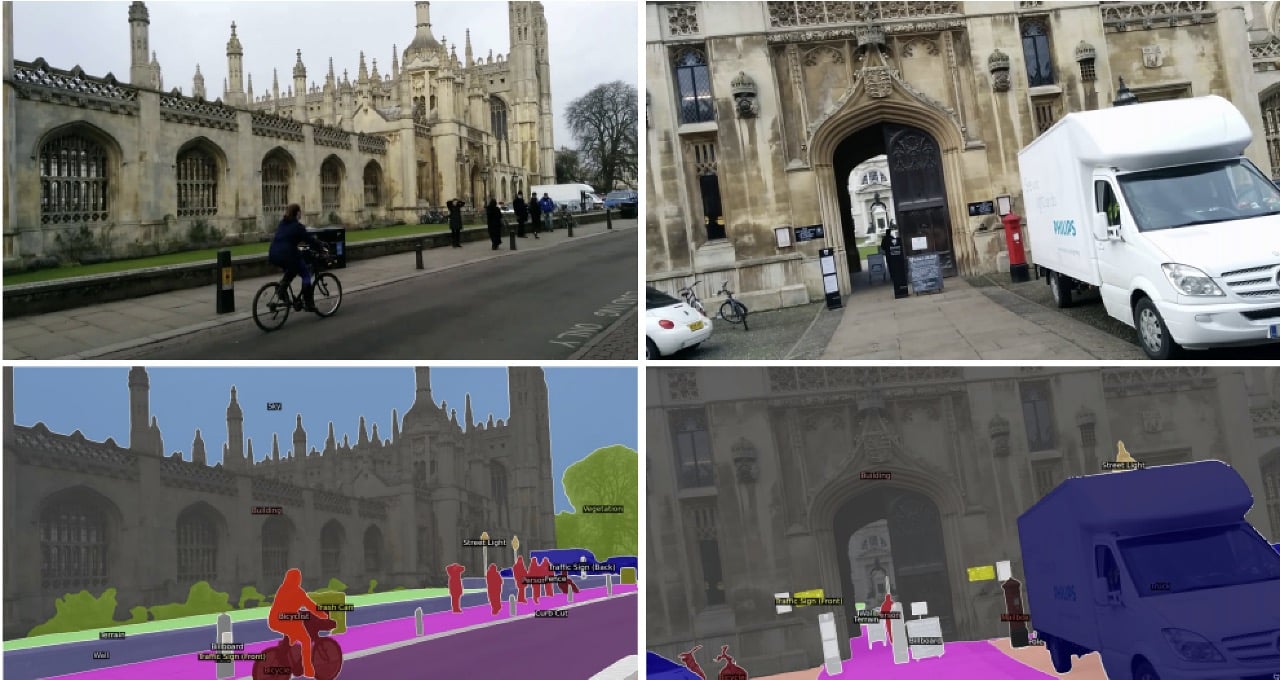

- The NeRFect Match: Exploring NeRF Features for Visual Localization examines visual localization — the task of determining the camera pose of a query image with respect to a 3D environment. The ability to localize an agent in 3D is fundamental to applications such as autonomous driving.

- Dolphins: Multimodal Language Model for Driving introduces Dolphins, a novel vision-language model designed to acquire human-like driving abilities. Dolphins processes multimodal inputs comprising video or image data, text instructions and historical control signals, and generates informed outputs corresponding to the provided instructions.

In addition, Tsung-Yi Lin, principal research scientist at NVIDIA, has been awarded the Koenderink Prize, a Test of Time award that honors fundamental contributions in computer vision. The prize is awarded biennially for an impactful paper published at ECCV 10 years earlier. This year’s prize recognizes the 2014 publication Microsoft COCO: Common Objects in Context, coauthored by Lin.

NVIDIA researchers are co-organizing and speaking at a number of ECCV workshops. Of note is the Workshop on Cooperative Intelligence for Embodied AI, which focuses on cooperative intelligence within multi-agent autonomous systems. The Workshop on Vision-Centric Autonomous Driving covers visual perception and vision-language models for autonomous driving, as well as neural rendering of driving scenes.

Laura Leal-Taixé, senior research manager at NVIDIA, is a general chair of the conference. NVIDIA’s Jan Kautz, vice president of learning and perception research; Jose Alvarez, director of AV applied research; Sanja Fidler, vice president of AI research; and Marco Pavone, director of AV research, are on organizing committees.

See the full list of NVIDIA’s ECCV-accepted publications:

- RealGen: Retrieval-Augmented Generation for Controllable Traffic Scenarios

- The NeRFect Match: Exploring NeRF Features for Visual Localization

- Dolphins: Multimodal Language Model for Driving

- Photorealistic Object Insertion With Diffusion-Guided Inverse Rendering

- SEGIC: Unleashing the Emergent Correspondence for In-Context Segmentation

- Better Call SAL: Towards Learning to Segment Anything in Lidar

- Accelerating Online Mapping and Behavior Prediction via Direct BEV Feature Attention

- SPAMming Labels: Efficient Annotations for the Trackers of Tomorrow

- DiffiT: Diffusion Vision Transformers for Image Generation

- LITA: Language Instructed Temporal-Localization Assistant

- Avatar Fingerprinting for Authorized Use of Synthetic Talking-Head Videos

- COIN: Control-Inpainting Diffusion Prior for Human and Camera Motion Estimation

- A Semantic Space Is Worth 256 Language Descriptions: Make Stronger Segmentation Models With Descriptive Properties

- LCM-Lookahead for Encoder-Based Text-to-Image Personalization

Learn more about NVIDIA Research and watch the NVIDIA DRIVE Labs video series.

Image credit: The NeRFect Match: Exploring NeRF Features for Visual Localization.