In Las Vegas’s T-Mobile Arena, fans of the Golden Knights are getting more than just hockey — they’re… Read Article

Robotics

Most Popular

Robots’ Holiday Wishes Come True: NVIDIA Jetson Platform Offers High-Performance Edge AI at Festive Prices

Developers, researchers, hobbyists and students can take a byte out of holiday shopping this season as NVIDIA has unwrapped special discounts on the NVIDIA Jetson family of developer kits for… Read Article

NVIDIA and AWS Expand Full-Stack Partnership, Providing the Secure, High-Performance Compute Platform Vital for Future Innovation

At AWS re:Invent, NVIDIA and Amazon Web Services expanded their strategic collaboration with new technology integrations across interconnect technology, cloud infrastructure, open models and physical AI. As part of this… Read Article

At NeurIPS, NVIDIA Advances Open Model Development for Digital and Physical AI

Researchers worldwide rely on open-source technologies as the foundation of their work. To equip the community with the latest advancements in digital and physical AI, NVIDIA is further expanding its… Read Article

Powering AI Superfactories, NVIDIA and Microsoft Integrate Latest Technologies for Inference, Cybersecurity, Physical AI

Timed with the Microsoft Ignite conference running this week, NVIDIA is expanding its collaboration with Microsoft, including through the adoption of next-generation NVIDIA Spectrum-X Ethernet switches for the new Microsoft… Read Article

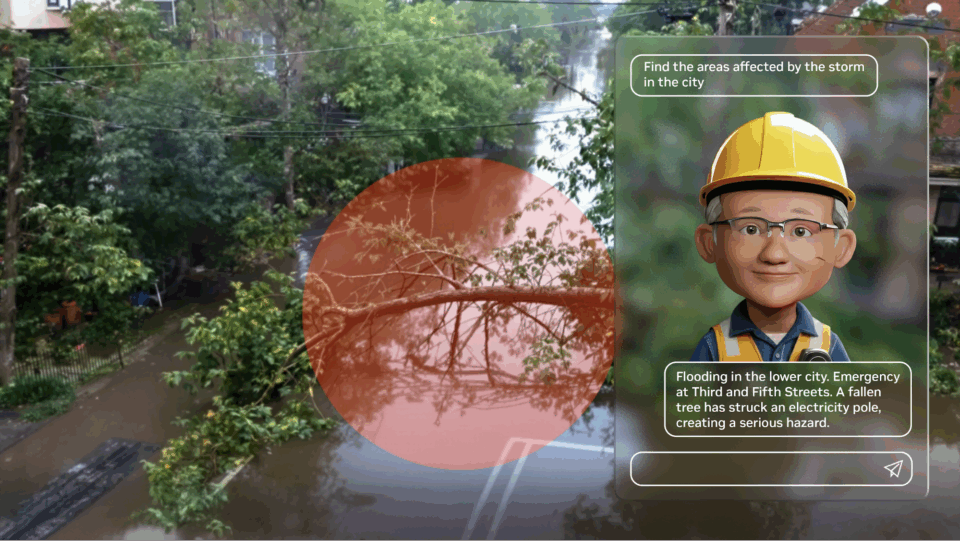

AI On: 3 Ways to Bring Agentic AI to Computer Vision Applications

Editor’s note: This post is part of the AI On blog series, which explores the latest techniques and real-world applications of agentic AI, chatbots and copilots. The series also highlights… Read Article

Into the Omniverse: Open World Foundation Models Generate Synthetic Worlds for Physical AI Development

Physical AI models — which power robots, autonomous vehicles and other intelligent machines — must be safe, generalized for dynamic scenarios and capable of perceiving, reasoning and operating in real… Read Article

NVIDIA GTC Washington, DC: Live Updates on What’s Next in AI

Monday, Oct. 27, 12:30 p.m. ET: How Medium-Sized Cities Are Tackling AI Readiness. A panel discussion today at GTC Washington, D.C., highlighted a public-private initiative to invigorate the economy… Read Article

Fueling Economic Development Across the US: How NVIDIA Is Empowering States, Municipalities and Universities to Drive Innovation

To democratize access to AI technology nationwide, AI education and deployment can’t be limited to a few urban tech hubs — it must reach every community, university and state. That’s… Read Article