In a turducken of a demo, NVIDIA researchers stuffed four AI models into a serving of digital avatar technology for SIGGRAPH 2021’s Real-Time Live showcase — winning the Best in Show award.

The showcase, one of the most anticipated events at the world’s largest computer graphics conference, held virtually this year, celebrates cutting-edge real-time projects spanning game technology, augmented reality and scientific visualization. It featured a lineup of jury-reviewed interactive projects, with presenters hailing from Unity Technologies, Rensselaer Polytechnic Institute, the NYU Future Reality Lab and more.

Broadcasting live from our Silicon Valley headquarters, the NVIDIA Research team presented a collection of AI models that can create lifelike virtual characters for projects such as bandwidth-efficient video conferencing and storytelling.

The demo featured tools to generate digital avatars from a single photo, animate avatars with natural 3D facial motion and convert text to speech.

“Making digital avatars is a notoriously difficult, tedious and expensive process,” said Bryan Catanzaro, vice president of applied deep learning research at NVIDIA, in the presentation. But with AI tools, “there is an easy way to create digital avatars for real people as well as cartoon characters. It can be used for video conferencing, storytelling, virtual assistants and many other applications.”

AI Aces the Interview

In the demo, two NVIDIA research scientists played the part of an interviewer and a prospective hire speaking over video conference. Over the course of the call, the interviewee showed off the capabilities of AI-driven digital avatar technology to communicate with the interviewer.

The researcher playing the part of interviewee relied on an NVIDIA RTX laptop throughout, while the other used a desktop workstation powered by RTX A6000 GPUs. The entire pipeline can also be run on GPUs in the cloud.

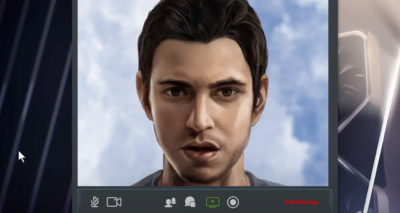

While sitting in a campus coffee shop, wearing a baseball cap and a face mask, the interviewee used the Vid2Vid Cameo model to appear clean-shaven in a collared shirt on the video call (seen in the image above). The AI model creates realistic digital avatars from a single photo of the subject — no 3D scan or specialized training images required.

“The digital avatar creation is instantaneous, so I can quickly create a different avatar by using a different photo,” he said, demonstrating the capability with another two images of himself.

Instead of transmitting a video stream, the researcher’s system sent only his voice — which was then fed into the NVIDIA Omniverse Audio2Face app. Audio2Face generates natural motion of the head, eyes and lips to match audio input in real time on a 3D head model. This facial animation went into Vid2Vid Cameo to synthesize natural-looking motion with the presenter’s digital avatar.

The technology isn’t limited to photorealistic digital avatars: the researcher also fed his speech through Audio2Face and Vid2Vid Cameo to voice an animated character. Using NVIDIA StyleGAN, he explained, developers can create infinite digital avatars modeled after cartoon characters or paintings.

The models, optimized to run on NVIDIA RTX GPUs, easily deliver video at 30 frames per second. The pipeline is also highly bandwidth efficient, since the presenter sends only audio data over the network instead of transmitting a high-resolution video feed.
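To give a rough sense of the bandwidth savings described above, here is a back-of-the-envelope sketch in Python. The two bitrates are illustrative assumptions chosen for this example (a compressed voice stream and a compressed HD video call stream); they are not figures reported in the demo, and the helper function name is hypothetical.

```python
def kbps_to_megabytes_per_minute(kbps: float) -> float:
    """Convert a bitrate in kilobits per second to megabytes per minute."""
    bits_per_minute = kbps * 1000 * 60
    return bits_per_minute / 8 / 1_000_000


# Assumed, illustrative bitrates; neither figure comes from the demo.
ASSUMED_AUDIO_KBPS = 32     # assumption: a compressed voice stream
ASSUMED_VIDEO_KBPS = 2000   # assumption: a compressed HD video call stream

audio_mb = kbps_to_megabytes_per_minute(ASSUMED_AUDIO_KBPS)
video_mb = kbps_to_megabytes_per_minute(ASSUMED_VIDEO_KBPS)
ratio = ASSUMED_VIDEO_KBPS / ASSUMED_AUDIO_KBPS

print(f"audio only: {audio_mb:.2f} MB per minute")
print(f"video feed: {video_mb:.2f} MB per minute")
print(f"video needs about {ratio:.1f}x the bandwidth under these assumptions")
```

Under these assumed numbers, sending audio alone would use a small fraction of the data a video feed requires. Actual savings depend on the codecs and resolutions involved.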

Taking it a step further, the researcher showed that when his coffee shop surroundings got too loud, the RAD-TTS model could convert typed messages into his voice, replacing the audio fed into Audio2Face. The breakthrough deep learning-based text-to-speech tool can synthesize lifelike speech from arbitrary text inputs in milliseconds.

RAD-TTS can synthesize a variety of voices, helping developers bring book characters to life or even perform rap songs like “The Real Slim Shady” by Eminem, as the research team showed in the demo’s finale.

SIGGRAPH continues through Aug. 13. Check out the full lineup of NVIDIA events at the conference and catch the premiere of our documentary, “Connecting in the Metaverse: The Making of the GTC Keynote,” on Aug. 11.