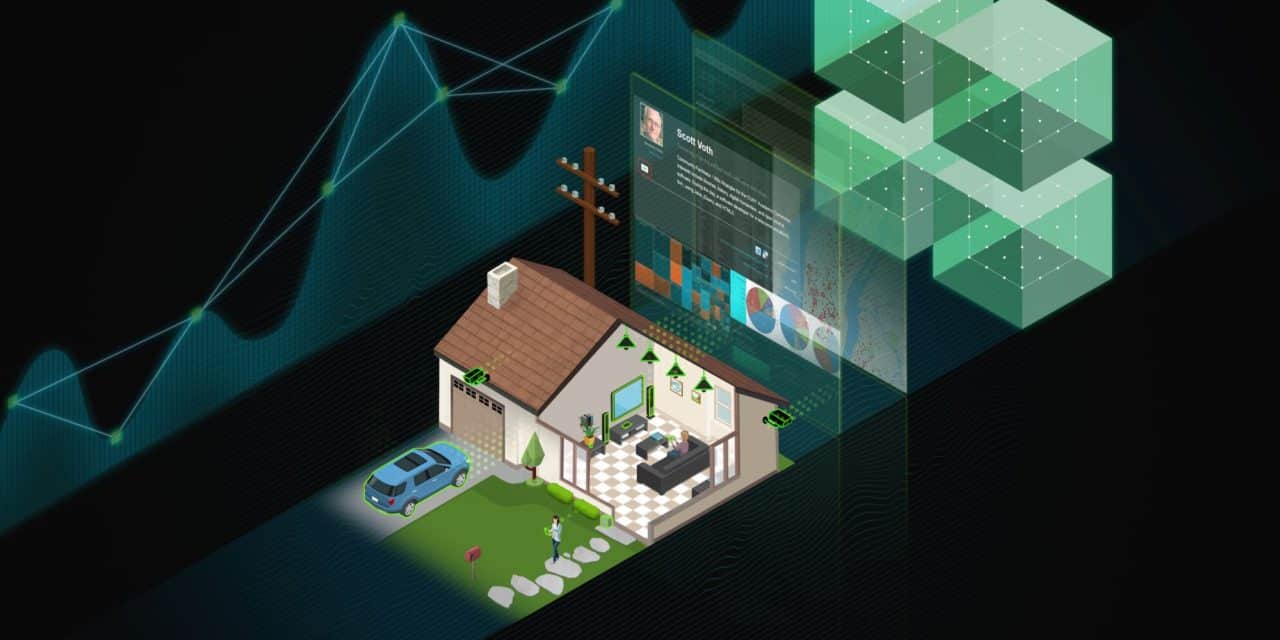

As 5G takes off, the implications for the telecom industry are enormous. AI can help — and there are applications available right now to get telecom providers started.

But plenty of other industries can also benefit from the approaches to dealing with this shift. If data is the ore for many businesses, then AI can help them find the gold within it.

As telecoms reevaluate their IT infrastructure to provide high visibility into network health and the best customer service, they face four key considerations.

The Trend Toward Data-First Automation

Businesses of all sorts, including telecoms, are finding themselves on what some call a data-first automation journey. It’s one that puts data at the forefront of decision-making by combining domain expertise in an accelerated data science environment — and that ultimately uses automation to speed time to data and augment the capabilities of humans.

Telecom operations teams are faced with three important trends:

- 5V has been failing. Volume, value, velocity, variety and veracity are the five characteristics of data. Data at volume has been too expensive to save in its entirety. The value of data has been low because its insights couldn’t be extracted. Velocity and variety have been hard to handle unless money was thrown at the problem. And veracity has often been a function of manual intervention.

- Adopt VAST. (Yes, another acronym.) VAST, which stands for volume, agility and spatiotemporal, is the antidote to a sagging 5V, according to OmniSci, a GPU-accelerated analytics and visualization company. Data volume is growing: it’s common to see use cases with 1 billion rows of data. Analysts expect agile dashboards and pipelines that enable real-time data ingestion and decision-making. At least 80 percent of data records created today contain spatiotemporal data, and billions of these data points can be visualized in milliseconds.

- AI can’t wait, so move at the speed of light. Identify your customer’s problems; build your product; gather and normalize data; visualize it; and make decisions as fast as possible.
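To make the spatiotemporal point concrete, here is a minimal sketch of binning network events by time window and map grid cell, which is the kind of aggregation a dashboard would run before plotting. It is plain CPU Python for illustration, not OmniSci's method; the function name `bin_events`, the 0.01-degree cell size, the 5-minute window and the sample events are all assumptions.

```python
import math
from collections import Counter


def bin_events(events, cell_deg=0.01, window_s=300):
    """Count events per (time window, grid cell).

    events: iterable of (epoch_seconds, latitude, longitude) tuples.
    cell_deg and window_s are illustrative defaults, not recommended values.
    """
    counts = Counter()
    for ts, lat, lon in events:
        # Snap the timestamp down to the start of its time window.
        window_start = int(ts // window_s) * window_s
        # Snap the coordinates down to a square grid cell.
        cell = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        counts[(window_start, cell)] += 1
    return counts


# Hypothetical sample: three network events near the same location.
events = [
    (1577836810, 40.7128, -74.0060),
    (1577836860, 40.7129, -74.0061),
    (1577837160, 40.7128, -74.0060),
]
counts = bin_events(events)
for (window_start, cell), n in sorted(counts.items()):
    print(window_start, cell, n)
```

The first two events fall into the same window and cell, while the third lands in the next window. At billions of rows, the same group-by pattern is what a GPU-accelerated engine would parallelize.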

Striving to Stay Relevant

AI can help organizations stay ahead of the competition. It can help keep corner cases from catching them off guard. And it can provide another line of defense, watching out for an organization’s interests around the clock.

But this requires dedication and effort to understand how AI techniques can help solve use cases in a meaningful way, as well as an understanding of potential limitations:

- Building deep learning models is sexy, but data is the foundation. Spending time on data (cleaning it, normalizing it, applying some AI to it) helps avoid a garbage-in, garbage-out situation.

- Data isn’t structured the same way as privacy and governance regulations are written. Traditional data protection approaches aren’t improving fast enough to keep up with data consolidation.

- With data used for AI increasing at a rate IT teams aren’t familiar with, many organizations struggle to keep up with their hardware infrastructure needs. They’re scrambling to stay on top of hidden costs, increasing workloads and shadow IT.
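The cleaning and normalizing step mentioned in the list above can be sketched in a few lines. This is an illustrative example only: the field names (`cell_id`, `ts`, `latency_ms`), the validity rules and the sample records are assumptions, and a real pipeline would apply whatever rules its own data calls for.

```python
def clean_records(records):
    """Drop rows with missing, non-numeric or negative latency, and remove duplicates."""
    seen = set()
    cleaned = []
    for rec in records:
        try:
            latency = float(rec["latency_ms"])
        except (KeyError, TypeError, ValueError):
            continue  # missing or unparseable value
        if latency != latency or latency < 0:
            continue  # NaN or negative value
        key = (rec.get("cell_id"), rec.get("ts"))
        if key in seen:
            continue  # duplicate measurement
        seen.add(key)
        cleaned.append({"cell_id": key[0], "ts": key[1], "latency_ms": latency})
    return cleaned


def min_max_normalize(values):
    """Rescale values to the range [0, 1]."""
    if not values:
        return []
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical raw records with typical defects.
raw = [
    {"cell_id": "A", "ts": 1, "latency_ms": "10"},
    {"cell_id": "A", "ts": 1, "latency_ms": "10"},   # duplicate
    {"cell_id": "B", "ts": 1, "latency_ms": None},   # missing
    {"cell_id": "C", "ts": 1, "latency_ms": "n/a"},  # unparseable
    {"cell_id": "D", "ts": 1, "latency_ms": 30},
    {"cell_id": "E", "ts": 1, "latency_ms": 20.0},
]
cleaned = clean_records(raw)
normalized = min_max_normalize([r["latency_ms"] for r in cleaned])
print(normalized)
```

Three of the six records survive cleaning, and their latencies are rescaled so that a downstream model sees comparable values rather than raw strings and gaps.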

GPU Acceleration to Save Time, Resources and Money

Instead of leaving teams to wait for results and handle tedious tasks by hand, GPU-accelerated data preparation solutions like Datalogue use deep learning to automatically extract, understand, transform and load data.

GPU-accelerated machine learning algorithms and libraries, like RAPIDS, can help shorten model training times, generate forecasts and detect anomalies.
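As a rough illustration of what anomaly detection means here, the sketch below flags outliers in a latency series using a modified z-score based on the median and the median absolute deviation (MAD). It is a plain-Python CPU stand-in chosen for this example, not the RAPIDS API and not a method named in this article; the function name `find_anomalies`, the 3.5 threshold and the sample data are assumptions.

```python
import statistics


def find_anomalies(values, threshold=3.5):
    """Return indices whose modified z-score exceeds the threshold.

    The modified z-score is 0.6745 * (x - median) / MAD. It is less
    distorted by the outliers themselves than a mean/stdev z-score.
    """
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        return []  # no spread to measure against
    return [
        i
        for i, v in enumerate(values)
        if abs(0.6745 * (v - median) / mad) > threshold
    ]


# Hypothetical latency samples in milliseconds with one spike.
latencies = [20, 21, 19, 22, 20, 21, 95, 20, 19, 21]
print(find_anomalies(latencies))
```

Only the spike at index 6 is flagged. On production volumes, the same statistic could be computed with a GPU dataframe library, though that substitution is not shown or tested here.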

And GPU-accelerated analytics and visualization platforms, like OmniSci, can help visualize billions of rows of data in milliseconds. This gives data scientists and analysts the ability to work at the speed of thought and use their creativity to draw insights from data and create timely, valuable reports.

These tools can answer questions that were previously unanswerable and use massive amounts of data to gain a more holistic view.

Datalogue customers, for example, are seeing a 98 percent reduction in time to data. The investment return is on the order of hundreds of thousands of dollars per month. They’re using Datalogue to generate 100k+ data pipelines on a monthly basis.

OmniSci customers can easily view 10-billion-row datasets in 300 milliseconds, with no indexing or aggregation.

These kinds of comprehensive offerings are already helping customers of Datalogue, OmniSci and NVIDIA save at least $50 million in operational costs.

When to Start: Now. How to Start? Read On

Everyone talks about starting now, but how do you actually start?

- First, identify the fundamental use cases that the organization or its customers find difficult to solve, whether it’s in automation, reporting or governance. Think about how automating certain parts of that workflow with AI would change the way data is used, saved and transformed.

- Clearly define a few of these use cases and prove the concept. Consider how visualization of the results can demonstrate it. And add a stretch use case that extends the usefulness of the new data by applying a predictive model to the dataset or some other advanced discovery model.

- Data is the lifeblood of insights. Experiment quickly, then scale. If you can get 90+ percent there in a week, that’s more valuable than getting to 100 percent in a year. And when designing data processes, think about the problems you’re solving, rather than the data sources.

- Solving problems with data is a team sport. Choose the right tools for the different team members involved, and then optimize the underlying platform to provide the tools and interfaces needed to maximize their contributions.

- Start. More data analytics companies than ever are creating comprehensive offerings that run on NVIDIA GPUs. One of the top infrastructure options is NVIDIA DGX systems, purpose-built AI supercomputers that combine integrated software and hardware to maximize productivity and reduce total costs.

To learn more, watch the on-demand webinar “AI for Network Operations.”

More resources:

- AI for Network Operations solution brief

- AI for Network Operations keynote

- NVIDIA DGX systems

- NVIDIA accelerated data science