Roll out of bed, fire up the laptop, turn on the webcam — and look picture-perfect in every video call, with the help of AI developed by NVIDIA researchers.

Vid2Vid Cameo, one of the deep learning models behind the NVIDIA Maxine software development kit for video conferencing, uses generative adversarial networks (known as GANs) to synthesize realistic talking-head videos using a single 2D image of a person.

To use it, participants submit a reference image — which could be either a real photo of themselves or a cartoon avatar — before joining a video call. During the meeting, the AI model will capture each individual’s real-time motion and apply it to the previously uploaded still image.

That means that by uploading a photo of themselves in formal attire, meeting attendees with mussed hair and pajamas can appear on a call in work-appropriate attire, with AI mapping the user’s facial movements to the reference photo. If the subject is turned to the left, the technology can adjust the viewpoint so the attendee appears to be directly facing the webcam.

Besides helping meeting attendees look their best, this AI technique also shrinks the bandwidth needed for video conferencing by up to 10x, avoiding jitter and lag. It’ll soon be available in the NVIDIA Video Codec SDK as the AI Face Codec.

“Many people have limited internet bandwidth, but still want to have a smooth video call with friends and family,” said NVIDIA researcher Ming-Yu Liu, co-author on the project. “In addition to helping them, the underlying technology could also be used to assist the work of animators, photo editors and game developers.”

Vid2Vid Cameo was presented this week at the prestigious Conference on Computer Vision and Pattern Recognition — one of 28 NVIDIA papers at the virtual event. It’s also available on the AI Playground, where anyone can experience our research demos firsthand.

AI Steals the Show

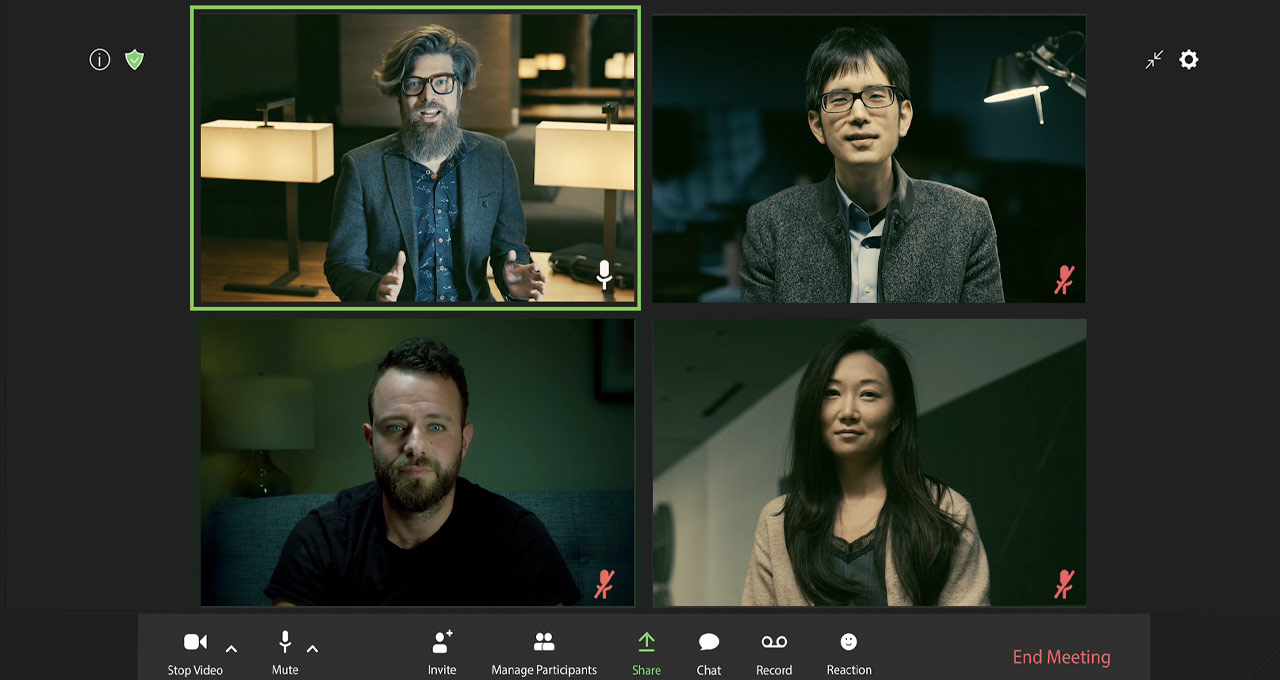

In a nod to classic heist movies (and a hit Netflix show), NVIDIA researchers put their talking-head GAN model through its paces for a virtual meeting. The demo highlights key features of Vid2Vid Cameo, including facial redirection, animated avatars and data compression.

These capabilities are coming soon to the NVIDIA Maxine SDK, which gives developers optimized pretrained models for video, audio and augmented reality effects in video conferencing and live streaming.

Developers can already adopt Maxine AI effects including intelligent noise removal, video upscaling and body pose estimation. The free-to-download SDK can also be paired with the NVIDIA Riva platform for conversational AI applications, including transcription and translation.

Hello from the AI Side

Vid2Vid Cameo requires just two elements to create a realistic AI talking head for video conferencing: a single shot of the person’s appearance and a video stream that dictates how that image should be animated.

Developed on NVIDIA DGX systems, the model was trained using a dataset of 180,000 high-quality talking head videos. The network learned to identify 20 key points that can be used to model facial motion without human annotations. The points encode the location of features including the eyes, mouth and nose.

It then extracts these key points from a reference image of the caller, which could be sent to other video conference participants ahead of time or re-used from previous meetings. This way, instead of sending bulky live video streams from one participant to the other, video conferencing platforms can simply send data on how the speaker’s key facial points are moving.

On the receiver’s side, the GAN model uses this information to synthesize a video that mimics the appearance of the reference image.

By compressing and sending just the head position and key points back and forth, instead of full video streams, this technique can reduce bandwidth needs for video conferences by 10x, providing a smoother user experience. The model can be adjusted to transmit a differing number of key points to adapt to different bandwidth environments without compromising visual quality.

The viewpoint of the resulting talking head video can also be freely adjusted to show the user from a side profile or straight on, as well as from lower or higher camera angles. This feature could also be applied by photo editors working with still images.

NVIDIA researchers found that Vid2Vid Cameo outperforms state-of-the-art models by producing more realistic and sharper results — whether the reference image and the video are from the same person, or when the AI is tasked with transferring movement from one person onto a reference image of another.

The latter feature can be used to apply the facial motions of a speaker to animate a digital avatar in a video conference, or even lend realistic expression and movement to a video game or cartoon character.

The paper behind Vid2Vid Cameo was authored by NVIDIA researchers Ting-Chun Wang, Arun Mallya and Ming-Yu Liu. The NVIDIA Research team consists of more than 200 scientists around the globe, focusing on areas such as AI, computer vision, self-driving cars, robotics and graphics.

Our thanks to actor Edan Moses, who performed the English voiceover of The Professor on “La Casa De Papel/Money Heist” on Netflix, for his contribution to the video above featuring our latest AI research.