Editor’s note: This is the second in our new CUDA Accelerated news series, which showcases the latest software libraries, NVIDIA NIM microservices and tools that help developers, software makers and enterprises use GPUs to accelerate their applications.

The world’s most important and complex problems are demanding more and more compute power.

To get maximum performance and throughput, especially for large generative AI and scientific computing projects, the best approach is to use entire data centers as single compute units by taking advantage of multi-GPU, multi-node parallel processing and distributed computing.

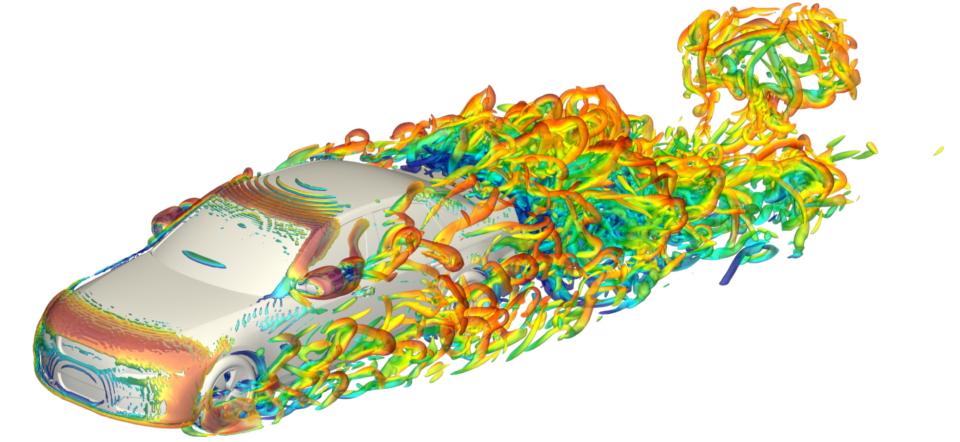

NVIDIA Warp, an open-source framework for accelerated scientific computing in Python, increases large-scale fluid simulations up to 8x faster than before.

The just-released Warp 1.5 supports tile-based programming and increases performance through integration with high-performance NVIDIA MathDx libraries. The integration enables accelerated dense linear algebra for scientific and simulation workloads.

Software developers can use Warp to write efficient and differentiable GPU kernels natively in Python, where Warp now exposes Tensor Core operations to accelerate simulation for robotics, computational fluid dynamics and digital twins.

Warp uses cuBLASDx and cuFFTDx to implement matrix-multiply and fast fourier transform (FFT) tile operations. Combined with Warp’s tile programming model, these NVIDIA device-side math libraries enable seamless fusion of Tensor Core accelerated GEMM, FFT and other tile operations within a single kernel.

With this approach, applications can outperform traditional linear algebra or tensor frameworks by a factor of 4x when requiring dense linear algebra, such as with robot forward dynamics.

Customers such as Autodesk are using NVIDIA Warp to write large-scale fluid simulations up to 8x faster and 5x larger than traditional frameworks.

cuPyNumeric Large-Scale Cluster Library

The NVIDIA cuNumeric accelerated computing library advances scientific discovery by helping researchers scale to powerful computing clusters without modifying their Python code. As data sizes and computational complexities grow, CPU-based programs need help meeting the speed and scale demanded by cutting-edge research.

With the cuPyNumeric library, researchers can now take their data-crunching Python code and effortlessly run it on GPU-powered workstations, cloud servers or massive supercomputers. The faster they can work through their data, the quicker they can make decisions about promising data points, trends worth investigating and adjustments to their experiments.

Once cuPyNumeric is applied, they can run their code on one or thousands of GPUs with zero code changes.

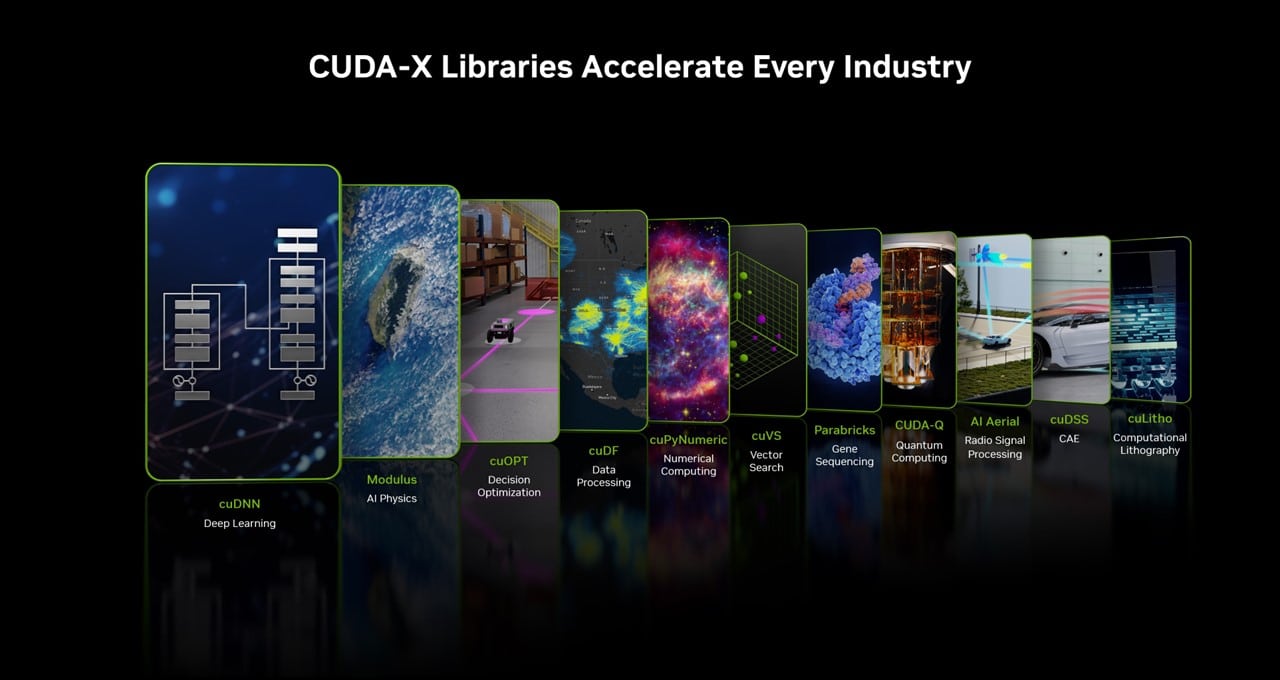

Each CUDA-accelerated library is optimized to harness hardware features specific to NVIDIA GPUs and CPUs. Combined, they encompass the power of the NVIDIA suite of products, services and enabling technologies.

NVIDIA provides over 400 libraries. New updates continue to be added on the CUDA platform roadmap, expanding across diverse use cases:

GPUs can’t simply accelerate software written for general-purpose CPUs. Specialized algorithm software libraries and tools are needed to accelerate specific workloads, especially in scientific computing and distributed computing architectures.

The demand for more computing horsepower grows daily, but without an increased energy bill. Using multi-GPU, multi-node parallel processing and distributed computing is the best way to help solve this demand, while reducing energy.

Learn more about NVIDIA CUDA libraries and microservices for AI.