Cloud-native supercomputing is the next big thing in supercomputing, and it’s here today, ready to tackle the toughest HPC and AI workloads.

The University of Cambridge is building a cloud-native supercomputer in the UK. Two teams of researchers in the U.S. are separately developing key software elements for cloud-native supercomputing.

The Los Alamos National Laboratory, as part of its ongoing collaboration with the UCF Consortium, is helping to deliver capabilities that accelerate data algorithms. Ohio State University is updating Message Passing Interface software to enhance scientific simulations.

NVIDIA is making cloud-native supercomputers available to users worldwide in the form of its latest DGX SuperPOD. It packs key ingredients such as the NVIDIA BlueField-2 data processing unit (DPU), which is now in production.

So, What Is Cloud-Native Supercomputing?

Like Reese’s treats that wrap peanut butter in chocolate, cloud-native supercomputing combines the best of two worlds.

Cloud-native supercomputers blend the power of high performance computing with the security and ease of use of cloud computing services.

Put another way, cloud-native supercomputing provides an HPC cloud with a system as powerful as a TOP500 supercomputer that multiple users can share securely, without sacrificing the performance of their applications.

What Can Cloud-Native Supercomputers Do?

Cloud-native supercomputers pack two key features.

First, they let multiple users share a supercomputer while ensuring that each user’s workload stays secure and private. This capability, known as “multi-tenant isolation,” is available in today’s commercial cloud computing services. But it’s typically not found in HPC systems used for technical and scientific workloads, where raw performance is the top priority and security services have historically slowed operations.

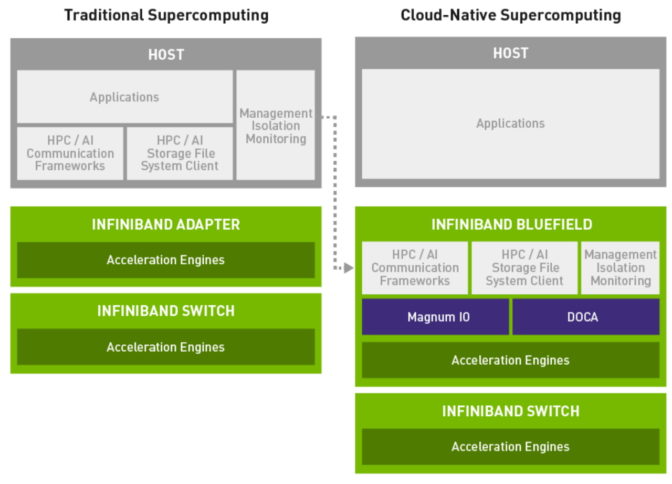

Second, cloud-native supercomputers use DPUs to handle tasks such as storage, security for tenant isolation and systems management. This frees the CPU to focus on processing tasks, maximizing overall system performance.

The result is a supercomputer that enables native cloud services without a loss in performance. Looking forward, DPUs can handle additional offload tasks, so systems maintain peak efficiency running HPC and AI workloads.

How Do Cloud-Native Supercomputers Work?

Under the hood, today’s supercomputers couple two kinds of brains — CPUs and accelerators, typically GPUs.

Accelerators pack thousands of processing cores to speed parallel operations at the heart of many AI and HPC workloads. CPUs are built for the parts of algorithms that require fast serial processing. But over time they’ve become burdened with growing layers of communications tasks needed to manage increasingly large and complex systems.

Cloud-native supercomputers include a third brain to build faster, more efficient systems. They add DPUs that offload security, communications, storage and other jobs modern systems need to manage.

A Commuter Lane for Supercomputers

In traditional supercomputers, a computing job sometimes has to wait while the CPU handles a communications task. It’s a familiar problem that generates what’s called system noise.

In cloud-native supercomputers, computing and communications flow in parallel. It’s like opening a third lane on a highway to help all traffic flow more smoothly.
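To make the third-lane idea concrete, here is a minimal Python sketch, assuming communication can be handed off to a separate worker. It is a toy model, not MPI or DPU code: `time.sleep` stands in for both compute and communication, a thread stands in for the offload engine, and the names `compute`, `communicate`, `run_serial` and `run_overlapped` are hypothetical. When communication runs in its own lane, total time approaches the longer of the two phases rather than their sum.

```python
import threading
import time

# Hypothetical durations (seconds) for one iteration of a simulated job.
COMPUTE_S = 0.2
COMM_S = 0.2


def compute(duration: float) -> None:
    """Stand-in for an application's compute phase."""
    time.sleep(duration)


def communicate(duration: float) -> None:
    """Stand-in for a communication task, e.g. a data exchange between nodes."""
    time.sleep(duration)


def run_serial() -> float:
    """Compute waits while communication runs in the same lane."""
    start = time.perf_counter()
    communicate(COMM_S)
    compute(COMPUTE_S)
    return time.perf_counter() - start


def run_overlapped() -> float:
    """Communication runs on a separate worker (a stand-in for an offload
    engine) while compute proceeds; we only wait for it at the end.
    Python threads suffice here because time.sleep releases the GIL."""
    start = time.perf_counter()
    worker = threading.Thread(target=communicate, args=(COMM_S,))
    worker.start()   # hand off the communication, then keep computing
    compute(COMPUTE_S)
    worker.join()    # wait for the communication to finish
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"serial:     {run_serial():.2f} s")
    print(f"overlapped: {run_overlapped():.2f} s")
```

On real clusters the same pattern is typically written with non-blocking MPI calls (for example `MPI_Isend`/`MPI_Irecv` followed by `MPI_Wait`), and how much communication actually hides behind computation depends in part on whether something other than the host CPU can make progress on it.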

Early tests show cloud-native supercomputers can perform HPC jobs 1.4x faster than traditional ones, according to work at the MVAPICH lab at Ohio State, a specialist in HPC communications. The lab also showed cloud-native supercomputers achieve a 100 percent overlap of compute and communications functions, 99 percent higher than existing HPC systems.
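The overlap figure is easier to read with a concrete metric in mind. The article does not say exactly how the Ohio State measurement is defined, so the following Python function uses one common definition as an assumption: time the compute alone, the communication alone, and both issued together; whatever part of the combined time exceeds the compute time is communication that was not hidden. The function name `overlap_percent` and the timings are hypothetical.

```python
def overlap_percent(t_overall: float, t_compute: float, t_comm: float) -> float:
    """Percent of communication time hidden behind computation.

    Assumed definition (illustrative, not necessarily the metric behind the
    published results):
      t_overall: wall time with compute and communication issued together
      t_compute: wall time of the compute phase alone
      t_comm:    wall time of the communication alone
    """
    if t_comm <= 0:
        raise ValueError("t_comm must be positive")
    # Time by which the combined run exceeds compute alone = exposed communication.
    exposed = max(0.0, t_overall - t_compute)
    # Whatever part of the pure communication time is not exposed counts as overlapped.
    return max(0.0, 100.0 * (1.0 - exposed / t_comm))


if __name__ == "__main__":
    # Hypothetical timings in milliseconds: compute 10, communication 4.
    print(overlap_percent(14.0, 10.0, 4.0))  # → 0.0   (nothing hidden)
    print(overlap_percent(12.0, 10.0, 4.0))  # → 50.0  (half hidden)
    print(overlap_percent(10.0, 10.0, 4.0))  # → 100.0 (fully hidden)
```

Under this definition, 100 percent overlap means the combined run takes no longer than the compute phase by itself.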

Experts Speak on Cloud-Native Supercomputing

That’s why around the world, cloud-native supercomputing is coming online.

“We’re building the first academic cloud-native supercomputer in Europe to offer bare-metal performance with cloud-native InfiniBand services,” said Paul Calleja, director of Research Computing Services at Cambridge University.

“This system, which would rank among the top 100 in the November 2020 TOP500 list, will enable our researchers to optimize their applications using the latest advances in supercomputing architecture,” he added.

HPC specialists are paving the way for further advances in cloud-native supercomputers.

“The UCF consortium of industry and academic leaders is creating the production-grade communication frameworks and open standards needed to enable the future for cloud-native supercomputing,” said Steve Poole, speaking in his role as director of the Unified Communication Framework, whose members include representatives from Arm, IBM, NVIDIA, U.S. national labs and U.S. universities.

“Our tests show cloud-native supercomputers have the architectural efficiencies to lift supercomputers to the next level of HPC performance while enabling new security features,” said Dhabaleswar K. (DK) Panda, a professor of computer science and engineering at Ohio State and lead of its Network-Based Computing Laboratory.

Learn More About Cloud-Native Supercomputers

To learn more, check out the NVIDIA Technical Blog, as well as our technical overview on cloud-native supercomputing. You can also find more information online about the new system at the University of Cambridge and NVIDIA’s new cloud-native supercomputer.

And to get the big picture on the latest advances in HPC, AI and more, watch the GTC keynote.