Editor’s note: This blog was updated on Oct. 13, 2022.

What Is Edge Computing?

Edge computing is the concept of capturing and processing data as close to its source or end user as possible. Typically, the data source is an internet of things (IoT) sensor. The processing is done locally by placing servers or other hardware near the physical location of the data sources to process the data.

Since edge computing processes data locally — on the edge of the network, instead of in the cloud or a centralized data center — it minimizes latency and data transit costs, allowing for real-time feedback and decision-making.

The always-on, instantaneous feedback that edge computing offers is especially critical for applications where human safety is a factor. For example, it’s crucial for self-driving cars, where saving even milliseconds of data processing and response times can be key to avoiding accidents. It’s also critical in hospitals, where doctors rely on accurate, real-time data to treat patients.

While edge computing is particularly important for modern applications such as data science and machine learning, also known as edge AI, it’s not a new concept.

The History of Edge Computing

In fact, edge computing can be traced back to the 1990s, when content delivery networks (CDNs) acted as distributed data centers. At the time, CDNs were limited to caching images and videos, not massive data workloads.

By the 2000s, the explosion of smart devices strained existing IT infrastructure. However, inventions such as peer-to-peer (P2P) networks, where computers are connected and share resources without going through a separate, centralized server computer, alleviated the strain.

By the mid-2000s, large companies started renting computing and data storage resources to end users via public clouds. As cloud-based applications and businesses working from many locations grew in popularity, processing data as efficiently as possible became increasingly important.

All of these technologies have led to our current form of edge computing, in which edge nodes can deliver low-latency access to data-intensive resources and insights. These capabilities build on the CDN’s low-latency abilities, P2P networks’ decentralized platform and the cloud’s scalability and resiliency. Together, these technologies have created a more efficient, resilient and reliable computing framework.

How Does Edge Computing Work?

Edge computing works by processing data as close to its source or end user’s device as possible. It keeps data, applications and computing power away from a centralized network or data center.

Traditionally, data produced by sensors is manually reviewed by humans, left unprocessed, or sent to the cloud or a data center for processing and then sent back to the device. Relying solely on manual reviews results in slow, inefficient processes. And while cloud computing provides computing resources, transmitting the data and processing it remotely strains bandwidth and adds latency.

Bandwidth is the rate at which data is transferred over the internet. When data is sent to the cloud, it travels through a wide area network, which can be costly due to its global coverage and high bandwidth needs. When processing data at the edge, local area networks can be utilized, resulting in higher bandwidth at lower costs.

Latency is the delay in sending information from one point to the next; it affects response times. It’s reduced when processing at the edge because data produced by sensors and IoT devices no longer needs to be sent to a centralized cloud to be processed.

By bringing computing to the edge, or closer to the source of data, latency is reduced and bandwidth is increased, resulting in faster insights and actions.
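To make the latency and bandwidth trade-off concrete, here is a rough back-of-the-envelope sketch. Every number in it (payload size, link speeds, round-trip delays) is an illustrative assumption rather than a measurement, and the model ignores processing time and protocol overhead.

```python
def response_time_ms(payload_mb: float, bandwidth_mbps: float, round_trip_ms: float) -> float:
    """Estimate the time to ship a payload and receive an answer.

    Transfer time is payload size divided by link bandwidth,
    plus one network round trip.
    """
    transfer_ms = (payload_mb * 8 / bandwidth_mbps) * 1000  # MB -> megabits -> ms
    return transfer_ms + round_trip_ms


# Hypothetical figures chosen only for illustration.
FRAME_MB = 2.0  # one camera frame

# Wide area network path to a distant cloud region (assumed values).
cloud_ms = response_time_ms(FRAME_MB, bandwidth_mbps=50, round_trip_ms=80)
# Local area network path to an on-site edge server (assumed values).
edge_ms = response_time_ms(FRAME_MB, bandwidth_mbps=1000, round_trip_ms=2)

print(f"cloud: {cloud_ms:.0f} ms, edge: {edge_ms:.0f} ms")
```

With these assumed inputs, the cloud path comes to roughly 400 ms and the edge path to roughly 18 ms. Real deployments will differ, but the shape of the calculation stays the same.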

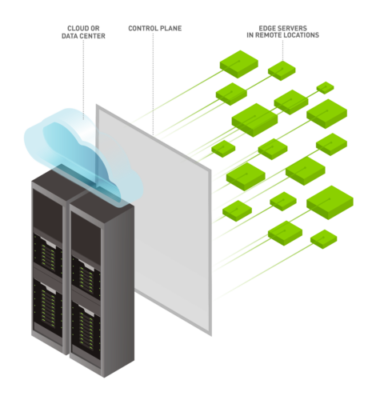

Edge computing can run on one or more servers placed to shorten the distance between where data is collected and where it is processed, which reduces bottlenecks and accelerates applications. An ideal edge infrastructure also involves a centralized software platform that can remotely manage all edge systems in one interface.

Why Edge Computing? What Are the Benefits of Edge Computing?

The shift to edge computing offers businesses new opportunities to glean insights from their large datasets. The main benefits of edge computing are:

- Lower latency: Latency is reduced when processing at the edge because data produced by sensors and IoT devices no longer needs to be sent to a centralized cloud to be processed.

- Reduced bandwidth costs: When data is sent to the cloud, it travels through a wide area network, which can be costly due to its global coverage and high bandwidth needs. When processing data at the edge, local area networks can be utilized, resulting in higher bandwidth at lower costs.

- Data sovereignty: When data is processed at the location it is collected, edge computing allows organizations to keep all of their data and compute at a suitable location. This results in reduced exposure to cybersecurity attacks and adherence to strict, ever-changing data location laws.
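One common way to realize the bandwidth benefit is to summarize raw readings on site and send only the summary upstream. The sketch below illustrates that idea under stated assumptions: the sensor values are synthetic, the `summarize` helper is hypothetical, and JSON size stands in for the amount of data sent over the network.

```python
import json
import statistics


def summarize(readings: list[float]) -> dict:
    """Reduce a window of raw sensor readings to a small summary record."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(statistics.fmean(readings), 2),
    }


# Hypothetical window: 1,000 temperature readings collected on site.
raw = [20.0 + (i % 10) * 0.1 for i in range(1000)]

# Compare the size of sending everything with the size of sending a summary.
raw_bytes = len(json.dumps(raw).encode())
summary = summarize(raw)
summary_bytes = len(json.dumps(summary).encode())

print(f"raw: {raw_bytes} bytes, summary: {summary_bytes} bytes")
```

The raw readings can stay on the local system, which also fits the data sovereignty point above: only the aggregate leaves the site.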

Beyond edge computing, most corporations are looking to benefit from edge AI — the fusion of edge computing and AI. In addition to the benefits above, edge AI offers:

- Intelligence: AI applications are more powerful and flexible than conventional applications that can respond only to inputs that are explicitly anticipated. An AI neural network is not trained on how to answer a specific question, but rather how to answer a particular type of question, even if the question itself is new. This gives the AI algorithm the intelligence to process infinitely diverse inputs, like text, spoken words or video.

- Real-time Insights: Since edge technology analyzes data locally rather than in a faraway cloud delayed by long-distance communications, it responds to users’ needs and can produce inferences in real time.

- Persistent improvement: AI models grow increasingly accurate as they train on more data. When an edge AI application confronts data that it can’t accurately or confidently process, it typically uploads it to the cloud so that the AI algorithm can retrain and learn from it. So the longer a model is in production at the edge, the more accurate the model will become.
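The persistent-improvement loop described above can be sketched as a simple confidence check: act locally on confident predictions and queue uncertain samples for upload. This is a minimal illustration, not a description of any particular product. The `EdgeNode` class, the 0.8 threshold and the sample data are all hypothetical.

```python
from dataclasses import dataclass, field

CONFIDENCE_THRESHOLD = 0.8  # assumed cutoff; tune per application


@dataclass
class EdgeNode:
    """Acts on confident predictions locally and queues the rest for retraining."""

    upload_queue: list = field(default_factory=list)

    def handle(self, sample_id: str, label: str, confidence: float) -> str:
        if confidence >= CONFIDENCE_THRESHOLD:
            return f"act locally on {label}"
        # Low confidence: keep the sample so the cloud can retrain on it later.
        self.upload_queue.append(sample_id)
        return "queued for cloud retraining"


node = EdgeNode()
# (sample id, predicted label, confidence) tuples standing in for model output.
predictions = [
    ("img-001", "forklift", 0.97),
    ("img-002", "person", 0.55),
    ("img-003", "pallet", 0.91),
]
results = [node.handle(*p) for p in predictions]

print(results)
print(node.upload_queue)  # only the low-confidence sample is queued
```

In practice the queue would be drained by a background uploader, and the retrained model would be pushed back to the edge. Both steps are left out here.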

With so much value to be gained, businesses are rapidly adopting edge computing. Gartner has predicted that by the end of 2023, 50% of large enterprises will have a documented edge computing strategy, compared to less than 5% in 2020 [1]. Learn more about how AI is changing how enterprises manage edge applications.

Why Is Edge Computing Needed Now?

In recent years, edge computing has become increasingly important due to the convergence of IoT and 5G. These technologies are creating the use cases that require organizations to consider edge computing.

With the proliferation of IoT devices came the generation of big data. Organizations suddenly collecting data from every aspect of their businesses realized their applications weren’t built to handle such large volumes of data.

Additionally, they came to realize that the infrastructure for transferring, storing and processing large volumes of data can be extremely expensive and difficult to manage. That may be why only a fraction of data collected from IoT devices is ever processed. In some situations, it’s as low as 25%.

And the problem is compounding. There are 40 billion IoT devices today. Arm predicts there could be 1 trillion IoT devices by 2035. As the number of connected devices grows and the amount of data that needs to be transferred, stored and processed increases, organizations are shifting to edge computing to alleviate the costs required to use the same data in cloud computing models.

5G networks, which can clock in 10x faster than 4G ones, are built to allow each node to serve hundreds of edge devices, increasing the possibilities for AI-enabled services at edge locations.

With edge computing’s powerful, quick and reliable processing power, businesses have the potential to explore new business opportunities, gain real-time insights, increase operational efficiency and improve their user experiences.

What Are the Types of Edge Computing?

The definition of edge computing is broad. Often, the term refers to any computing done outside of a cloud or traditional data center.

While there are different types of edge computing, the three primary categories are:

- Provider edge: The provider edge is a network of computing resources accessed over the internet. It’s mainly used for delivering services from telecommunication companies, service providers, media companies and other CDN operators.

- Enterprise edge: The enterprise edge is an extension of the enterprise data center, consisting of data centers at remote office sites, micro-data centers, or even racks of servers sitting in a compute closet on a factory floor. As with a traditional, centralized data center, this environment is generally owned and operated by IT. However, there may be space or power limitations at the enterprise edge that change the design of these environments.

- Industrial edge: The industrial edge is also known as the far edge. It generally encompasses smaller compute instances such as one or two small, ruggedized edge servers, or even an embedded system deployed outside a data center environment. Because these systems run outside of a normal data center, they pose a number of unique space, cooling, security and management challenges.

What Are the Differences and Similarities Between Edge Computing and Cloud Computing?

The main difference between cloud and edge computing is where the processing is located. For edge computing, processing occurs at the edge of a network, closer to the data source, while for cloud computing, processing occurs in the data center.

The table below summarizes the kinds of workloads and conditions each technology is best suited to:

| Cloud Computing | Edge Computing |
|---|---|
| Non-time-sensitive data processing | Real-time data processing |
| Reliable internet connection | Remote locations with limited or no internet connectivity |
| Dynamic workloads | Large datasets that are too costly to send to the cloud |
| Data in cloud storage | Highly sensitive data and strict data laws |

Edge and cloud computing have distinct features, and most organizations benefit from using both. A hybrid-cloud architecture allows enterprises to take advantage of the security and manageability of on-premises systems while also using public cloud resources from a service provider.
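The criteria in the comparison above can be turned into a rough placement rule of thumb. The sketch below is a hypothetical helper, not an established method. It assumes that any single edge-favoring trait is enough to suggest the edge, which real planning would weigh against cost and operational factors.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Traits taken from the cloud-versus-edge comparison (names are illustrative)."""

    time_sensitive: bool
    reliable_connectivity: bool
    data_costly_to_move: bool
    sensitive_or_regulated: bool


def suggest_placement(w: Workload) -> str:
    """Return 'edge' if any edge-favoring trait applies, else 'cloud'."""
    edge_signals = [
        w.time_sensitive,
        not w.reliable_connectivity,
        w.data_costly_to_move,
        w.sensitive_or_regulated,
    ]
    return "edge" if any(edge_signals) else "cloud"


video_analytics = Workload(
    time_sensitive=True,
    reliable_connectivity=True,
    data_costly_to_move=True,
    sensitive_or_regulated=False,
)
monthly_report = Workload(
    time_sensitive=False,
    reliable_connectivity=True,
    data_costly_to_move=False,
    sensitive_or_regulated=False,
)

print(suggest_placement(video_analytics))  # edge
print(suggest_placement(monthly_report))   # cloud
```

In a hybrid architecture the answer is often "both": time-critical inference at the edge, with aggregation and retraining in the cloud.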

Cloud-native technologies, such as containerization, can help manage edge computing solutions. IDC predicts that, by 2024, 75% of new operational applications deployed at the edge will leverage containerization to enable a more open and composable architecture necessary for resilient operations [2].

What Are Examples of Edge Computing Use Cases in Different Industries?

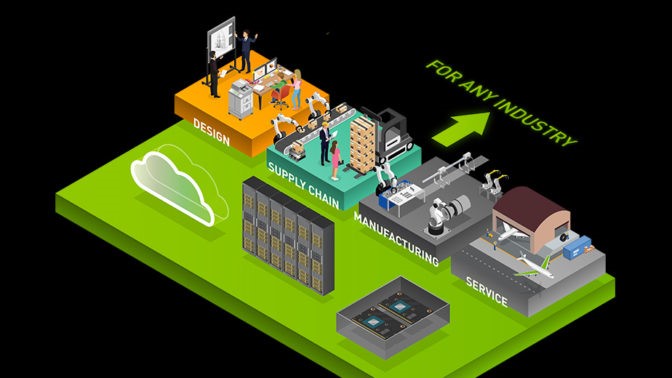

The most adopted form of edge computing is edge AI. This combination of edge computing and AI can bring real-time intelligence to businesses across industries such as retail, smart cities, manufacturing, healthcare and more.

Edge Computing for Retail

In the face of rapidly changing consumer demand, behavior and expectations, the world’s largest retailers enlist edge AI to deliver better experiences for customers.

With edge computing, retailers can boost their agility by:

- Reducing shrinkage: With in-store cameras and sensors using AI at the edge to analyze data, stores can identify and prevent instances of error, waste, damage and theft.

- Improving inventory management: Edge computing applications can use in-store cameras to alert store associates when inventories are low, reducing the incidences of stockouts.

- Streamlining shopping experiences: With edge computing’s fast data processing, retailers can implement voice ordering or product search to improve the customer experience for shoppers.

Learn more about the three pillars of edge AI in retail.

Edge Computing for Smart Cities

Cities, school campuses, stadiums and shopping malls are examples of the many places where edge AI is transforming locations into smart spaces. Edge AI helps make these spaces more operationally efficient, safe and accessible.

Edge computing has been used to transform operations and improve safety around the world in areas such as:

- Reducing traffic congestion: Nota uses vision AI to identify, analyze and optimize traffic. Cities use its offering to improve traffic flow, decrease traffic congestion-related costs and minimize the time drivers spend in traffic.

- Monitoring beach safety: Sightbit’s image-detection application spots dangers at beaches, such as rip currents and hazardous ocean conditions, allowing authorities to efficiently flag safety threats.

- Increasing airline and airport operation efficiency: Assaia created an AI-enabled video analytics application to help airlines and airports make better and quicker decisions around capacity, sustainability and safety.

Edge Computing for Automakers and Manufacturers

Factories, manufacturers and automakers are generating sensor data that can be used in a cross-referenced fashion to improve services.

Some popular use cases for promoting efficiency and productivity in manufacturing include:

- Predictive maintenance: Detecting anomalies early and predicting when machines will fail to avoid downtime.

- Quality control: Detecting defects in products and alerting staff instantly to reduce waste and improve manufacturing efficiency.

- Worker safety: Using a network of cameras and sensors equipped with AI-enabled video analytics to allow manufacturers to identify workers in unsafe conditions and to quickly intervene to prevent accidents.
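As an illustration of the predictive maintenance idea, the sketch below flags sensor readings that deviate sharply from a rolling baseline. A rolling z-score is only one simple approach, chosen here for brevity, and production systems typically use trained models. The window size, threshold and synthetic vibration signal are all assumptions.

```python
from collections import deque
import statistics


def detect_anomalies(readings, window=20, z_threshold=3.0):
    """Yield (index, value) for readings far from the recent rolling mean."""
    recent = deque(maxlen=window)
    for i, value in enumerate(readings):
        # Only score a reading once a full window of history is available.
        if len(recent) == window:
            mean = statistics.fmean(recent)
            stdev = statistics.pstdev(recent)
            if stdev > 0 and abs(value - mean) / stdev > z_threshold:
                yield i, value
        recent.append(value)


# Synthetic vibration signal with one injected spike at index 30.
signal = [1.0 + 0.01 * (i % 5) for i in range(50)]
signal[30] = 2.5

print(list(detect_anomalies(signal)))  # [(30, 2.5)]
```

Because the check needs only a small window of recent values, it can run on the edge device itself and raise an alert without a round trip to the cloud.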

Edge Computing for Healthcare

The combination of edge computing and AI is reshaping healthcare. Edge AI provides healthcare workers the tools they need to improve operational efficiency, ensure safety and provide the highest-quality care experience possible.

Two popular examples of AI-powered edge computing within the healthcare sector are:

- Operating rooms: AI models built on streaming images and sensors in medical devices are helping with image acquisition and reconstruction, workflow optimizations for diagnosis and therapy planning, measurements of organs and tumors, surgical therapy guidance, and real-time visualization and monitoring during surgeries.

- Hospitals: Smart hospitals are using technologies such as patient monitoring, patient screening, conversational AI, heart rate estimation, radiology scanners and more. Human pose estimation is a popular vision AI task that estimates key points on a person’s body such as eyes, arms and legs. It can be used to help notify staff when a patient moves or falls out of a hospital bed.

NVIDIA Technology at the Edge

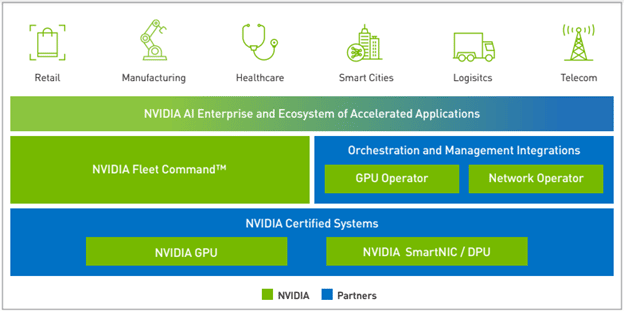

For enterprises to tap into the data generated from the billions of IoT sensors found in retail stores, on city streets and in hospitals, they need edge computing systems that deliver powerful, distributed compute, secure and simple remote management and compatibility with industry-leading technologies.

NVIDIA brings together an ecosystem of data science and AI products to allow enterprises to quickly harness the power of AI at the edge.

Mainstream servers for edge AI: NVIDIA GPUs and BlueField Data Processing Units provide a host of software-defined hardware engines for accelerated networking and security. These hardware engines provide best-in-class performance, with all necessary levels of enterprise data privacy, integrity and reliability built in. NVIDIA-Certified Systems ensure that a server is optimally designed for running modern applications in an enterprise.

Management solutions for edge AI: NVIDIA Fleet Command is a managed platform for container orchestration that streamlines provisioning and deployment of systems and AI applications at the edge. It simplifies the management of distributed computing environments with the scalability and resiliency of the cloud, turning every site into a secure, intelligent location.

For organizations looking to build their own management solution, the NVIDIA GPU Operator will automate the management of all NVIDIA software components needed to provision GPUs. These components include NVIDIA drivers to enable CUDA, a Kubernetes device plugin for GPUs, the NVIDIA container runtime, automatic node labeling and an NVIDIA Data Center GPU Manager-based monitoring agent. NVIDIA also offers a host of other cloud-native technologies to help with edge developments.

Applications for edge AI: To complement these offerings, NVIDIA has also worked with partners to create a whole ecosystem of software development kits, applications and industry frameworks in all areas of accelerated computing. This software is available to be remotely deployed and managed using the NVIDIA NGC software hub. AI and IT teams can get easy access to a wide variety of pretrained AI models and Kubernetes-ready Helm charts to implement into their edge AI systems.

The Future of Edge Computing

According to market research firm IDC’s Edge Computing Spending Guide, worldwide edge spending will grow to $274 billion in 2025 and is expected to continue growing each year at a compound annual growth rate of 15.6 percent [3].

The evolution of AI, IoT and 5G will continue to catalyze the adoption of edge computing. The number of use cases and the types of workloads deployed at the edge will grow. Today, the most prevalent edge use cases revolve around vision AI. However, workload areas such as natural language processing, recommender systems and robotics are rapidly growing opportunities.

The possibilities at the edge are truly limitless.

Learn more about using edge computing and what to consider when deploying AI at the edge.

1. Gartner, “Building an Edge Computing Strategy,” G00753920, Sep 2021

2. IDC, “IDC FutureScape: Worldwide IT/OT Convergence 2022 Predictions,” Dec 2021

3. IDC, “New IDC Spending Guide Forecasts Double-Digit Growth for Investments in Edge Computing,” Doc #prUS48772522, Jan 2022