Artificial intelligence, like any transformative technology, is a work in progress — continually growing in its capabilities and its societal impact. Trustworthy AI initiatives recognize the real-world effects that AI can have on people and society, and aim to channel that power responsibly for positive change.

What Is Trustworthy AI?

Trustworthy AI is an approach to AI development that prioritizes safety and transparency for those who interact with it. Developers of trustworthy AI understand that no model is perfect, and take steps to help customers and the general public understand how the technology was built, its intended use cases and its limitations.

In addition to complying with privacy and consumer protection laws, trustworthy AI models are tested for safety, security and mitigation of unwanted bias. They’re also transparent — providing information such as accuracy benchmarks or a description of the training dataset — to various audiences including regulatory authorities, developers and consumers.

Principles of Trustworthy AI

Trustworthy AI principles are foundational to NVIDIA’s end-to-end AI development. They have a simple goal: to enable trust and transparency in AI and support the work of partners, customers and developers.

Privacy: Complying With Regulations, Safeguarding Data

AI is often described as data hungry. In general, the more data an algorithm is trained on, the more accurate its predictions.

But data has to come from somewhere. To develop trustworthy AI, it’s key to consider not just what data is legally available to use, but what data is socially responsible to use.

Developers of AI models that rely on data such as a person’s image, voice, artistic work or health records should evaluate whether individuals have provided appropriate consent for their personal information to be used in this way.

For institutions like hospitals and banks, building AI models means balancing the responsibility of keeping patient or customer data private with the need to train a robust algorithm. NVIDIA has created technology that enables federated learning, where researchers develop AI models trained on data from multiple institutions without confidential information leaving each institution’s private servers.

NVIDIA DGX systems and NVIDIA FLARE software have enabled several federated learning projects in healthcare and financial services, facilitating secure collaboration by multiple data providers on more accurate, generalizable AI models for medical image analysis and fraud detection.
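The core idea of federated learning can be shown with a minimal sketch of federated averaging on a toy one-parameter model. This is an illustration only and does not use the NVIDIA FLARE API; the function names, the two example institutions and their data are all hypothetical. Each site trains on its own records, and only the resulting model weight is shared and averaged.

```python
# Hypothetical toy example of federated averaging; not NVIDIA FLARE code.

def local_update(weight, data, lr=0.1, epochs=20):
    """Run gradient descent for y = weight * x on one site's private data."""
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_average(site_weights, site_sizes):
    """Combine site weights, weighting each by its number of samples."""
    total = sum(site_sizes)
    return sum(w * n for w, n in zip(site_weights, site_sizes)) / total


def train_federated(sites, rounds=5):
    """Only model weights leave each site; raw records stay local."""
    global_weight = 0.0
    for _ in range(rounds):
        local_weights = [local_update(global_weight, data) for data in sites]
        global_weight = federated_average(local_weights, [len(d) for d in sites])
    return global_weight


# Two hypothetical institutions whose private data follows y = 3x.
hospital_a = [(1.0, 3.0), (2.0, 6.0)]
hospital_b = [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)]
global_weight = train_federated([hospital_a, hospital_b])
```

In this sketch the coordinator never sees `hospital_a` or `hospital_b` directly. It receives one number per site per round, which is the property that lets institutions collaborate without pooling confidential records.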

Safety and Security: Avoiding Unintended Harm, Malicious Threats

Once deployed, AI systems have real-world impact, so it’s essential they perform as intended to preserve user safety.

The freedom to use publicly available AI algorithms creates immense possibilities for positive applications, but also means the technology can be used for unintended purposes.

To help mitigate risks, NVIDIA NeMo Guardrails keeps AI language models on track by allowing enterprise developers to set boundaries for their applications. Topical guardrails ensure that chatbots stick to specific subjects. Safety guardrails set limits on the language and data sources the apps use in their responses. Security guardrails seek to prevent malicious use of a large language model that’s connected to third-party applications or application programming interfaces.
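To make the idea of a topical guardrail concrete, here is a minimal sketch that checks a user message against a list of allowed topics before any model is called. It is a simplified keyword filter, not the NeMo Guardrails API; the topic list, the refusal text and the `echo_model` stand-in are assumptions made for illustration.

```python
# Hypothetical keyword-based topical guardrail; not the NeMo Guardrails API.

ALLOWED_TOPICS = {
    "billing": {"invoice", "refund", "payment"},
    "shipping": {"delivery", "tracking", "package"},
}
REFUSAL = "Sorry, I can only help with billing or shipping questions."


def tokenize(text):
    """Lowercase the text, drop punctuation and split it into a set of words."""
    cleaned = "".join(c if c.isalnum() else " " for c in text.lower())
    return set(cleaned.split())


def match_topic(message):
    """Return the first allowed topic whose keywords appear in the message."""
    words = tokenize(message)
    for topic, keywords in ALLOWED_TOPICS.items():
        if words & keywords:
            return topic
    return None


def guarded_reply(message, model):
    """Call the model only when the message is on an allowed topic."""
    if match_topic(message) is None:
        return REFUSAL
    return model(message)


def echo_model(message):
    """Stand-in for a language model call."""
    return f"[model answer to: {message}]"
```

Calling `guarded_reply("Where is my package?", echo_model)` passes the message through to the model, while an off-topic request such as `"Tell me a joke"` returns the refusal text. A production guardrail would rely on far more robust topic detection than keyword matching.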

NVIDIA Research is working with the DARPA-run SemaFor program to help digital forensics experts identify AI-generated images. Last year, researchers published a novel method for addressing social bias using ChatGPT. They’re also creating methods for avatar fingerprinting — a way to detect if someone is using an AI-animated likeness of another individual without their consent.

To protect data and AI applications from security threats, NVIDIA H100 and H200 Tensor Core GPUs are built with confidential computing, which ensures sensitive data is protected while in use, whether deployed on premises, in the cloud or at the edge. NVIDIA Confidential Computing uses hardware-based security methods to ensure unauthorized entities can’t view or modify data or applications while they’re running — traditionally a time when data is left vulnerable.

Transparency: Making AI Explainable

To create a trustworthy AI model, the algorithm can’t be a black box — its creators, users and stakeholders must be able to understand how the AI works to trust its results.

Transparency in AI is a set of best practices, tools and design principles that helps users and other stakeholders understand how an AI model was trained and how it works. Explainable AI, or XAI, is a subset of transparency covering tools that inform stakeholders how an AI model makes certain predictions and decisions.

Transparency and XAI are crucial to establishing trust in AI systems, but there’s no universal solution to fit every kind of AI model and stakeholder. Finding the right solution involves a systematic approach to identify who the AI affects, analyze the associated risks and implement effective mechanisms to provide information about the AI system.

Retrieval-augmented generation, or RAG, is a technique that advances AI transparency by connecting generative AI services to authoritative external databases, enabling models to cite their sources and provide more accurate answers. NVIDIA is helping developers get started with a RAG workflow that uses the NVIDIA NeMo framework for developing and customizing generative AI models.
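The retrieval step that lets a RAG system cite its sources can be sketched in a few lines. The example below is a toy illustration, not the NVIDIA NeMo workflow: it ranks passages by simple word overlap instead of embeddings, and the document store, source IDs and prompt wording are hypothetical.

```python
# Hypothetical toy retrieval step for RAG; no real model or NeMo API is used.

DOCUMENTS = {
    "returns-policy": "Items can be returned within 30 days of purchase.",
    "warranty-terms": "The warranty covers hardware defects for two years.",
}


def tokenize(text):
    """Lowercase the text, drop punctuation and split it into a set of words."""
    cleaned = "".join(c if c.isalnum() else " " for c in text.lower())
    return set(cleaned.split())


def retrieve(question, documents):
    """Return the (source_id, passage) pair with the largest word overlap."""
    q_words = tokenize(question)
    return max(documents.items(), key=lambda item: len(q_words & tokenize(item[1])))


def build_prompt(question, documents):
    """Attach the retrieved passage and its source ID to the model prompt."""
    source_id, passage = retrieve(question, documents)
    prompt = "\n".join([
        f"Context [{source_id}]: {passage}",
        f"Question: {question}",
        "Cite the source ID in your answer.",
    ])
    return prompt, source_id
```

Because the source ID travels with the passage into the prompt, the application can show users where an answer came from, which is the transparency benefit described above.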

NVIDIA is also part of the National Institute of Standards and Technology’s U.S. Artificial Intelligence Safety Institute Consortium, or AISIC, to help create tools and standards for responsible AI development and deployment. As a consortium member, NVIDIA will promote trustworthy AI by leveraging best practices for implementing AI model transparency.

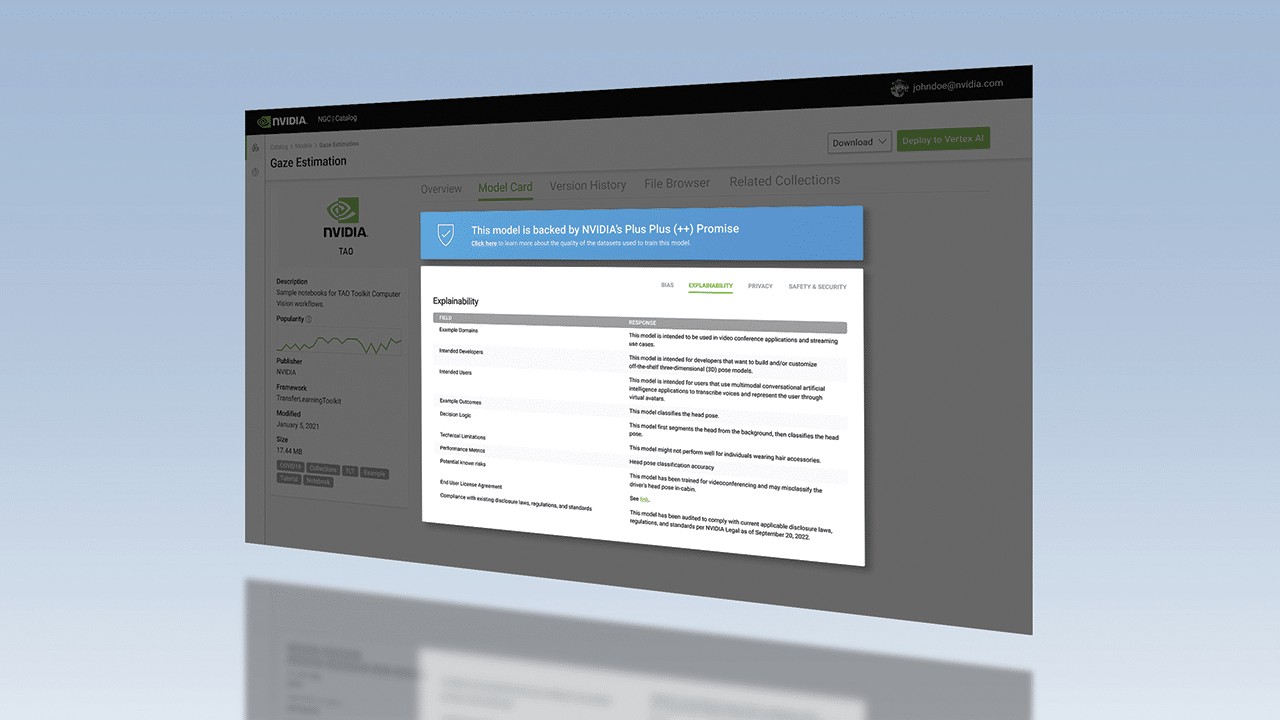

And on NVIDIA’s hub for accelerated software, NGC, model cards offer detailed information about how each AI model works and was built. NVIDIA’s Model Card ++ format describes the datasets, training methods and performance measures used, along with licensing information and specific ethical considerations.

Nondiscrimination: Minimizing Bias

AI models are trained by humans, often using data that is limited by size, scope and diversity. To ensure that all people and communities have the opportunity to benefit from this technology, it’s important to reduce unwanted bias in AI systems.

Beyond following government guidelines and antidiscrimination laws, trustworthy AI developers mitigate potential unwanted bias by looking for clues and patterns that suggest an algorithm is discriminatory, or involves the inappropriate use of certain characteristics. Racial and gender bias in data are well known, but other considerations include cultural bias and bias introduced during data labeling. To reduce unwanted bias, developers might incorporate different variables into their models.

Synthetic datasets offer one solution to reduce unwanted bias in training data used to develop AI for autonomous vehicles and robotics. If data used to train self-driving cars underrepresents uncommon scenes such as extreme weather conditions or traffic accidents, synthetic data can help augment the diversity of these datasets to better represent the real world, helping improve AI accuracy.
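As a rough illustration of how a team might decide where synthetic data is needed, the sketch below counts how often each scene type appears in a labeled dataset and reports how many synthetic samples would bring every type up to a chosen minimum. The scene labels, counts and target value are hypothetical, and the sketch does not generate any data itself.

```python
# Hypothetical example: finding underrepresented scene types in a dataset.
from collections import Counter


def scene_counts(labels):
    """Count how many samples carry each scene label."""
    return Counter(labels)


def synthetic_needed(counts, target):
    """Return how many synthetic samples each scene type needs to reach `target`."""
    return {scene: max(0, target - n) for scene, n in counts.items()}


# Assumed label distribution for a small driving dataset.
labels = ["clear"] * 90 + ["snow"] * 7 + ["accident"] * 3
counts = scene_counts(labels)
plan = synthetic_needed(counts, target=30)
```

Here `plan` asks for no extra clear-weather samples, 23 snow scenes and 27 accident scenes. A real pipeline would also weigh how realistic and varied the generated scenes are, not just how many there are.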

NVIDIA Omniverse Replicator, a framework built on the NVIDIA Omniverse platform for creating and operating 3D pipelines and virtual worlds, helps developers set up custom pipelines for synthetic data generation. And by integrating the NVIDIA TAO Toolkit for transfer learning with Innotescus, a web platform for curating unbiased datasets for computer vision, developers can better understand dataset patterns and biases to help address statistical imbalances.

Learn more about trustworthy AI on NVIDIA.com and the NVIDIA Blog. For more on tackling unwanted bias in AI, watch this talk from NVIDIA GTC and attend the trustworthy AI track at the upcoming conference, taking place March 18-21 in San Jose, Calif., and online.