Editor’s note: We’ve updated our original post on the differences between GPUs and CPUs, authored by Kevin Krewell, and published in December 2009.

The CPU (central processing unit) has been called the brains of a PC. The GPU its soul. Over the past decade, however, GPUs have broken out of the boxy confines of the PC.

GPUs have ignited a worldwide AI boom. They’ve become a key part of modern supercomputing. They’ve been woven into sprawling new hyperscale data centers. Still prized by gamers, they’ve become accelerators speeding up all sorts of tasks from encryption to networking to AI.

And they continue to drive advances in gaming and pro graphics inside workstations, desktop PCs and a new generation of laptops.

What Is a GPU?

While GPUs (graphics processing units) are now about a lot more than the PCs in which they first appeared, they remain anchored in a much older idea called parallel computing. And that’s what makes GPUs so powerful.

CPUs, to be sure, remain essential. Fast and versatile, CPUs race through a series of tasks requiring lots of interactivity, such as calling up information from a hard drive in response to a user’s keystrokes.

By contrast, GPUs break complex problems into thousands or millions of separate tasks and work them out at once.

That makes them ideal for graphics, where textures, lighting and the rendering of shapes have to be done at once to keep images flying across the screen.
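To make the idea concrete, here is a minimal Python sketch built on a hypothetical per-pixel lighting step (`shade` and its `light` parameter are illustrative assumptions, not how any real renderer works). Because each pixel’s result depends only on that pixel’s input, the frame can be split into as many independent tasks as there are workers, and the answer is the same as processing pixels one by one. Python threads only illustrate the split; they do not deliver GPU-class speedups.

```python
from concurrent.futures import ThreadPoolExecutor


def shade(pixel: float, light: float = 0.8) -> float:
    """Hypothetical lighting step: scale a brightness value, clamped to [0, 1]."""
    return min(1.0, max(0.0, pixel * light))


def shade_serial(pixels: list[float]) -> list[float]:
    # CPU-style: work through the pixels one after another.
    return [shade(p) for p in pixels]


def shade_parallel(pixels: list[float], workers: int = 8) -> list[float]:
    # GPU-style decomposition: every pixel is an independent task,
    # so the pool may run them in any order; map() keeps results in order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(shade, pixels))


frame = [i / 10 for i in range(11)]
# Splitting the work changes how it runs, not what it computes.
assert shade_serial(frame) == shade_parallel(frame)
```

The decomposition only works because no pixel needs another pixel’s result; work where each step depends on the previous one cannot be spread out this way without restructuring it.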

CPU vs GPU

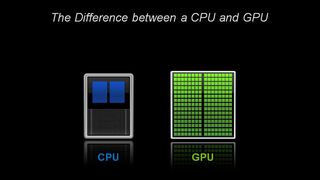

| CPU | GPU |
|---|---|
| Central Processing Unit | Graphics Processing Unit |
| Several cores | Many cores |
| Low latency | High throughput |
| Good for serial processing | Good for parallel processing |
| Can do a handful of operations at once | Can do thousands of operations at once |

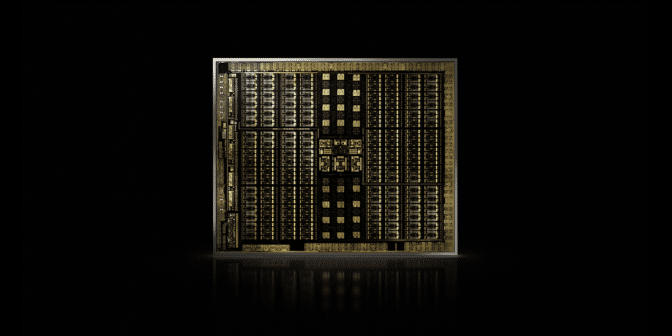

Architecturally, the CPU is composed of just a few cores with lots of cache memory that can handle a few software threads at a time. In contrast, a GPU is composed of hundreds of cores that can handle thousands of threads simultaneously.

GPUs deliver the once-esoteric technology of parallel computing. It’s a technology with an illustrious pedigree that includes names such as supercomputing genius Seymour Cray. But rather than taking the shape of hulking supercomputers, GPUs put this idea to work in the desktops and gaming consoles of more than a billion gamers.

For GPUs, Computer Graphics First of Many Apps

That application, computer graphics, was just the first of several killer apps. And it has powered the huge R&D engine behind GPUs. All this has enabled GPUs to race ahead of more specialized, fixed-function chips serving niche markets.

Another factor making all that power accessible: CUDA. First released in 2007, the parallel computing platform lets coders take advantage of the computing power of GPUs for general purpose processing by inserting a few simple commands into their code.
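In CUDA C++, each thread of a kernel typically finds the data element it owns from built-in variables, as `blockIdx.x * blockDim.x + threadIdx.x`. So that the example runs without a GPU, the minimal sketch below only emulates that indexing scheme in plain Python: `saxpy_kernel` and `launch` are hypothetical helpers, not CUDA or library APIs, and the nested loops stand in for threads a GPU would run concurrently.

```python
import math


def saxpy_kernel(block_idx: int, block_dim: int, thread_idx: int,
                 a: float, x: list[float], y: list[float]) -> None:
    """Body of one emulated thread: y[i] = a * x[i] + y[i] for its own i."""
    # Same global-index formula a CUDA kernel uses:
    # blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    if i < len(x):  # guard: the last block may have spare threads
        y[i] = a * x[i] + y[i]


def launch(kernel, n: int, block_dim: int, *args) -> None:
    """Hypothetical stand-in for a kernel<<<grid, block>>> launch.
    A GPU runs these threads in parallel; here we simply loop over them."""
    grid_dim = math.ceil(n / block_dim)  # enough blocks to cover n elements
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [10.0, 20.0, 30.0, 40.0, 50.0]
launch(saxpy_kernel, len(x), 2, 2.0, x, y)  # 3 blocks of 2 threads
print(y)  # [12.0, 24.0, 36.0, 48.0, 60.0]
```

The bounds check matters because the grid is rounded up to whole blocks: five elements with two threads per block need three blocks, which leaves one thread with nothing to do.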

That’s let GPUs proliferate in surprising new fields. And with support for a fast-growing number of technologies, such as Kubernetes and Docker, applications can be tested on a low-cost desktop GPU and scaled out to faster, more sophisticated server GPUs, as well as to GPUs at every major cloud service provider.

CPUs and the End of Moore’s Law

With Moore’s law winding down, GPUs, invented by NVIDIA in 1999, came just in time.

Moore’s law posits that the number of transistors that can be crammed into an integrated circuit will double about every two years. For decades, that’s driven a rapid increase in computing power. That law, however, has run up against hard physical limits.
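Written as a formula, assuming an idealized doubling period of exactly two years (the symbols $N_0$, the starting transistor count, and $t$, elapsed years, are introduced here for illustration), the growth compounds quickly: ten doublings over 20 years multiply the count about a thousandfold.

```latex
N(t) = N_0 \cdot 2^{t/2}
\quad\Longrightarrow\quad
N(20) = N_0 \cdot 2^{10} = 1024\,N_0
```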

GPUs offer a way to continue accelerating applications, such as graphics, supercomputing and AI, by dividing tasks among many processors. Such accelerators are critical to the future of semiconductors, according to John Hennessy and David Patterson, winners of the 2017 A.M. Turing Award and authors of Computer Architecture: A Quantitative Approach, the seminal textbook on microprocessors.

GPUs: Key to AI, Computer Vision, Supercomputing and More

Over the past decade, that approach has proven key to a growing range of applications.

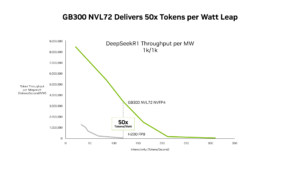

GPUs perform much more work for every unit of energy than CPUs. That makes them key to supercomputers that would otherwise push past the limits of today’s electrical grids.

In AI, GPUs have become key to a technology called “deep learning.” Deep learning pours vast quantities of data through neural networks, training them to perform tasks too complicated for any human coder to describe.
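“Training” means repeatedly nudging a network’s weights to shrink its error on example data. The minimal sketch below assumes the smallest possible “network”: a single neuron with one weight `w` and one bias `b`, fit by gradient descent to made-up toy data generated from y = 2x + 1. The function name `train` and its learning-rate and epoch defaults are illustrative assumptions; real deep learning networks have millions or billions of weights, and the resulting flood of matrix arithmetic is what GPUs parallelize.

```python
def train(data: list[tuple[float, float]], lr: float = 0.05,
          epochs: int = 2000) -> tuple[float, float]:
    """Fit y ~ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # start knowing nothing
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y        # prediction error for one example
            grad_w += 2 * err * x / n    # d(MSE)/dw
            grad_b += 2 * err / n        # d(MSE)/db
        # Step both parameters downhill along the gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


data = [(x, 2 * x + 1) for x in [0.0, 0.5, 1.0, 1.5, 2.0]]
w, b = train(data)
print(f"w={w:.3f}, b={b:.3f}")  # w=2.000, b=1.000
```

Nobody wrote the rule y = 2x + 1 into the model; it recovered the weight and bias from the examples alone, which is the same principle deep learning applies at vastly larger scale.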

AI and Gaming: GPU-Powered Deep Learning Comes Full Circle

That deep learning capability is accelerated by the dedicated Tensor Cores in NVIDIA GPUs. Tensor Cores accelerate the large matrix operations at the heart of AI, performing mixed-precision matrix multiply-and-accumulate calculations in a single operation. Not only does that speed up traditional AI tasks of all kinds, it’s now being tapped to accelerate gaming.
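A Tensor Core multiply-and-accumulate computes D = A × B + C on small matrix tiles. In one common configuration the inputs A and B are FP16 while the sums are accumulated at FP32. The sketch below models only that arithmetic, not the hardware: `to_fp16` and `mma` are hypothetical names, Python’s `struct` format `"e"` rounds values to IEEE half precision, and ordinary Python floats (64-bit) stand in for the wider accumulator as a simplifying assumption. Tile sizes and performance are not modeled.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def mma(a: list[list[float]], b: list[list[float]],
        c: list[list[float]]) -> list[list[float]]:
    """D = A x B + C with A and B rounded to FP16, products summed at higher
    precision (Python's 64-bit floats stand in for the wide accumulator)."""
    n, k, m = len(a), len(b), len(b[0])
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    d = []
    for i in range(n):
        row = []
        for j in range(m):
            acc = c[i][j]  # start from the accumulator input C
            for p in range(k):
                acc += a16[i][p] * b16[p][j]  # multiply, then accumulate
            row.append(acc)
        d.append(row)
    return d


A = [[1.0, 2.0], [3.0, 4.0]]
B = [[5.0, 6.0], [7.0, 8.0]]
C = [[0.5, 0.5], [0.5, 0.5]]
print(mma(A, B, C))    # [[19.5, 22.5], [43.5, 50.5]]
print(to_fp16(0.1))    # 0.0999755859375
print(to_fp16(2049.0)) # 2048.0
```

The last line hints at why accumulation happens at higher precision: FP16 cannot represent odd integers above 2048, so long running sums kept in FP16 would quietly drop small contributions.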

In the automotive industry, GPUs offer many benefits. They provide unmatched image recognition capabilities, as you would expect. But they’re also key to creating self-driving vehicles able to learn from — and adapt to — a vast number of different real-world scenarios.

In robotics, GPUs are key to enabling machines to perceive their environment, as you would expect. Their AI capabilities, however, have become key to machines that can learn complex tasks, such as navigating autonomously.

In healthcare and life sciences, GPUs offer many benefits. They’re ideal for imaging tasks, of course. But GPU-based deep learning speeds the analysis of those images. They can crunch medical data and help turn that data, through deep learning, into new capabilities.

In short, GPUs have become essential. They began by accelerating gaming and graphics. Now they’re accelerating more and more areas where computing horsepower will make a difference.
