A multi-hospital initiative sparked by the COVID-19 crisis has shown that, by working together, institutions in any industry can develop predictive AI models that set a new standard for both accuracy and generalizability.

Published today in Nature Medicine, a leading peer-reviewed healthcare journal, the collaboration demonstrates how privacy-preserving federated learning techniques can enable the creation of robust AI models that work well across organizations, even in industries constrained by confidential or sparse data.

“Usually in AI development, when you create an algorithm on one hospital’s data, it doesn’t work well at any other hospital,” said Dr. Ittai Dayan, first author on the study, who led AI development at Mass General Brigham and this year founded healthcare startup Rhino Health.

“But by developing our model using federated learning and objective, multimodal data from different continents, we were able to build a generalizable model that can help frontline physicians worldwide,” he said.

Other large-scale federated learning projects are already underway in the healthcare industry, including a five-member study for mammogram assessment and pharmaceutical giant Bayer’s work training an AI model for spleen segmentation.

Beyond healthcare, federated learning can help energy companies analyze seismic and wellbore data, financial firms improve fraud detection models, and autonomous vehicle researchers develop AI that generalizes to different countries’ driving behaviors.

Federated Learning: AI Takes a Village

Companies and research institutions developing AI models are typically limited by the data available to them. This can mean that smaller organizations or niche research areas lack enough data to train an accurate predictive model. Even large datasets can be biased by an organization’s patient or customer demographics, specific data-recording methods or even the brand of scientific equipment used.

To gather enough training data for a robust, generalizable model, most organizations would need to pool data with their peers. But in many cases, data privacy regulations limit the ability to directly share data — like patient medical records or proprietary datasets — on a common supercomputer or cloud server.

That’s where federated learning comes in.

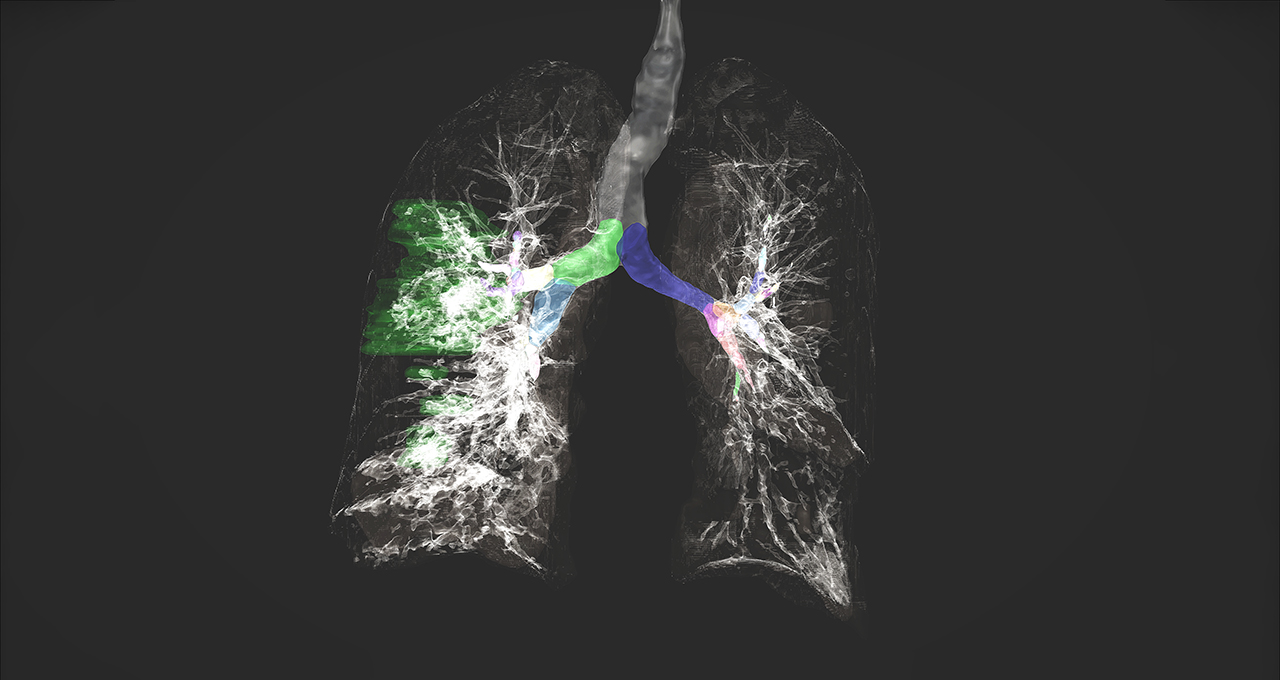

Dubbed EXAM (for EMR CXR AI Model), the new study in Nature Medicine, led by Mass General Brigham and NVIDIA, brought 20 hospitals across five continents together to train a neural network that predicts the level of supplemental oxygen a patient with COVID-19 symptoms may need 24 and 72 hours after arriving at a point-of-care setting such as the emergency department. It’s among the largest, most diverse clinical federated learning studies to date.

Many Hands Make AI Work

Federated learning enabled the EXAM collaborators to create an AI model that learned from every participating hospital’s chest X-ray images, patient vitals, demographic data and lab values, without ever seeing the private data housed on each location’s own server.

Every hospital trained a copy of the same neural network on local NVIDIA GPUs. During training, each hospital periodically sent only its updated model weights to a centralized server, which aggregated them into a new global version of the neural network.

It’s like sharing the answer key to an exam without revealing any of the study material used to come up with the answers.
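For readers who want to see the mechanics, the loop described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the EXAM implementation: the model is a single weight `w` fit to `y = w * x`, the three "sites" and their data are made up, and the size-weighted average in `aggregate` is one common aggregation scheme (often called federated averaging) that the study is not confirmed here to have used. The names `local_train`, `aggregate` and `run_rounds` are hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # one private training example: (x, y)


def local_train(w: float, data: List[Sample], lr: float = 0.01, steps: int = 5) -> float:
    """Run a few gradient-descent steps on one site's private data.

    Only the updated weight is returned; the data never leaves this function.
    """
    for _ in range(steps):
        # Gradient of mean squared error for the model y = w * x.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def aggregate(local_ws: List[float], sizes: List[int]) -> float:
    """Server step: average the site weights, weighted by dataset size (assumed scheme)."""
    total = sum(sizes)
    return sum(w * n for w, n in zip(local_ws, sizes)) / total


def run_rounds(sites: List[List[Sample]], rounds: int = 50, w0: float = 0.0) -> float:
    """Alternate local training and server aggregation for a number of rounds."""
    w = w0
    for _ in range(rounds):
        # Each site starts from the current global weight and trains locally.
        local_ws = [local_train(w, data) for data in sites]
        # The server sees only weights and dataset sizes, never the samples.
        w = aggregate(local_ws, [len(data) for data in sites])
    return w


# Made-up data for three hypothetical sites, all drawn from y = 2 * x.
sites = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(0.5, 1.0), (1.5, 3.0), (2.5, 5.0)],
]

final_w = run_rounds(sites)
print(final_w)  # approaches 2.0
```

The point of the sketch is the data flow: `run_rounds` passes weights in and out of each site, while the `(x, y)` samples stay inside `local_train`. A real deployment swaps the single weight for a full neural network and adds secure communication between sites and server.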

“The results of the EXAM initiative show it’s possible to train high performing and generalizable AI models in healthcare without private identifiable data exchanging hands, thus upholding data privacy,” said Dr. Brad Wood, coauthor and director of the NIH Center for Interventional Oncology and Chief of Interventional Radiology at the NIH Clinical Center.

“The findings are impactful well beyond this cross-hospital model for COVID-19 predictions, and showcase federated learning as a promising solution for the field in general,” he continued. “This provides the framework toward more effective and compliant big data sharing, which may be required to realize the potential of AI deep learning in medicine.”

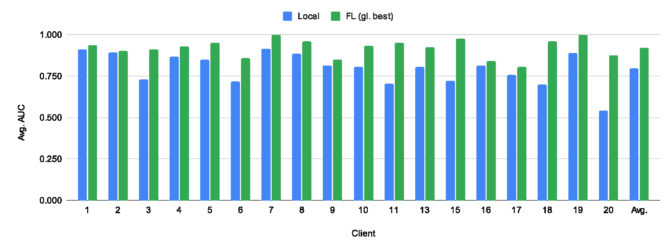

The global EXAM model, shared with all participating sites, improved the AI model’s average performance by 16 percent. Researchers also saw an average increase of 38 percent in generalizability compared with models trained at any single site.

The performance boost was especially dramatic for hospitals with smaller datasets, as shown in the chart above.

“Federated learning allows researchers all over the world to collaborate on a common objective: to develop a model that learns from and generalizes to everyone’s data,” said Sira Sriswasdi, co-director of the Center for AI in Medicine at Chulalongkorn University and King Chulalongkorn Memorial Hospital in Thailand, one of the 20 hospitals that collaborated on EXAM. “With NVIDIA GPUs and the NVIDIA Clara software, participating in the study was an easy process that yielded impactful results.”

Hospitals, Startups Pursue Further EXAMination

Bringing together collaborators across North and South America, Europe and Asia, the original EXAM study took just two weeks of training to achieve high-quality prediction of patient oxygen needs, an insight that can help physicians determine the level of care a patient requires.

Since then, its collaborators have validated that the AI model can generalize and perform well in settings independent of the sites that helped build and train it. Three additional hospitals in Massachusetts (Cooley Dickinson Hospital, Martha’s Vineyard Hospital and Nantucket Cottage Hospital) tested EXAM and found that the neural network performed well on their independent, unseen data, too.

Cooley Dickinson Hospital found that the model predicted ventilator need within 24 hours of a patient’s arrival in the emergency room with a sensitivity of 95 percent and a specificity of over 88 percent. Similar results were found in the U.K., at Addenbrooke’s Hospital in Cambridge.
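To make the sensitivity and specificity figures above concrete, here is a minimal sketch of how the two metrics are computed from a confusion matrix. The patient counts are made up purely to illustrate the arithmetic and are not data from the study; the function names are hypothetical.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Share of patients who needed a ventilator that the model correctly flagged."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg: int, false_pos: int) -> float:
    """Share of patients who did not need a ventilator that the model correctly cleared."""
    return true_neg / (true_neg + false_pos)


# Hypothetical counts, NOT from the EXAM study:
# 20 patients needed a ventilator, 100 did not.
true_pos, false_neg = 19, 1    # 19 of the 20 were flagged
true_neg, false_pos = 88, 12   # 88 of the 100 were correctly cleared

print(f"sensitivity = {sensitivity(true_pos, false_neg):.2f}")
print(f"specificity = {specificity(true_neg, false_pos):.2f}")
```

Read this way, high sensitivity means few patients who go on to need a ventilator are missed, while high specificity means few patients are flagged unnecessarily.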

Mass General Brigham plans to deploy EXAM in the near future, said Dr. Quanzheng Li, scientific director of the MGH & BWH Center for Clinical Data Science, who developed the original model. Along with Lahey Hospital & Medical Center and the U.K.’s NIHR Cambridge Biomedical Research Center, the hospital network is also working with NVIDIA Inception startup Rhino Health to run prospective studies using EXAM.

The original EXAM model was trained retrospectively using records of past COVID-19 patients, so researchers already had the ground-truth data on how much oxygen a patient ended up needing. This prospective research instead applies the AI model to data from new patients coming into the hospital, a further step toward deployment in a real-world setting.

“Federated learning has transformative power to bring AI innovation to the clinical workflow,” said Fiona Gilbert, chair of radiology at the University of Cambridge School of Medicine. “Our continued work with EXAM aims to make these kinds of global collaborations repeatable and more efficient, so that we can meet clinicians’ needs to tackle complex health challenges and future epidemics.”

The EXAM model is publicly available for research use through the NVIDIA NGC software hub. Businesses and research institutions getting started with federated learning can use the NVIDIA AI Enterprise software suite of AI tools and frameworks, optimized to run on NVIDIA-Certified Systems.

Learn more about the science behind federated learning in this paper, and read our whitepaper for an introduction to federated learning using the NVIDIA Clara AI platform.
