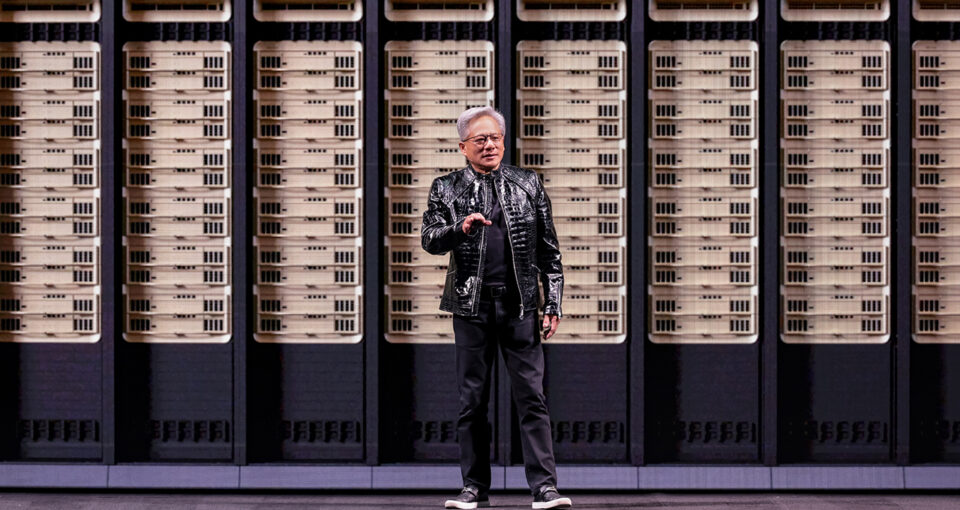

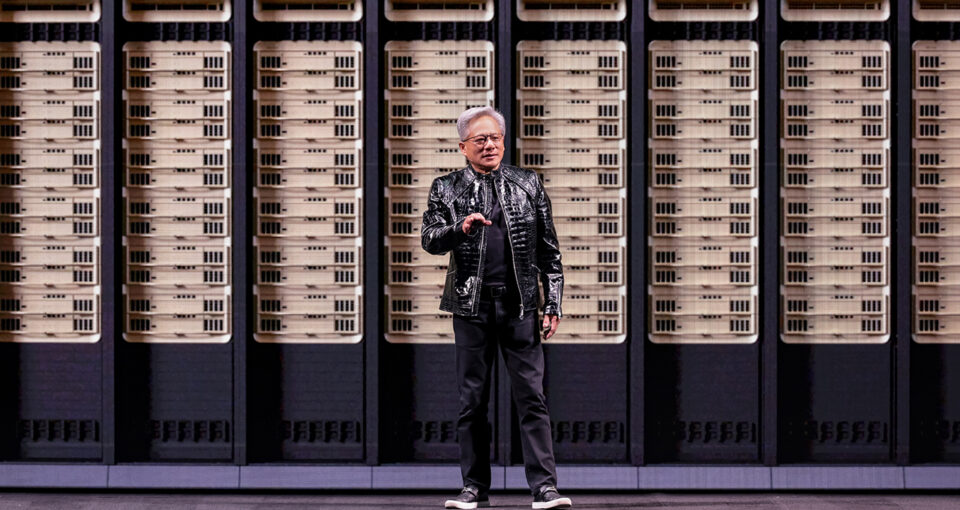

NVIDIA founder and CEO Jensen Huang took the stage at the Fontainebleau Las Vegas to open CES 2026,… Read Article

The Hao AI Lab research team at the University of California San Diego — at the forefront of pioneering AI model innovation — recently received an NVIDIA DGX B200 system… Read Article

Five finalists for the esteemed high-performance computing award have achieved breakthroughs in climate modeling, fluid simulation and more with the Alps, JUPITER and Perlmutter supercomputers — with two winners taking… Read Article

Where CPUs once ruled, power efficiency, and then AI, flipped the balance. Extreme co-design across GPUs, networking and software now drives the frontier of science… Read Article

At SC25, NVIDIA unveiled advances across NVIDIA BlueField DPUs, next-generation networking, quantum computing, national research, AI physics and more, as accelerated systems drive the next chapter in AI supercomputing… Read Article

Across quantum physics, digital biology and climate research, the world’s researchers are harnessing a universal scientific instrument to chart new frontiers of discovery: accelerated computing. At this week’s SC25 conference… Read Article

To power future technologies including liquid-cooled data centers, high-resolution digital displays and long-lasting batteries, scientists are searching for novel chemicals and materials optimized for factors like energy use, durability and… Read Article

NVIDIA Apollo — a family of open models for accelerating industrial and computational engineering — was introduced today at the SC25 conference in St. Louis. Accelerated by NVIDIA AI infrastructure,… Read Article

The race to bottle a star now runs on AI. NVIDIA, General Atomics and a team of international partners have built a high-fidelity, AI-enabled digital twin for a fusion reactor… Read Article