Hybrid cloud has become the dominant architecture for enterprises seeking to extend their compute capabilities by using public clouds while maintaining on-premises clusters that are fully interoperable with their cloud service providers.

Today, we’re reporting advances in offloading data communication tasks from the CPU, which deliver a significant performance boost for VMware vSphere-enabled data centers.

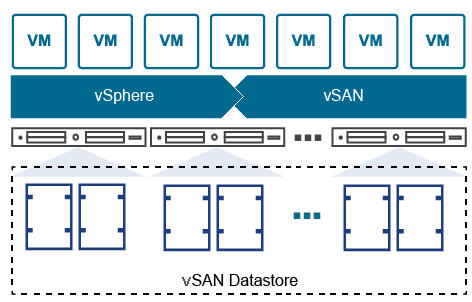

To meet demand, deliver services and allocate resources efficiently, enterprise IT teams have deployed hyperconverged architectures that use the same servers for both compute and storage. These architectures combine three core technologies: software-defined compute (server virtualization), software-defined networking and software-defined storage (SDS). Together, they enable a software-defined data center. High-performance Ethernet for server-to-server and server-to-storage communication has also been widely adopted.

SDS aggregates local, direct-attached storage devices into a single storage pool shared across all hosts in the hyperconverged cluster, using faster flash storage as cache and inexpensive HDDs to maximize capacity.
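To make the pooling idea concrete, here is a minimal sketch of how per-host devices add up to one shared datastore. It assumes the two-tier layout described above, where flash serves only as cache and only capacity-tier devices contribute usable space; the `HostDisks` class, host names and device sizes are hypothetical and not part of any vSAN API.

```python
from dataclasses import dataclass, field


@dataclass
class HostDisks:
    """Hypothetical local devices on one host in a two-tier hyperconverged cluster."""
    name: str
    cache_tb: float  # flash cache device: accelerates I/O, adds no usable capacity
    capacity_tb: list[float] = field(default_factory=list)  # capacity-tier devices (e.g. HDDs)


def pooled_capacity_tb(hosts: list[HostDisks]) -> float:
    # The shared datastore is the sum of every host's capacity-tier devices;
    # cache devices are deliberately excluded from the total.
    return sum(sum(h.capacity_tb) for h in hosts)


if __name__ == "__main__":
    # Hypothetical four-host cluster, each host with one cache SSD and four 2TB HDDs.
    cluster = [HostDisks(f"esx-{i}", cache_tb=0.8, capacity_tb=[2.0] * 4) for i in range(4)]
    print(f"Shared pool: {pooled_capacity_tb(cluster):.1f} TB")
```

Every host sees the same pooled total, which is what lets any VM in the cluster place data on any host's disks.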

vSAN is VMware’s enterprise storage solution for SDS that supports hyperconverged infrastructure systems and is fully integrated with VMware vSphere as a distributed layer of software within the ESXi hypervisor.

vSAN eliminates the need for external shared storage and simplifies storage configuration through storage policy-based management. With virtual machine storage policies, users define the storage requirements and capabilities each virtual machine needs.
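As an illustration of policy-based management, the sketch below models a storage policy as a requirement that is checked against the cluster before a virtual disk is placed. It is a simplified, hypothetical model, not the vSAN or SPBM API: it covers only RAID-1 mirroring, where tolerating n failures keeps n+1 full replicas and needs 2n+1 hosts so witness components can break ties, and it ignores the small witness objects when computing capacity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StoragePolicy:
    """Hypothetical, simplified VM storage policy (not the vSAN or SPBM API)."""
    name: str
    failures_to_tolerate: int  # host failures each object must survive (RAID-1 mirroring)

    def replicas(self) -> int:
        # RAID-1 keeps one full copy per tolerated failure, plus the original.
        return self.failures_to_tolerate + 1

    def min_hosts(self) -> int:
        # Mirrored objects also need witness components, so n failures take 2n + 1 hosts.
        return 2 * self.failures_to_tolerate + 1


def place_vmdk(size_gb: float, policy: StoragePolicy, cluster_hosts: int) -> float:
    """Return the raw capacity a virtual disk consumes under `policy`, or raise if the cluster cannot comply."""
    if cluster_hosts < policy.min_hosts():
        raise ValueError(
            f"policy {policy.name!r} needs {policy.min_hosts()} hosts, cluster has {cluster_hosts}"
        )
    # Witness components are small metadata objects and are ignored here.
    return size_gb * policy.replicas()


if __name__ == "__main__":
    gold = StoragePolicy("gold", failures_to_tolerate=1)
    print(place_vmdk(100, gold, cluster_hosts=8))
```

The point of the design is that the administrator states *what* a VM needs (here, how many failures to survive) and the platform derives *how* to lay out the data and whether the cluster can satisfy it.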

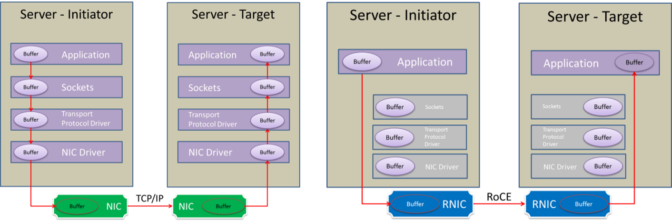

RDMA (remote direct memory access) is an innovative technology that boosts data communication performance and efficiency. It moves data directly between the memory of servers and storage without involving the operating system or burdening the server’s CPU. As a result, throughput increases, latency drops and the CPU is freed to run applications.
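The difference between the two data paths can be shown with a toy model. This is a conceptual simulation only, not the RDMA verbs API: `MemoryRegion`, `Host`, `socket_receive` and `rdma_write` are hypothetical names. It assumes a one-sided RDMA WRITE, where the receiver pre-registers a buffer and hands out a remote key (`rkey`), after which the sender's NIC can place data directly in that buffer without the receiving CPU doing any copying.

```python
from dataclasses import dataclass


@dataclass
class MemoryRegion:
    """Toy stand-in for a registered RDMA memory region (hypothetical, not the verbs API)."""
    buf: bytearray
    rkey: int  # remote key a peer must present to access this region


class Host:
    def __init__(self, name: str) -> None:
        self.name = name
        self.regions: dict[int, MemoryRegion] = {}
        self.cpu_copies = 0  # payload copies performed by this host's CPU

    def register(self, size: int, rkey: int) -> MemoryRegion:
        # Pre-register a buffer so the NIC may later access it directly.
        mr = MemoryRegion(bytearray(size), rkey)
        self.regions[rkey] = mr
        return mr

    def socket_receive(self, rkey: int, offset: int, data: bytes) -> None:
        # TCP-style path: the receiving CPU runs the network stack and copies
        # the payload from a kernel socket buffer into application memory.
        self.cpu_copies += 1
        self.regions[rkey].buf[offset:offset + len(data)] = data


def rdma_write(target: Host, rkey: int, offset: int, data: bytes) -> None:
    """Toy one-sided RDMA WRITE: the target's NIC places data directly in registered memory."""
    mr = target.regions.get(rkey)
    if mr is None or offset < 0 or offset + len(data) > len(mr.buf):
        raise PermissionError("invalid rkey or out-of-bounds access")
    # No target-side CPU work is modeled: target.cpu_copies is left unchanged.
    mr.buf[offset:offset + len(data)] = data


if __name__ == "__main__":
    server = Host("storage-node")
    server.register(16, rkey=0x1234)
    rdma_write(server, 0x1234, 0, b"vsan-block")
    print("CPU copies after RDMA write:", server.cpu_copies)
    server.socket_receive(0x1234, 0, b"tcp-block!")
    print("CPU copies after socket receive:", server.cpu_copies)
```

In this model the RDMA write leaves `cpu_copies` at zero, while the socket path increments it; real RDMA NICs enforce the same kind of key and bounds checks in hardware.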

RDMA is already widely used for efficient data transfer in render farms; large cloud deployments such as Microsoft Azure; HPC (including machine learning and deep learning); NVMe-oF and iSER-based storage; NFS over RDMA (NFSoRDMA); and mission-critical SQL databases such as Oracle RAC (Exadata), IBM DB2 pureScale, Microsoft SQL solutions and Teradata.

The chart above illustrates the efficient application-to-application communication that has led IT managers to deploy RoCE (RDMA over Converged Ethernet). RoCE uses advanced Ethernet adapters to deliver efficient RDMA over Ethernet, and since 2015 it has been widely deployed in mainstream data center applications.

For the last several years, VMware has been adding RDMA support to ESXi, including PVRDMA (paravirtual RDMA) to accelerate data transfers between virtual machines, and iSER and NVMe-oF to accelerate remote storage access. VMware’s vSANoRDMA is now fully qualified and available as of the ESXi 7.0 U2 release, making it ready for deployment.

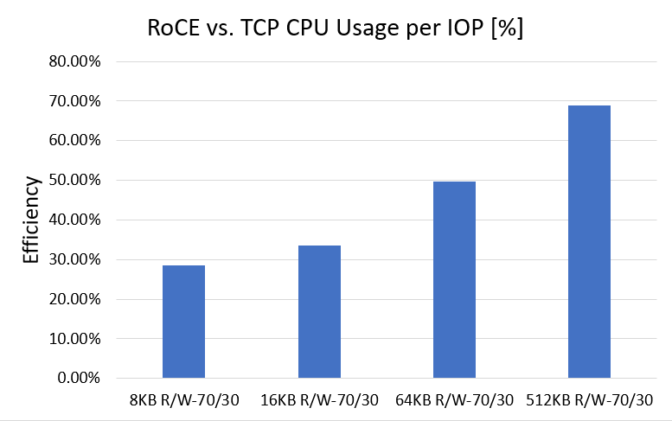

The test ran on an eight-node vSAN cluster in a RAID 1 configuration, with 76.8TB of total raw NVMe storage capacity, NVIDIA ConnectX-5 100GbE SmartNICs and an NVIDIA Spectrum SN2700 100GbE switch. Preliminary benchmark results show that vSAN over RDMA delivers up to 70 percent better CPU efficiency(1) than vSAN over TCP/IP.

Running vSAN over RoCE offloads data communication tasks from the CPU and generates a significant performance boost, which is critical in the new era of accelerated computing and its massive data transfers. As a result, I expect vSAN over RoCE to eventually replace vSAN over TCP and become the leading transport technology in vSphere-enabled data centers.

To learn more, check out these NVIDIA RDMA RoCE resources:

- How RDMA Became the Fuel for Fast Networks

- NVIDIA Mellanox Ethernet Connectivity Solutions

- Introducing VMware vSAN 7.0 U2

(1) Measured with the FIO benchmark. The CPU efficiency gain was calculated by taking the difference between the CPU usage per I/O operation over TCP/IP and over RoCE, and dividing it by the CPU usage per I/O operation over RoCE. Note that while CPU efficiency improved by up to 70 percent in these tests, IOPS or throughput will not necessarily increase by a similar percentage.
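The footnote's formula can be worked through in a few lines. The sketch below assumes "CPU usage per I/O operation" means CPU utilization divided by the IOPS sustained at that utilization; the utilization and IOPS figures are purely hypothetical inputs chosen for illustration, not the measured results from the test above.

```python
def cpu_per_io(cpu_util_pct: float, iops: float) -> float:
    """CPU usage per I/O operation: utilization divided by the I/O rate it sustained."""
    if iops <= 0:
        raise ValueError("iops must be positive")
    return cpu_util_pct / iops


def cpu_efficiency_gain(cpu_per_io_tcp: float, cpu_per_io_roce: float) -> float:
    """Footnote (1): (TCP/IP cost - RoCE cost) / RoCE cost, as a fraction."""
    return (cpu_per_io_tcp - cpu_per_io_roce) / cpu_per_io_roce


if __name__ == "__main__":
    # Hypothetical inputs (not measured results): both transports sustain the same IOPS.
    tcp = cpu_per_io(34.0, 200_000)
    roce = cpu_per_io(20.0, 200_000)
    print(f"CPU efficiency gain: {cpu_efficiency_gain(tcp, roce):.0%}")  # → CPU efficiency gain: 70%
    print(f"CPU per I/O saved by RoCE: {1 - roce / tcp:.0%}")  # → CPU per I/O saved by RoCE: 41%
```

Because the denominator is the RoCE cost, a 70 percent gain means TCP/IP spends 1.7 times as much CPU per I/O as RoCE, which is equivalent to RoCE using roughly 41 percent less CPU per I/O. In this example the IOPS are identical on both transports, which is exactly the caveat in the footnote: freed CPU cycles do not by themselves raise IOPS or throughput.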