Software ate the world. Now new silicon is taking a seat at the table.

Ten years ago venture capitalist Marc Andreessen proclaimed that “software is eating the world.”

His once-radical concept — now a truism — is that innovation and corporate value creation lie in software. That led some to believe that hardware matters less.

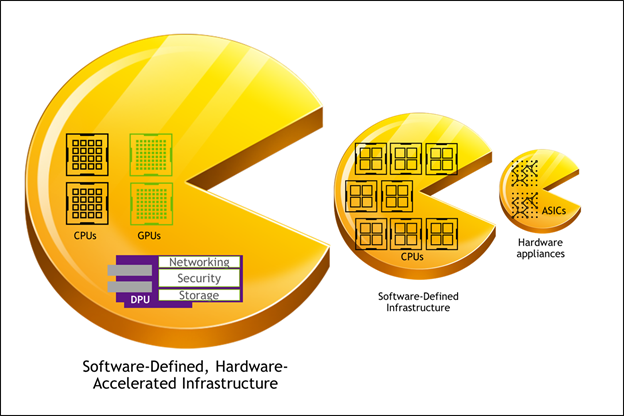

Just the opposite. It’s now clear that the software-defined revolution relies on new chip architectures — GPUs and DPUs — to continue.

One GPU can already replace a dozen or even 100 CPUs for AI and machine learning.

And one DPU can do the work of 20, 50 or even 120 CPU cores in a single chip. Future DPUs will offload the work of up to 300 CPU cores.

Hardware matters more than ever.

Hardware Matters Because AI Matters

To be sure, software remains important.

Everyone — whether in stores, factories, offices or schools — relies on software. We even use software to socialize and date.

But the next decade will be a story of every business becoming not just a software company, but an AI company.

Businesses that don’t embrace AI — the automation of automation — will fall behind.

This rush to AI has big implications.

Commodity CPUs were enough for the software transformation of the previous decade.

The GPU and DPU will be the engines that drive the AI transformation of the next.

Innovators Will Dual-Wield GPUs and DPUs

That’s because today’s workloads are very different.

- Data is growing exponentially.

- Networking speeds in the data center have accelerated 20x, to 200 gigabits per second (Gbps) from 10 Gbps.

- AI has become widespread in enterprises and the cloud.

- Applications run on many different servers at once and are always communicating with each other.

- Moore’s law has ended — CPU performance now increases only linearly and can’t keep up with the growth in everything else.
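To put the 200 Gbps figure in perspective, here is a back-of-the-envelope sketch of how much time a processor has to handle each packet at full line rate. It assumes full-size 1,500-byte frames and ignores Ethernet framing overhead (preamble and inter-frame gap). The frame size and the function names are illustrative assumptions, not figures from this article.

```python
# Back-of-the-envelope packet budget at a given line rate.
# Assumptions (illustrative): every frame is 1,500 bytes, and Ethernet
# preamble and inter-frame gap are ignored.

FRAME_BYTES = 1500  # hypothetical frame size


def packets_per_second(line_rate_gbps: float, frame_bytes: int = FRAME_BYTES) -> float:
    """Frames per second needed to keep the link saturated."""
    return line_rate_gbps * 1e9 / (frame_bytes * 8)


def ns_per_packet(line_rate_gbps: float, frame_bytes: int = FRAME_BYTES) -> float:
    """Time available to process each frame, in nanoseconds."""
    return 1e9 / packets_per_second(line_rate_gbps, frame_bytes)


for rate in (10, 200):
    print(
        f"{rate:>3} Gbps: {packets_per_second(rate) / 1e6:6.2f} M packets/s, "
        f"{ns_per_packet(rate):7.1f} ns per packet"
    )
```

Under these assumptions, the per-packet budget shrinks from about 1,200 ns at 10 Gbps to about 60 ns at 200 Gbps, roughly 180 clock cycles for a hypothetical 3 GHz core. Smaller packets shrink the budget further.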

With all these trends accelerating, the standard CPU is already overwhelmed. AI training and machine learning workloads can tie up a server's CPUs for hours or days.

It’s now common for a third of each server’s CPU cores to run infrastructure tasks such as networking, storage management and security.
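The one-third figure translates directly into application capacity. The sketch below assumes, for illustration, that infrastructure work takes exactly one third of a server's cores and that all of it can be moved to a DPU; the 48-core server and the helper names are hypothetical.

```python
from fractions import Fraction

# Assumptions (hypothetical): infrastructure tasks such as networking, storage
# management and security use exactly one third of a server's cores, and a DPU
# can take over all of that work.
INFRA_SHARE = Fraction(1, 3)


def app_cores(total_cores: int, infra_share: Fraction = INFRA_SHARE) -> Fraction:
    """Cores left for applications when the CPU also runs infrastructure tasks."""
    return total_cores * (1 - infra_share)


def app_capacity_gain(infra_share: Fraction = INFRA_SHARE) -> Fraction:
    """Application cores after a full offload divided by application cores before it."""
    return 1 / (1 - infra_share)


cores = 48  # hypothetical server size
print(f"Without offload: {float(app_cores(cores)):.0f} of {cores} cores run applications")
print(f"With full offload: {cores} of {cores} cores run applications")
print(f"Application capacity gain: {float(app_capacity_gain()):.2f}x")
```

Under these assumptions, offloading returns 16 cores to applications and raises application capacity by a factor of 1.5, or 50%, without adding a server. The real gain depends on how much of the infrastructure work a given DPU can actually absorb.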

Specialized silicon is the solution.

With that work offloaded, CPUs can do what they do best: run general, single-threaded applications.

| Workload | Characteristics | Acceleration Needed | Best Processor |
|---|---|---|---|
| General applications | Single-threaded | None | CPU |
| AI, ML, HPC | Massively parallel | Integer or floating-point (FP) math | GPU |
| Graphics & video | Parallel, codecs | Matrix math, transforms, ray tracing, probability | GPU with ray tracing |
| Networking | Multithreaded, pipelined | Packet processing, routing, encapsulation | DPU |
| Security | Single- or multithreaded | Encryption, inspection, domain isolation | DPU |

Hardware Innovation Fuels Software-Defined Innovation

The software-defined revolution has brought the hardware-software balance full circle. Hardware design is important again.

But what matters most is no longer designing general-purpose CPUs or custom ASICs for each appliance. Instead, it's continuous innovation in specialized processors such as GPUs and DPUs.

The rapid pace of innovation in these processors poses challenges for the chip world. Taking advantage of ever-smaller semiconductor process nodes demands more complicated design efforts.

Every time the process shrinks, new design rules come into play. New, more efficient chips need larger design and verification teams.

As a result, chip companies must invest huge sums, not only in the logical design of their chips but also in their physical design, which must follow the rules and limits of physics down to the quantum realm.

Over time, only the larger chip companies will be able to design at scale, which means creating multiple new chips at each new process node. Other companies that make their own chips can't afford to design on the newest nodes and will have to settle for older, larger, less efficient processes.

We saw this in CPUs, where only three companies have been able to sustain innovation. They’ve done that by amortizing their CPU design efforts over large numbers of chips and customers.

The same seems likely for GPUs and DPUs. Big silicon players will support sustained innovation in software.

Innovators Can Eat Hearty

Armed with innovative GPUs and DPUs, data centers will deploy processors optimized for different tasks. As a result, software, driven by AI, will continue eating the world.

The modern data center is software-defined and hardware-accelerated. The two go hand in hand.