Researchers are finding the jet fuel for the million-x advances their work now demands.

They face rising mountains of data and soaring computational requirements that they can’t surmount by relying solely on Moore’s law, the sputtering combustion engine of yesterday’s systems.

So, they’re strapping together a trio of thrusters for the exponential acceleration they need.

Speeding Up and Scaling Out

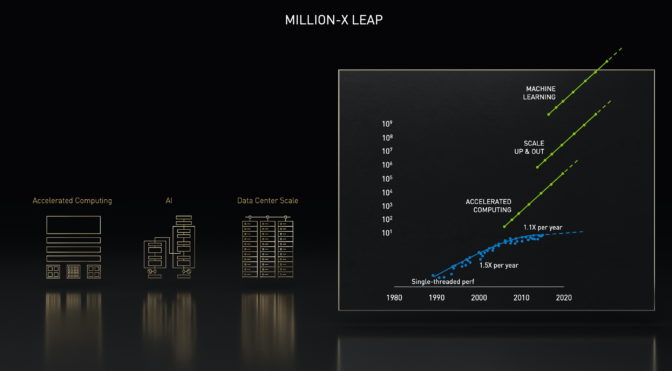

Accelerated computing is one of tech’s three modern motors. Over the past decade, it has delivered a 1,000x increase in performance, thanks to advances in five generations of GPUs and the full stack of software we’ve built on top of them.

Scaling is a second engine, racking up advances of nearly 100,000x. That’s because the data center, rather than a single chip or server, is the new unit of computing.

For example, in 2015 it took a single Kepler GPU nearly a month to train ResNet-50, a popular computer vision model. Today, we train that same model in less than half a minute on Selene, the world’s most powerful industrial supercomputer, which packs thousands of NVIDIA Ampere architecture GPUs.
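As a rough check on the scale of that example, take "nearly a month" as about 30 days and "less than half a minute" as 30 seconds. These are rounded values assumed here, not exact benchmark times. The ResNet-50 training speedup then works out to:

$$
\frac{30\ \text{days}}{30\ \text{s}} = \frac{30 \times 24 \times 3600\ \text{s}}{30\ \text{s}} = \frac{2{,}592{,}000\ \text{s}}{30\ \text{s}} = 86{,}400\times
$$

That is in line with the nearly 100,000x figure for scaling. Note, though, that this single comparison combines two gains: the newer GPU architecture and the move from one GPU to thousands.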

We developed many key technologies to enable this scaling, like our Megatron software, Magnum IO for multi-GPU and multinode processing, and SHARP for in-network computing.

The Dawn of Deep Learning

The third and most transformational force of our time is AI computing.

Last year, deep learning powered a simulation of 305 million atoms over a 1-millisecond timescale, showing the inner workings of the SARS-CoV-2 virus. That marked a more than 10-millionfold increase over a then state-of-the-art simulation, run 15 years earlier, of 1 million atoms for 20 nanoseconds.
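One way to see where the 10-millionfold figure comes from is to compare the product of system size and simulated time for the two runs. This is an interpretation assumed here; the post does not spell out the comparison metric.

$$
\frac{305 \times 10^{6}\ \text{atoms} \times 1 \times 10^{-3}\ \text{s}}{1 \times 10^{6}\ \text{atoms} \times 20 \times 10^{-9}\ \text{s}} = 305 \times 5 \times 10^{4} \approx 1.5 \times 10^{7}
$$

The result is roughly 15 million, consistent with a "more than 10-millionfold" increase.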

That’s why the combination of AI and high performance computing is sweeping through the scientific community. Researchers posted nearly 5,000 papers on AI+HPC to arXiv last year, up from fewer than 100 five years ago.

One of the most recent, from NVIDIA researchers, showed a way to combine neural networks with classical physics equations to speed up traditional simulations 1,000x.

Accelerating Drug Discovery

Today, the combination of accelerated computing, massive scaling and AI is advancing science and industrial computing.

No effort could be more vital than speeding drug discovery to treat diseases. It’s challenging work that requires decoding protein structures in 3D to see how they work, then identifying the chemical compounds that stop them from infecting healthy cells.

Traditional methods using X-rays and electron microscopes have decoded only 17 percent of the roughly 25,000 human proteins. DeepMind used an ensemble of AI models in its AlphaFold system last year to make a big leap, predicting the 3D structure of more than 20,000 human proteins.

Similarly, researchers at NVIDIA, Caltech and startup Entos blended machine learning and physics to create OrbNet, speeding up molecular simulations by many orders of magnitude. Building on that work, Entos can accelerate its simulations of chemical reactions between proteins and drug candidates 1,000x, completing in three hours what would have taken more than three months.
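Here is a quick sanity check on those figures. Assuming the 1,000x speedup applies to the whole three-hour run, the same work would originally have taken about:

$$
3\ \text{h} \times 1{,}000 = 3{,}000\ \text{h} = \frac{3{,}000}{24}\ \text{days} = 125\ \text{days} \approx 4\ \text{months}
$$

That is consistent with "more than three months."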

Understanding a Changing Climate

It’s a similar situation in other fields. Scientists hope soon to run global climate simulations at kilometer-scale resolution to help us adapt to changing weather patterns and better prepare for disasters.

But to track clouds and storm patterns accurately, they need to work at a resolution of one meter. That requires a whopping 100 billion times more computing power.

At the pace of Moore’s law, we wouldn’t get there until 2060. So, scientists seeking millionfold leaps are building digital twins of our planet with accelerated computing and AI at scale.

To speed that work, we announced plans to build Earth-2, the world’s most powerful AI supercomputer dedicated to predicting, mitigating and adapting to climate change. It will create a digital twin of Earth in Omniverse.

Industries Spawn Digital Twins

Researchers are already using these techniques to build digital twins of factories and cities.

For example, Siemens Energy used the NVIDIA Modulus AI framework running on dozens of GPUs in the cloud to simulate an entire power plant. The simulation can predict mechanical failures caused by the corrosive effects of steam, reducing downtime, saving money and keeping the lights on.

It’s the kind of simulation technology that promises more efficient farms and hospitals, and transformations in every industry. That’s why we developed Modulus: it eases the job of creating AI-powered, physically accurate simulations.

It’s one more tool fueled by today’s new engines of computing to enable the next millionfold leap.

Accelerated computing with AI at data center scale will deliver millionfold increases in performance to solve problems such as understanding climate change, discovering drugs, fueling industrial transformations and much more.

To get the big picture, watch NVIDIA CEO Jensen Huang’s GTC keynote address.