To help accelerate work on some of the most challenging problems of our time, NVIDIA announced an AI framework that provides engineers, scientists and researchers with a customizable, easy-to-adopt, physics-based toolkit for building neural network models of digital twins.

NVIDIA PhysicsNeMo, a framework for developing physics-ML models, is designed to accelerate work in fields such as protein engineering and climate science, where AI expertise is scarce but the need for AI and physics-driven digital twin capabilities is growing fast.

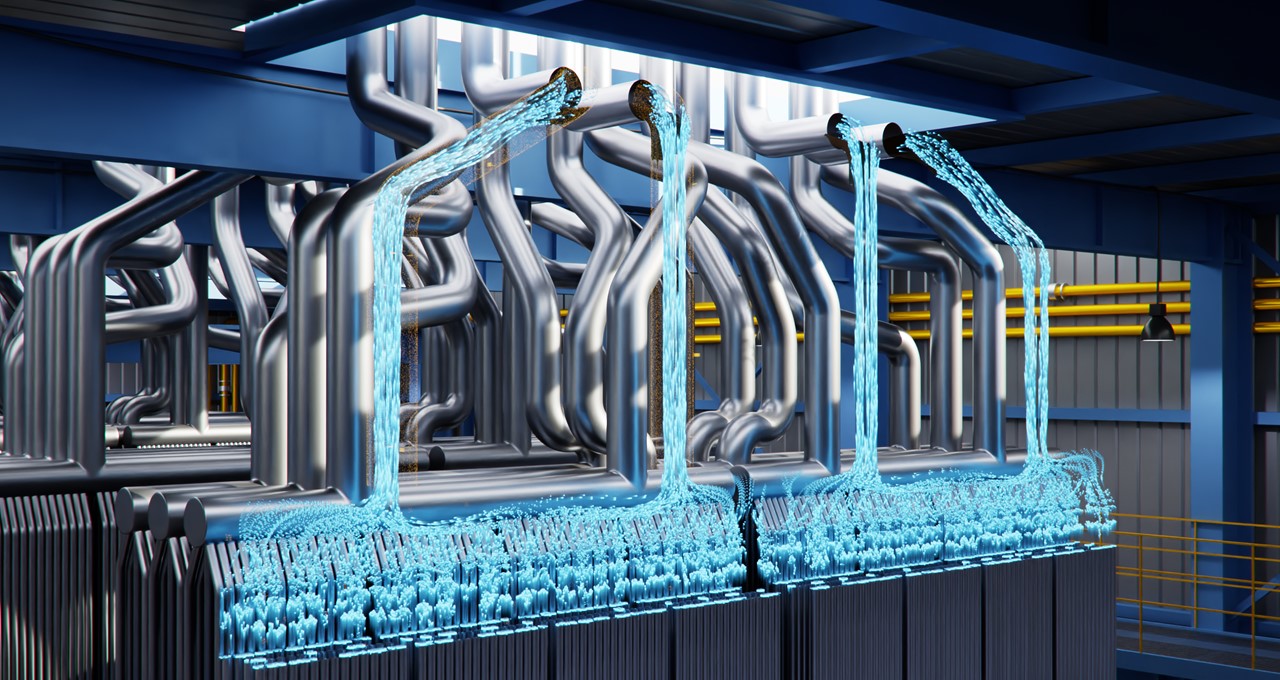

Digital twins have emerged as powerful tools for tackling problems that range from the molecular scale, such as drug discovery, to global challenges such as climate change.

NVIDIA PhysicsNeMo gives scientists a framework to build highly accurate digital reproductions of complex and dynamic systems that will enable the next generation of breakthroughs across a vast range of industries.

Physics-Based Neural Networks

PhysicsNeMo trains neural networks to use the fundamental laws of physics to model the behavior of complex systems in a wide range of fields. The resulting surrogate model can then be used in digital twin applications ranging from industrial use cases to climate science.
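
To make the idea of training against physical laws concrete, here is a minimal sketch in plain Python. It is not the PhysicsNeMo API. It assumes a toy governing equation, u''(x) + u(x) = 0, and a hypothetical helper named `residual_loss` that measures how badly a candidate function violates that equation at a set of sample points. A physics-ML framework would typically minimize a loss of this kind during training and would use automatic differentiation rather than the finite difference used here.

```python
import math

def residual_loss(u, xs, h=1e-3):
    """Mean squared residual of the toy ODE u''(x) + u(x) = 0 at the points xs.

    u'' is approximated with a central finite difference. This helper is
    hypothetical and only illustrates the shape of a physics-based loss.
    """
    total = 0.0
    for x in xs:
        u_xx = (u(x + h) - 2.0 * u(x) + u(x - h)) / (h * h)
        total += (u_xx + u(x)) ** 2
    return total / len(xs)

# Collocation points where the physics is enforced.
xs = [i * math.pi / 20 for i in range(21)]

# sin(x) satisfies u'' + u = 0, so its residual is close to zero.
loss_exact = residual_loss(math.sin, xs)

# A parabola with the same zeros at 0 and pi does not satisfy the equation.
loss_wrong = residual_loss(lambda x: x * (math.pi - x), xs)

print(f"residual loss for sin(x):     {loss_exact:.3e}")
print(f"residual loss for x*(pi - x): {loss_wrong:.3e}")
```

The candidate that obeys the equation scores a far smaller loss than the one that merely matches the boundary values, which is the signal a physics-based training loop would follow.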

As in most AI-based approaches, PhysicsNeMo includes a data preparation module that helps manage observed or simulated data. It also accounts for the geometry of the systems it models and the explicit parameters of the space represented by the input geometry.

The key workflow and elements of NVIDIA PhysicsNeMo include:

- Sampling planner, which enables the user to select an approach, such as quasi-random sampling or importance sampling, to improve the trained model’s convergence and accuracy.

- Python-based APIs to take symbolic governing partial differential equations and build physics-based neural networks.

- Curated layers and network architectures that are proven effective for physics-based problems.

- Physics-ML engine, which takes these inputs to train the model using PyTorch and TensorFlow, cuDNN for GPU acceleration and NVIDIA Magnum IO for multi-GPU and multinode scaling.
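
As an illustration of the quasi-random sampling option mentioned in the list above, the following sketch generates points from a Halton sequence, a common low-discrepancy sequence. The source does not say which sequence PhysicsNeMo uses, so the choice of Halton and the function names `van_der_corput` and `halton_points` are assumptions made for this example only.

```python
def van_der_corput(index, base=2):
    """Return element `index` of the van der Corput sequence in [0, 1)."""
    value, denom = 0.0, 1.0
    while index > 0:
        index, digit = divmod(index, base)
        denom *= base
        value += digit / denom
    return value

def halton_points(count, bases=(2, 3)):
    """Return `count` quasi-random points in the unit hypercube.

    Each coordinate uses a van der Corput sequence with a different prime
    base, which is the standard Halton construction.
    """
    return [tuple(van_der_corput(i, b) for b in bases)
            for i in range(1, count + 1)]

# Sample four 2D points; each new point fills a gap left by earlier ones.
points = halton_points(4)
for p in points:
    print(p)
```

Compared with uniformly random draws, low-discrepancy points cover the domain more evenly for the same sample count, which is one reason a sampling planner might offer them.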

Fast Turnaround Time

The GPU-accelerated toolkit offers rapid turnaround complementing traditional analysis, enabling faster insights. PhysicsNeMo allows users to explore different configurations and scenarios of a system by assessing the impact of changing its parameters.

PhysicsNeMo's high-performance TensorFlow-based implementation optimizes performance by taking advantage of XLA, a domain-specific compiler for linear algebra that accelerates TensorFlow models. It uses the Horovod distributed deep learning training framework for multi-GPU scaling.

Once a model is trained, PhysicsNeMo can run inference in near real time or interactively. By contrast, traditional analysis must be evaluated one run at a time, and each run is computationally expensive.
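
The kind of parameter exploration described above can be sketched as a simple sweep. In this example the trained surrogate is replaced by a stand-in: the closed-form peak temperature of a rod with uniform internal heating and both ends held at a fixed temperature. The function name `peak_temperature` and all parameter values are hypothetical. In practice the callable would be a trained model, but the sweep itself would look much the same.

```python
def peak_temperature(conductivity, heat_source=1000.0, length=1.0, wall_temp=300.0):
    """Stand-in for a trained, parameterized surrogate (hypothetical).

    Uses the analytic steady-state solution for 1D conduction with uniform
    volumetric heating and both ends held at wall_temp:
        T_max = wall_temp + heat_source * length**2 / (8 * conductivity)
    Units: W/m^3 for heat_source, m for length, W/(m*K) for conductivity, K.
    """
    return wall_temp + heat_source * length ** 2 / (8.0 * conductivity)

# Candidate design parameters to compare.
conductivities = [5.0, 10.0, 20.0, 40.0]

# Each evaluation is one cheap function call instead of one full simulation.
sweep = {k: peak_temperature(k) for k in conductivities}

# Pick the configuration with the lowest peak temperature.
best = min(sweep, key=sweep.get)

for k, t in sweep.items():
    print(f"k = {k:5.1f} W/(m*K) -> peak temperature {t:.3f} K")
print(f"lowest peak temperature at k = {best}")
```

Because every evaluation is inexpensive, the same loop can cover many more configurations than would be practical with one full simulation per design point.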

Easy to Adopt

PhysicsNeMo is customizable and easy to adopt. It offers APIs for implementing new physics and geometry. It’s designed so those just starting with AI-driven digital-twin applications can put it to work fast.

The framework includes step-by-step tutorials for getting started with computational fluid dynamics, heat transfer and more. It also offers a growing list of implementations for application domains such as modeling turbulence, transient wave equations, Navier-Stokes equations, Maxwell’s equations for electromagnetics, inverse problems and other multiphysics problems.

NVIDIA PhysicsNeMo is available now as a free download through the NVIDIA Developer Zone.