Self-driving cars see the world using sensors. But how do they make sense of all that data?

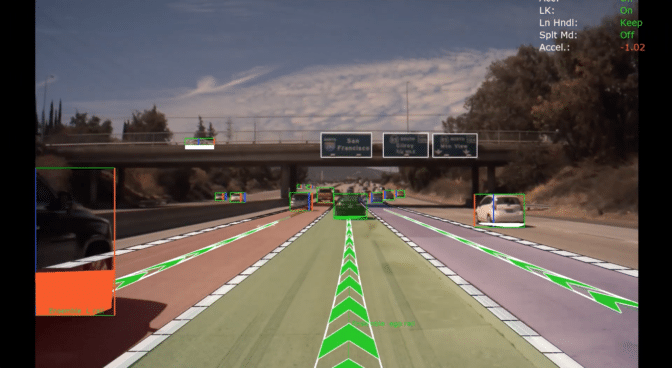

The key is perception, the industry’s term for a vehicle’s ability to process and identify road data while driving, from street signs to pedestrians to surrounding traffic. With the power of AI, driverless vehicles can recognize and react to their environment in real time, allowing them to navigate safely.

They accomplish this using an array of algorithms known as deep neural networks, or DNNs.

Rather than requiring a manually written set of rules for the car to follow, such as “stop if you see red,” DNNs enable vehicles to learn how to navigate the world on their own using sensor data.

These mathematical models are inspired by the human brain — they learn by experience. If a DNN is shown multiple images of stop signs in varying conditions, it can learn to identify stop signs on its own.
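To make the contrast with hand-written rules concrete, here is a minimal sketch in Python. It is not a DNN and not how any production network is built: it trains a single artificial neuron (logistic regression) on a handful of made-up color samples, so the "stop if you see red" behavior comes from labelled examples rather than from an explicit rule. The sample values, the function names `train` and `predict_stop`, and the training settings are all assumptions chosen for illustration.

```python
import math

# Toy training data (made up for illustration): the average color of a light,
# as (red, green, blue) scaled to 0..1, labelled 1 for "stop" and 0 for "go".
SAMPLES = [
    ((0.9, 0.1, 0.1), 1),
    ((0.8, 0.2, 0.1), 1),
    ((0.7, 0.1, 0.2), 1),
    ((0.1, 0.9, 0.2), 0),
    ((0.2, 0.8, 0.1), 0),
    ((0.1, 0.7, 0.3), 0),
]


def sigmoid(z):
    """Squash a raw score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def score(weights, bias, features):
    """Weighted sum of the inputs, passed through the sigmoid."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)


def train(samples, epochs=2000, learning_rate=0.5):
    """Fit one neuron with plain stochastic gradient descent."""
    weights = [0.0, 0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in samples:
            error = score(weights, bias, features) - label
            # Nudge each weight against the error it contributed to.
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


def predict_stop(model, features):
    """Return True when the learned model thinks the light means stop."""
    weights, bias = model
    return score(weights, bias, features) > 0.5


MODEL = train(SAMPLES)
```

Nothing in the code mentions red explicitly. After training, `predict_stop(MODEL, (0.85, 0.15, 0.1))` should come out as stop and a mostly green sample as go, purely because of the examples the model was shown. A real DNN stacks many such units and learns from images rather than three hand-picked numbers.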

Two Keys to Self-Driving Car Safety: Diversity and Redundancy

But just one algorithm can’t do the job on its own. An entire set of DNNs, each dedicated to a specific task, is necessary for safe autonomous driving.

These networks are diverse, covering everything from reading signs to identifying intersections to detecting driving paths. They’re also redundant, with overlapping capabilities to minimize the chances of a failure.
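One way to picture redundancy is as a cross-check between two networks that estimate the same quantity. The Python sketch below is an assumption-laden illustration, not NVIDIA's fusion logic: `fuse_estimates`, the status labels and the tolerance value are hypothetical names and numbers. It takes two independent estimates of, say, the lane center's lateral offset in meters and decides whether they confirm each other.

```python
from typing import Optional, Tuple


def fuse_estimates(
    primary: Optional[float],
    secondary: Optional[float],
    tolerance: float = 0.5,
) -> Tuple[Optional[float], str]:
    """Combine two overlapping estimates of the same quantity.

    Returns (value, status), where status is one of:
      "confirmed"   - both sources agree within the tolerance
      "degraded"    - only one source produced an estimate
      "conflict"    - both produced estimates but they disagree
      "unavailable" - neither source produced an estimate
    """
    if primary is None and secondary is None:
        return None, "unavailable"
    if primary is None:
        return secondary, "degraded"
    if secondary is None:
        return primary, "degraded"
    if abs(primary - secondary) <= tolerance:
        # Agreement: average the two to smooth out small differences.
        return (primary + secondary) / 2.0, "confirmed"
    # Disagreement: report it rather than silently picking a side.
    return None, "conflict"
```

With overlapping networks, the loss of one source leaves a usable "degraded" estimate instead of nothing, and a disagreement becomes visible to downstream planning instead of being hidden.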

There’s no set number of DNNs required for autonomous driving. And new capabilities arise frequently, so the list is constantly growing and changing.

To actually drive the car, the signals generated by the individual DNNs must be processed in real time. This requires a centralized, high-performance compute platform, such as NVIDIA DRIVE AGX.

Below are some of the core DNNs that NVIDIA uses for autonomous vehicle perception.

Pathfinders

DNNs that help the car determine where it can drive and safely plan the path ahead:

- OpenRoadNet identifies all of the drivable space around the vehicle, regardless of whether it’s in the car’s lane or in neighboring lanes.

- PathNet highlights the drivable path ahead of the vehicle, even if there are no lane markers.

- LaneNet detects lane lines and other markers that define the car’s path.

- MapNet also identifies lanes, along with landmarks that can be used to create and update high-definition maps.

Object Detection and Classification

DNNs that detect potential obstacles, as well as traffic lights and signs:

- DriveNet perceives other cars on the road, pedestrians, traffic lights and signs, but doesn’t read the color of the light or type of sign.

- LightNet classifies the state of a traffic light — red, yellow or green.

- SignNet discerns the type of sign — stop, yield, one way, etc.

- WaitNet detects conditions where the vehicle must stop and wait, such as intersections.
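The division of labor described above, where one network finds traffic lights and signs and others read them, can be sketched as a two-stage pipeline. The Python below is a hypothetical outline only: the `Detection` type, the `refine` function and the stub classifiers are invented for illustration and do not reflect the DRIVE Software API. The stubs return fixed answers in place of real networks.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


@dataclass
class Detection:
    kind: str                      # coarse class from the detector stage
    box: Box                       # where the object sits in the image
    detail: Optional[str] = None   # filled in by a second-stage classifier


def classify_light_stub(image: object, box: Box) -> str:
    """Stand-in for a light-state classifier; always answers "red"."""
    return "red"


def classify_sign_stub(image: object, box: Box) -> str:
    """Stand-in for a sign-type classifier; always answers "stop"."""
    return "stop"


def refine(
    detections: List[Detection],
    classifiers: Dict[str, Callable[[object, Box], str]],
    image: object,
) -> List[Detection]:
    """Send each detection to the specialist classifier for its kind."""
    for detection in detections:
        classify = classifiers.get(detection.kind)
        if classify is not None:
            detection.detail = classify(image, detection.box)
    return detections


# Hypothetical routing table: which specialist handles which coarse class.
CLASSIFIERS = {
    "traffic_light": classify_light_stub,
    "sign": classify_sign_stub,
}
```

In this outline, a detection of kind `"pedestrian"` passes through unchanged because no specialist is registered for it, while lights and signs pick up a `detail` value from the second stage. Keeping the stages separate lets each network stay focused on one task, which matches the one-DNN-per-task approach the article describes.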

The List Goes On

DNNs that monitor the status of parts of the vehicle and cockpit, and that facilitate maneuvers like parking:

- ClearSightNet monitors how well the vehicle’s cameras can see, detecting conditions that limit sight such as rain, fog and direct sunlight.

- ParkNet identifies spots available for parking.

These networks are just a sample of the DNNs that make up the redundant and diverse DRIVE Software perception layer.

To learn more about how NVIDIA approaches autonomous driving software, check out the new DRIVE Labs video series.