Outlining a sweeping vision for the “age of AI,” NVIDIA CEO Jensen Huang Monday kicked off this week’s GPU Technology Conference.

Huang made major announcements in data centers, edge AI, collaboration tools and healthcare in a talk simultaneously released in nine episodes, each under 10 minutes.

“AI requires a whole reinvention of computing – full-stack rethinking – from chips, to systems, algorithms, tools, the ecosystem,” Huang said, standing in front of the stove of his Silicon Valley home.

Beneath a series of announcements spanning everything from healthcare to robotics to videoconferencing, Huang’s underlying story was simple: AI is changing everything, and that has put NVIDIA at the intersection of changes that touch every facet of modern life.

More and more of those changes are being unveiled first in Huang’s kitchen, whose playful bouquet of colorful spatulas has become an increasingly familiar backdrop for announcements throughout the COVID-19 pandemic.

“NVIDIA is a full stack computing company — we love working on extremely hard computing problems that have great impact on the world — this is right in our wheelhouse,” Huang said. “We are all-in, to advance and democratize this new form of computing – for the age of AI.”

This week’s GTC is one of the biggest yet. It features more than 1,000 sessions — 400 more than the last GTC — in 40 topic areas. And it’s the first to run across the world’s time zones, with sessions in English, Chinese, Korean, Japanese, and Hebrew.

Accelerated Data Center

Modern data centers, Huang explained, are software-defined, making them more flexible and adaptable.

But running that software-defined infrastructure creates an enormous load: it can consume 20 to 30 percent of a data center’s CPU cores. And as microservices and “east-west traffic” (traffic within a data center) increase, this load will increase dramatically.

“A new kind of processor is needed,” Huang explained: “We call it the data processing unit.”

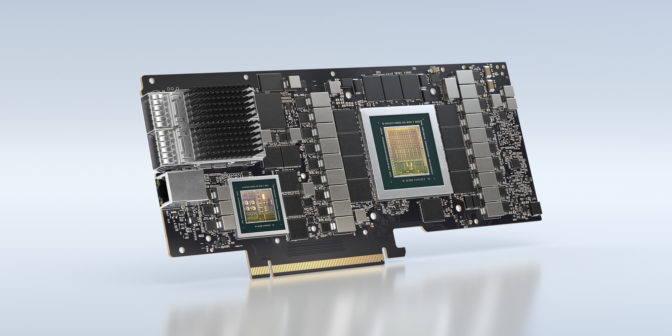

The DPU combines accelerators for networking, storage and security with programmable Arm CPUs that offload the hypervisor, Huang said.
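To put the 20 to 30 percent figure in concrete terms, here is a small worked calculation. The 64-core server is a hypothetical example, not a configuration from the keynote, and the sketch only counts the cores tied up by infrastructure software; it does not model how much of that work a particular DPU actually offloads.

```python
def infrastructure_cores(total_cores: int, overhead_fraction: float) -> float:
    """Return how many CPU cores infrastructure software consumes."""
    if not 0.0 <= overhead_fraction <= 1.0:
        raise ValueError("overhead_fraction must be between 0 and 1")
    return total_cores * overhead_fraction


# Hypothetical 64-core server, using the 20-30 percent range cited in the keynote.
for fraction in (0.20, 0.30):
    used = infrastructure_cores(64, fraction)
    print(f"{fraction:.0%} overhead: {used:.1f} of 64 cores")
```

On that hypothetical server, roughly 13 to 19 cores would be busy running infrastructure rather than applications.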

The new NVIDIA BlueField-2 DPU is a programmable processor with powerful Arm cores and acceleration engines for at-line-speed processing for networking, storage and security. It’s the latest fruit of NVIDIA’s acquisition of high-speed interconnect provider Mellanox Technologies, which closed in April.

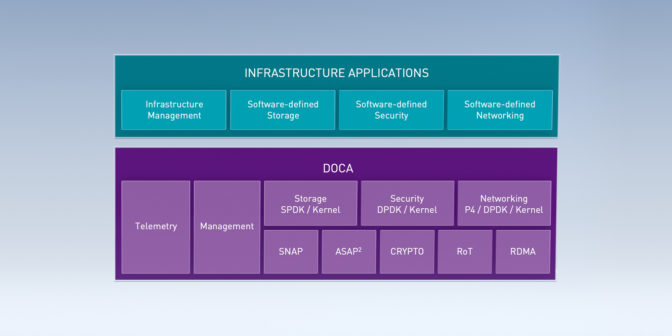

Data Center — DOCA — A Programmable Data Center Infrastructure Processor

NVIDIA also announced DOCA, its programmable data-center-infrastructure-on-a-chip architecture.

“DOCA SDKs let developers write infrastructure apps for software-defined networking, software-defined storage, cybersecurity, telemetry and in-network computing applications yet to be invented,” Huang said.

Huang also touched on a partnership with VMware, announced last week, to port VMware onto BlueField. VMware “runs the world’s enterprises — they are the OS platform in 70 percent of the world’s companies,” Huang explained.

Data Center — DPU Roadmap in ‘Full Throttle’

Further out, Huang said NVIDIA’s DPU roadmap shows advancements coming fast.

BlueField-2 is sampling now, BlueField-3 is finishing and BlueField-4 is in high gear, Huang reported.

“We are going to bring a ton of technology to networking,” Huang said. “In just a couple of years, we’ll span nearly 1,000 times in compute throughput” on the DPU.

BlueField-4, arriving in 2023, will add support for the CUDA parallel programming platform and NVIDIA AI — “turbocharging the in-network computing vision.”
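A throughput gain of nearly 1,000 times implies a steep yearly rate. The sketch below assumes, for illustration only, that the span runs from BlueField-2 in 2020 to BlueField-4’s expected 2023 arrival, about three years; the keynote doesn’t specify the exact period or how throughput is measured. The function name is hypothetical.

```python
def per_year_factor(total_gain: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_gain over the given years."""
    if total_gain <= 0 or years <= 0:
        raise ValueError("total_gain and years must be positive")
    return total_gain ** (1.0 / years)


print(round(per_year_factor(1000, 3), 2))  # 10.0  (assumed three-year span)
print(round(per_year_factor(1000, 2), 2))  # 31.62 (if it took only two years)
```

Under the three-year assumption, throughput would need to grow about 10x each year; compressed into two years, it would be about 32x per year.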

You can get those capabilities now, Huang announced, with the new BlueField-2X. It adds an NVIDIA Ampere architecture GPU to BlueField-2 for in-network computing with CUDA and NVIDIA AI.

“BlueField-2X is like having a BlueField-4, today,” Huang said.

Data Center — GPU Inference Momentum

Consumer internet companies are also turning to NVIDIA technology to deliver AI services.

Inference, which puts fully trained AI models to work, is key to a new generation of AI-powered consumer services.

In aggregate, NVIDIA GPU inference compute in the cloud already exceeds all cloud CPUs, Huang said.

Huang announced that Microsoft is adopting NVIDIA AI on Azure to power smart experiences in Microsoft Office, including smart grammar correction and text prediction.

Microsoft Office joins Square, Twitter, eBay, GE Healthcare and Zoox, among other companies, in a broad array of industries using NVIDIA GPUs for inference.

Data Center — Cloudera and VMware

The ability to put vast quantities of data to work, fast, is key to modern AI and data science.

NVIDIA RAPIDS is the fastest extract, transform and load (ETL) engine on the planet, and it supports multi-GPU and multi-node configurations.

NVIDIA modeled the RAPIDS APIs after hugely popular data science frameworks (pandas, XGBoost and scikit-learn), so RAPIDS is easy to pick up.
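Because cuDF, the RAPIDS DataFrame library, mirrors much of the pandas API, the same DataFrame code can often run on either. A minimal sketch, assuming cuDF may not be installed: it imports cuDF when available and otherwise falls back to pandas, then runs an identical groupby. The function `total_by_store` and its column names are hypothetical.

```python
try:
    import cudf as xdf  # RAPIDS GPU DataFrames, if available
except ImportError:
    import pandas as xdf  # CPU fallback exposing the same calls used below


def total_by_store(columns: dict) -> dict:
    """Sum the 'amount' column per 'store' (hypothetical column names)."""
    df = xdf.DataFrame(columns)
    totals = df.groupby("store")["amount"].sum().sort_index()
    # cuDF results live in GPU memory; copy them to pandas before converting.
    if hasattr(totals, "to_pandas"):
        totals = totals.to_pandas()
    return totals.to_dict()


print(total_by_store({"store": ["a", "b", "a"], "amount": [1, 2, 3]}))
```

Only the import line differs between the GPU and CPU paths; the DataFrame construction and groupby calls are written once.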

On the industry-standard data processing benchmark, running the 30 complex database queries on a 10TB dataset, a 16-node NVIDIA DGX cluster ran 20x faster than the fastest CPU server.

Yet it’s one-seventh the cost and uses one-third the power.
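The three ratios can be combined into energy and cost efficiency, but only under an assumption the keynote doesn’t spell out: that the speedup, cost and power figures are all measured against the same CPU baseline on the same benchmark. Under that assumption, energy per run scales as power times runtime, and throughput per dollar scales as speedup divided by relative cost. A small worked calculation:

```python
from fractions import Fraction

speedup = Fraction(20)        # GPU cluster runs the benchmark 20x faster
cost_ratio = Fraction(1, 7)   # GPU system cost relative to the CPU baseline
power_ratio = Fraction(1, 3)  # GPU power draw relative to the CPU baseline

# Energy per run = power x time, and runtime scales as 1 / speedup.
energy_ratio = power_ratio / speedup
# Throughput per dollar improves by the speedup divided by the relative cost.
perf_per_dollar = speedup / cost_ratio

print(energy_ratio, perf_per_dollar)  # 1/60 140
```

If the shared-baseline assumption holds, that works out to about 1/60 the energy per benchmark run and about 140 times the throughput per dollar.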

Huang announced that Cloudera, whose hybrid-cloud data platform lets organizations manage, secure, analyze and learn predictive models from data, will accelerate the Cloudera Data Platform with NVIDIA RAPIDS, NVIDIA AI and NVIDIA-accelerated Spark.

NVIDIA and VMware also announced a second partnership, Huang said.

The companies will create a data center platform that supports GPU acceleration for all three major computing domains today: virtualized, distributed scale-out and composable microservices.

“Enterprises running VMware will be able to enjoy NVIDIA GPU and AI computing in any computing mode,” Huang said.

(Cutting) Edge AI

Someday, Huang said, trillions of AI devices and machines will populate the Earth – in homes, office buildings, warehouses, stores, farms, factories, hospitals, airports.

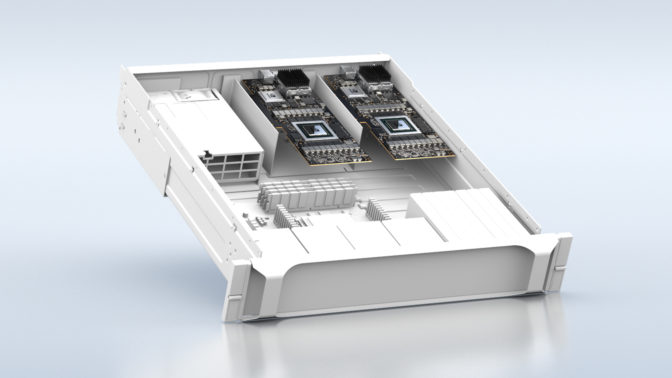

The NVIDIA EGX AI platform makes it easy for the world’s enterprises to stand up a state-of-the-art edge-AI server quickly, Huang said. It can control factories of robots, perform automatic checkout at retail or help nurses monitor patients, Huang explained.

Huang announced the EGX platform is expanding to combine the NVIDIA Ampere architecture GPU and BlueField-2 DPU on a single PCIe card. The updates give enterprises a common platform to build secure, accelerated data centers.

Huang also announced an early access program for a new service called NVIDIA Fleet Command. This new application makes it easy to deploy and manage updates across IoT devices, combining the security and real-time processing capabilities of edge computing with the remote management and ease of software-as-a-service.

Among the first companies provided early access to Fleet Command is KION Group, a leader in global supply chain solutions, which is using the NVIDIA EGX AI platform to develop AI applications for its intelligent warehouse systems.

Additionally, Northwestern Memorial Hospital, the No. 1 hospital in Illinois and one of the top 10 in the nation, is working with Whiteboard Coordinator to use Fleet Command for its IoT sensor platform.

“This is the iPhone moment for the world’s industries — NVIDIA EGX will make it easy to create, deploy and operate industrial AI services,” Huang said.

Edge AI — Democratizing Robotics

Soon, Huang added, everything that moves will be autonomous. AI software is the big breakthrough that will make robots smarter and more adaptable. But it’s the NVIDIA Jetson AI computer that will democratize robotics.

Jetson is an Arm-based SoC designed from the ground up for robotics, Huang explained, thanks to its sensor processors, its CUDA GPU and Tensor Cores and, most importantly, the richness of the AI software that runs on it.

The latest addition to the Jetson family, the Jetson Nano 2GB, will be $59, Huang announced. That’s $40, or about 40 percent, less than the $99 Jetson Nano Developer Kit announced last year.

“NVIDIA Jetson is mighty, yet tiny, energy-efficient and affordable,” Huang said.

Collaboration Tools

The shared, online world of the “metaverse” imagined in Neal Stephenson’s 1992 cyberpunk classic, “Snow Crash,” is already becoming real in shared virtual worlds like Minecraft and Fortnite, Huang said.

First introduced in March 2019, NVIDIA Omniverse — a platform for simultaneous, real-time simulation and collaboration across a broad array of existing industry tools — is now in open beta.

“Omniverse allows designers, artists, creators and even AIs using different tools, in different worlds, to connect in a common world—to collaborate, to create a world together,” Huang said.

Another tool NVIDIA pioneered, NVIDIA Jarvis conversational AI (since renamed NVIDIA Riva), is also now in open beta, Huang announced. Using the new SpeedSquad benchmark, Huang showed it’s twice as responsive and more natural sounding when running on NVIDIA GPUs.

It also runs for a third of the cost, Huang said.

“What did I tell you?” Huang said, referring to a catchphrase he’s used in keynotes over the years. “The more you buy, the more you save.”

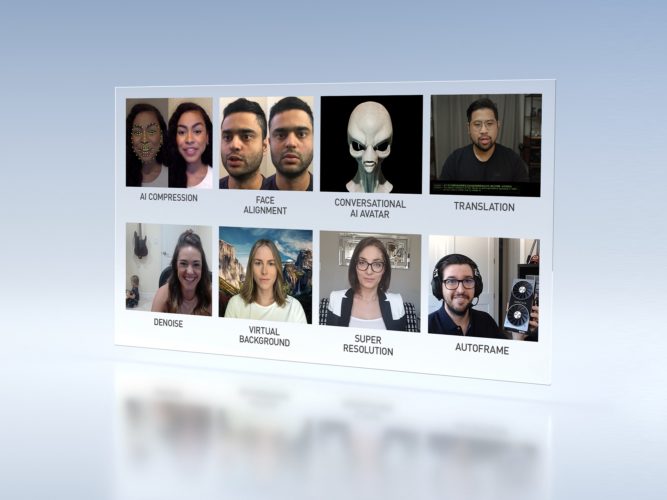

Collaboration Tools — Introducing NVIDIA Maxine

Video calls have moved from a curiosity to a necessity.

For work, social, school, virtual events, doctor visits — video conferencing is now the most critical application for many people. More than 30 million web meetings take place every day.

To improve this experience, Huang announced NVIDIA Maxine, a cloud-native streaming video AI platform for applications like video calls.

Using AI, Maxine can reduce the bandwidth consumed by video calls by a factor of 10. “AI can do magic for video calls,” Huang said.
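For a sense of scale, the sketch below converts a constant video bitrate into data transferred per hour and applies the 10x reduction Huang cited. The 1 Mbit/s starting bitrate is a hypothetical figure for a single stream, not a number from the keynote, and real calls vary with resolution and codec.

```python
def hourly_megabytes(bitrate_kbps: float, hours: float = 1.0) -> float:
    """Data moved by a stream at a constant bitrate, in megabytes (10**6 bytes)."""
    bits = bitrate_kbps * 1000 * hours * 3600
    return bits / 8 / 1_000_000


baseline_kbps = 1000               # hypothetical 1 Mbit/s video stream
reduced_kbps = baseline_kbps / 10  # the roughly 10x reduction cited for Maxine

print(hourly_megabytes(baseline_kbps), hourly_megabytes(reduced_kbps))  # 450.0 45.0
```

Under those assumptions, an hour-long call would drop from about 450 MB to about 45 MB per stream.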

“With Jarvis and Maxine, we have the opportunity to revolutionize video conferencing of today and invent the virtual presence of tomorrow,” Huang said.

Healthcare

When it comes to drug discovery amidst the global COVID-19 pandemic, lives are on the line.

Yet for years the costs of new drug discovery for the $1.5 trillion pharmaceutical industry have risen. New drugs take over a decade to develop, cost over $2.5 billion in research and development — doubling every nine years — and 90 percent of efforts fail.
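A doubling time translates directly into a compound annual growth rate. A minimal sketch: a cost that doubles every nine years grows by about 8 percent a year, and projecting the $2.5 billion figure one doubling period ahead gives $5 billion. That projection is a simple extrapolation assuming the historical trend continues, not a forecast from the keynote; the function names are hypothetical.

```python
def annual_growth_rate(doubling_years: float) -> float:
    """Compound yearly growth rate implied by a fixed doubling time."""
    return 2 ** (1 / doubling_years) - 1


def projected_cost(cost_now: float, years_ahead: float, doubling_years: float = 9) -> float:
    """Extrapolate a cost forward, assuming it keeps doubling every doubling_years."""
    return cost_now * 2 ** (years_ahead / doubling_years)


print(f"{annual_growth_rate(9):.1%}")  # 8.0%
print(projected_cost(2.5, 9))          # 5.0 (billions of dollars)
```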

New tools are needed. “COVID-19 hits home this urgency,” Huang said.

Breakthroughs in computer science mean researchers can begin to use simulation and in-silico methods to understand the biological machinery of the proteins that affect disease and to search for new drug candidates, Huang explained.

To accelerate this, Huang announced NVIDIA Clara Discovery — a state-of-the-art suite of tools for scientists to discover life-saving drugs.

“Where there are popular industry tools, our computer scientists accelerate them,” Huang said. “Where no tools exist, we develop them — like NVIDIA Parabricks, Clara Imaging, BioMegatron, BioBERT, NVIDIA RAPIDS.”

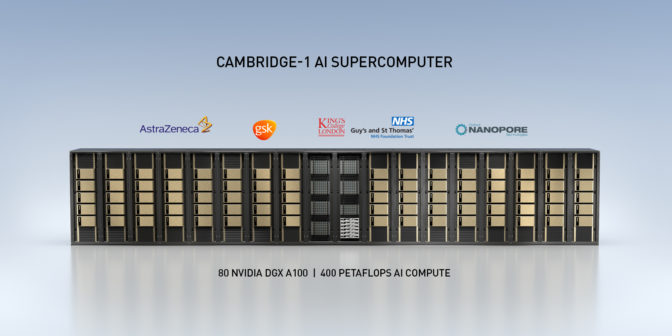

Huang also outlined an effort to build the U.K.’s fastest supercomputer, Cambridge-1, bringing state-of-the-art computing infrastructure to “an epicenter of healthcare research.”

Cambridge-1 will boast 400 petaflops of AI performance, making it among the world’s top 30 fastest supercomputers. It will host NVIDIA’s U.K. AI and healthcare collaborations with academia, industry and startups.

NVIDIA’s first partners are AstraZeneca, GSK, King’s College London, the Guy’s and St Thomas’ NHS Foundation Trust and startup Oxford Nanopore.

NVIDIA also announced a partnership with GSK to build the world’s first AI drug discovery lab.

Arm

Huang wrapped up his keynote with an update on NVIDIA’s partnership with Arm, whose power-efficient designs run the world’s smart devices.

NVIDIA agreed to acquire the U.K. semiconductor designer last month for $40 billion.

“Arm is the most popular CPU in the world,” Huang said. “Together, we will offer NVIDIA accelerated and AI computing technologies to the Arm ecosystem.”

Last year, Huang said, NVIDIA announced it would port CUDA and its scientific computing stack to Arm. Today, Huang announced a major initiative to advance the Arm platform, with NVIDIA investing across three dimensions:

- First, NVIDIA will complement Arm partners with GPU, networking, storage and security technologies to create complete accelerated platforms.

- Second, NVIDIA is working with Arm partners to create platforms for HPC, cloud, edge and PC — this requires chips, systems and system software.

- And third, NVIDIA is porting the NVIDIA AI and NVIDIA RTX engines to Arm.

“Today, these capabilities are available only on x86,” Huang said. “With this initiative, Arm platforms will also be leading-edge at accelerated and AI computing.”