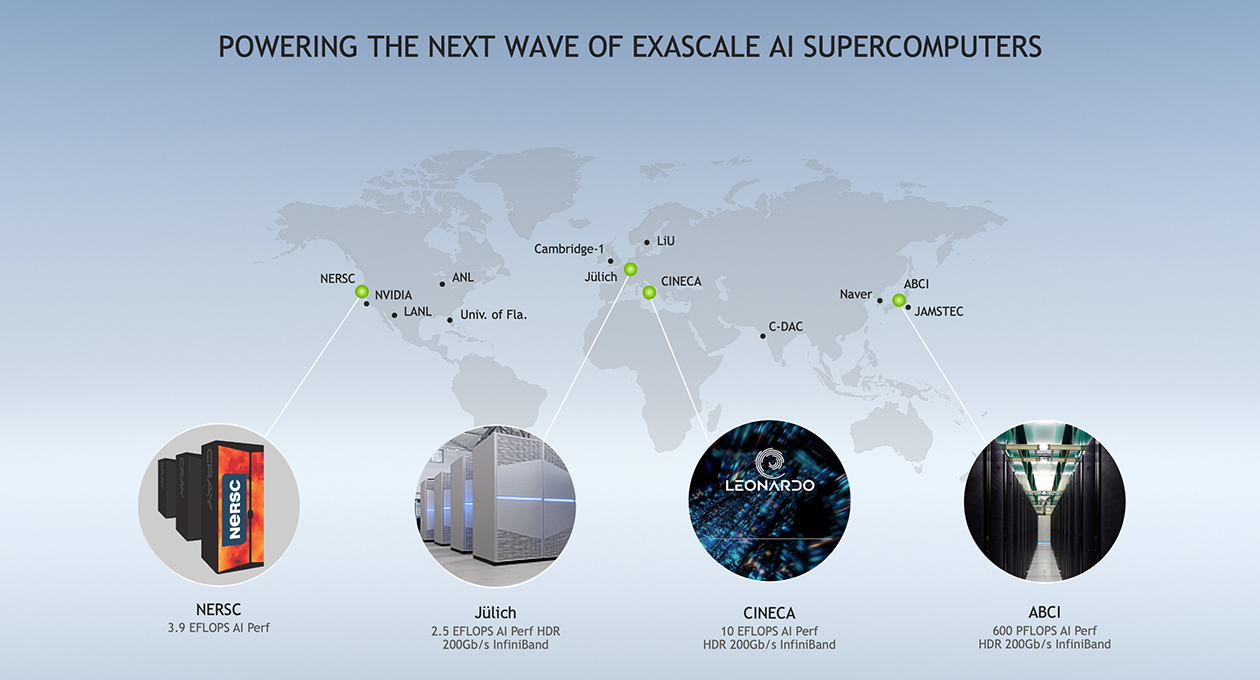

Supercomputing centers worldwide are onboarding NVIDIA Ampere GPU architecture to serve the growing demands of heftier AI models for everything from drug discovery to energy research.

Joining this movement, Fujitsu has announced a new exascale system for Japan-based AI Bridging Cloud Infrastructure (ABCI), offering 600 petaflops of performance at the National Institute of Advanced Industrial Science and Technology.

The debut comes as model complexity has surged 30,000x in the past five years, amid booming use of AI in research. For scientific applications, holding these hulking datasets in memory helps minimize batch processing and achieve higher throughput.

To fuel this next research ride, NVIDIA on Monday introduced the NVIDIA A100 80GB GPU with HBM2e technology. It doubles the A100 40GB GPU’s high-bandwidth memory to 80GB and delivers over 2 terabytes per second of memory bandwidth.

The new NVIDIA A100 80GB GPU lets larger models and datasets run in memory at higher bandwidth, enabling more compute throughput and faster results on workloads. By reducing internode communication, it can boost AI training performance by 1.4x with half the GPUs.
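One way to read the "1.4x with half the GPUs" figure is in per-GPU terms. The sketch below is a back-of-the-envelope calculation under a stated assumption, not NVIDIA's benchmark methodology: it assumes the baseline is a cluster of A100 40GB GPUs and that the 80GB configuration reaches 1.4x that cluster's total training throughput while using half as many GPUs. The function name `per_gpu_speedup` is hypothetical.

```python
# Back-of-the-envelope reading of "1.4x AI training performance with half the GPUs".
# Assumption (not from NVIDIA's methodology): the baseline is a cluster of
# A100 40GB GPUs, and the A100 80GB setup reaches 1.4x its total throughput
# while using 0.5x as many GPUs.

def per_gpu_speedup(total_speedup: float, gpu_ratio: float) -> float:
    """Relative throughput per GPU, given total speedup and the fraction of GPUs used."""
    return total_speedup / gpu_ratio


speedup = per_gpu_speedup(total_speedup=1.4, gpu_ratio=0.5)
print(f"Per-GPU throughput gain: {speedup:.1f}x")
```

Under that reading, each 80GB GPU does roughly 2.8x the work of a 40GB GPU on such a workload, largely because less time is spent exchanging data between nodes.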

NVIDIA also introduced new NVIDIA Mellanox 400G InfiniBand architecture, doubling data throughput and offering new in-network computing engines for added acceleration.

Europe Takes Supercomputing Ride

Europe is leaping in. Italian inter-university consortium CINECA announced the Leonardo system, set to be the world’s fastest AI supercomputer. It taps 14,000 NVIDIA Ampere architecture GPUs and NVIDIA Mellanox InfiniBand networking for 10 exaflops of AI. France’s Atos is set to build it.

Leonardo joins a growing pack of European systems on NVIDIA AI platforms supported by the EuroHPC initiative. In Germany, the Jülich Supercomputing Center recently launched the first NVIDIA GPU-powered AI exascale system to come online in Europe, delivering the region’s most powerful AI platform. The new Atos-designed Jülich system, dubbed JUWELS, is a 2.5-exaflops AI supercomputer that captured No. 7 on the latest TOP500 list.

Those also getting on board include Luxembourg’s MeluXina supercomputer; IT4Innovations National Supercomputing Center, home to the most powerful supercomputer in the Czech Republic; and the Vega supercomputer at the Institute of Information Science in Maribor, Slovenia.

Linköping University is planning to build Sweden’s fastest AI supercomputer, dubbed BerzeLiUs, based on the NVIDIA DGX SuperPOD infrastructure. It’s expected to provide 300 petaflops of AI performance for cutting-edge research.

NVIDIA is building Cambridge-1, an 80-node DGX SuperPOD with 400 petaflops of AI performance. It will be the fastest AI supercomputer in the U.K. and is planned for collaborative research within the country’s AI and healthcare community across academia, industry and startups.

Full Steam Ahead in North America

North America is taking the exascale AI supercomputing ride. NERSC (the U.S. National Energy Research Scientific Computing Center) is adopting NVIDIA AI for projects on Perlmutter, its system packing 6,200 A100 GPUs. NERSC now lays claim to 3.9 exaflops of AI performance.

NVIDIA Selene, a cluster based on the DGX SuperPOD, provides a public reference architecture for large-scale GPU clusters that can be deployed in weeks. The NVIDIA DGX SuperPOD system landed the top spot on the Green500 list of most efficient supercomputers, achieving a new world record in power efficiency of 26.2 gigaflops per watt, and it has set eight new performance milestones for MLPerf inference.

The University of Florida and NVIDIA are building the world’s fastest AI supercomputer in academia, aiming to deliver 700 petaflops of AI performance. The partnership puts UF among leading U.S. AI universities, advances academic research and helps address some of Florida’s most complex challenges.

At Argonne National Laboratory, researchers will use a cluster of 24 NVIDIA DGX A100 systems to scan billions of drugs in the search for treatments for COVID-19.

Los Alamos National Laboratory, Hewlett Packard Enterprise and NVIDIA are teaming up to deliver next-generation technologies to accelerate scientific computing.

All Aboard in APAC

Supercomputers in APAC will also be fueled by NVIDIA Ampere architecture. Korean search engine NAVER and Japanese messaging service LINE are using a DGX SuperPOD built with 140 DGX A100 systems with 700 petaflops of peak AI performance to scale out research and development of natural language processing models and conversational AI services.

The Japan Agency for Marine-Earth Science and Technology, or JAMSTEC, is upgrading its Earth Simulator with NVIDIA A100 GPUs and NVIDIA InfiniBand. The supercomputer is expected to have 39 petaflops of peak AI performance with a maximum theoretical performance of 1.2 petaflops of HPC performance, which today would rank high among the TOP500 supercomputers.

India’s Centre for Development of Advanced Computing, or C-DAC, is commissioning the country’s fastest and largest AI supercomputer, called PARAM Siddhi – AI. Built with 42 DGX A100 systems, it delivers 210 petaflops of AI performance and will address challenges in healthcare, education, energy, cybersecurity, space, automotive and agriculture.
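The aggregate figures quoted for DGX-based systems follow from simple multiplication. The sketch below assumes each DGX A100 contributes NVIDIA's headline rating of 5 petaflops of AI performance (a peak figure, not a measured result); the helper name `system_ai_petaflops` is hypothetical.

```python
# Aggregate peak AI performance of a DGX A100-based system.
# Assumption: each DGX A100 is counted at NVIDIA's headline rating of
# 5 petaflops of AI performance (a peak figure, not a measured benchmark).
DGX_A100_AI_PETAFLOPS = 5


def system_ai_petaflops(num_systems: int) -> int:
    """Peak AI petaflops for a cluster built from num_systems DGX A100 units."""
    return num_systems * DGX_A100_AI_PETAFLOPS


total = system_ai_petaflops(42)  # PARAM Siddhi – AI: 42 DGX A100 systems
print(f"{total} petaflops = {total / 1000:.2f} exaflops")
```

The same arithmetic lines up with the other DGX-based systems in this article: 80 nodes give Cambridge-1's 400 petaflops, and 140 DGX A100 systems give the NAVER and LINE SuperPOD's 700 petaflops.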

Buckle up. Scientific research worldwide has never enjoyed such a ride.