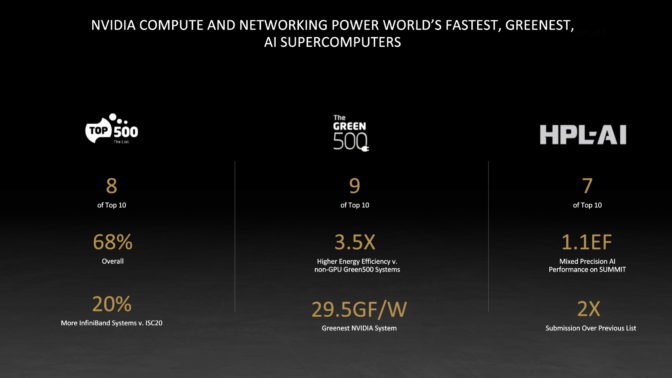

NVIDIA technologies power 342 systems on the TOP500 list released at the ISC High Performance event today, including 70 percent of all new systems and eight of the top 10.

The latest ranking of the world’s most powerful systems shows high performance computing centers are increasingly adopting AI. It also demonstrates that users continue to embrace the combination of NVIDIA AI, accelerated computing and networking technologies to run their scientific and commercial workloads.

For example, the number of systems on the list using InfiniBand jumped 21 percent from last year, strengthening its position as the network of choice for handling a rising tide of AI, HPC and simulation data with low latency and acceleration.

In addition, two new systems on the list are what we call superclouds — emerging styles of shared supercomputers with new capabilities at the intersection of AI, high performance computing and the cloud.

Here Comes a Supercloud

Microsoft Azure took public cloud services to a new level with systems that claimed four consecutive spots on the TOP500 list, No. 26 through No. 29. They are parts of a global AI supercomputer called the ND A100 v4 cluster, available on demand today in four global regions.

Each of the systems delivered 16.59 petaflops on the HPL benchmark, also known as Linpack, a traditional measure of HPC performance on 64-bit floating-point math and the basis for the TOP500 rankings.

An Industrial HPC Era Begins

Azure is an example of what NVIDIA CEO Jensen Huang calls “an industrial HPC revolution,” the confluence of AI with high performance and accelerated computing that’s advancing every field of science and industry.

Under the hood, eight NVIDIA A100 Tensor Core GPUs power each virtual instance of the Azure cluster. Each chip has its own HDR 200G InfiniBand link that can create fast connections to thousands of GPUs in Azure.

UK Researchers Go Cloud-Native

The University of Cambridge debuted the fastest academic system in the U.K., a supercomputer that hit No. 3 on the Green500 list of the world’s most energy-efficient systems. It’s another sort of supercloud.

Called Wilkes-3, it’s the world’s first cloud-native supercomputer, letting researchers share virtual resources with privacy and security without compromising performance. It does this thanks to NVIDIA BlueField DPUs, optimized to execute security, virtualization and other data-processing tasks.

The system uses 320 A100 GPUs linked on an HDR 200G InfiniBand network to accelerate simulations, AI and data analytics for academic research as well as commercial partners exploring the frontiers of science and medicine.

New TOP500 Systems Embrace AI

Many of the new NVIDIA-powered systems on the list show the rising importance of AI in high performance computing for both scientific and commercial users.

Perlmutter, at the National Energy Research Scientific Computing Center (NERSC), hit No. 5 on the TOP500 with 64.59 Linpack petaflops, thanks in part to its 6,144 A100 GPUs.

The system delivered more than half an exaflops of performance on the latest version of HPL-AI. It’s an emerging benchmark of converged HPC and AI workloads that uses mixed-precision math — the basis of deep learning and many scientific and commercial jobs — while still delivering the full accuracy of double-precision math.

AI performance is increasingly important because AI is “a growth area in the U.S. Department of Energy, where proof of concepts are moving into production use,” said Wahid Bhimji, acting lead for NERSC’s data and analytics services group.

HiPerGator AI took No. 22 with 17.20 petaflops and ranked No. 2 on the Green500, making it the world’s most energy-efficient academic supercomputer. It missed the top spot on the Green500 by a whisker — just 0.18 Gflops/watt.

Like 12 others on the latest list, the system uses the modular architecture of the NVIDIA DGX SuperPOD, a recipe that let the University of Florida rapidly deploy one of the world’s most powerful academic AI supercomputers. The system also helped make the university a leader in AI, with a stated goal of creating 30,000 AI-enabled graduates by 2030.

MeluXina, in Luxembourg, ranked No. 37 with 10.5 Linpack petaflops. It’s among the first systems to debut on the list from a network of European national supercomputers that will apply AI and data analytics across scientific and commercial applications.

Cambridge-1 ranked No. 42 on the TOP500 with 9.68 petaflops, making it the most powerful system in the U.K. It will serve U.K. healthcare researchers in academic and commercial organizations including AstraZeneca, GSK and Oxford Nanopore.

BerzeLiUs hit No. 83 with 5.25 petaflops, making it Sweden’s fastest system. It runs HPC, AI and data analytics for academic and commercial research on a 200G InfiniBand network linking 60 NVIDIA DGX systems. It’s one of 15 systems on the list based on NVIDIA DGX systems.

Eleven Systems Fuel HPL-AI Momentum

In another sign of the increasing importance of AI workloads, 11 systems on the list reported their scores on HPL-AI, more than 5x the number from last June. Most used a major optimization of the code released in March, the first upgrade since the benchmark was released by researchers at the University of Tennessee in late 2018.

The new software streamlines communications, enabling direct GPU-to-GPU links that eliminate waiting for a host CPU. It also carries out communications in 16-bit precision rather than the slower 32-bit format that is the default on Linpack.

“We cut time spent on chip-to-chip communications in half and enabled some other workloads to run in parallel, so the average improvement of the new versus the original code is about 2.7x,” said Azzam Haidar Ahmad, who helped define the benchmark and is now a senior engineer at NVIDIA.

While focused on mixed-precision math, the benchmark still delivers the same 64-bit accuracy as Linpack, thanks to a looping technique in HPL-AI, known as iterative refinement, that rapidly corrects the lower-precision results.
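The general idea behind that refinement loop can be illustrated with classic mixed-precision iterative refinement. The Python sketch below is a simplified stand-in, not the HPL-AI code: it assumes NumPy, factors the matrix once in float32 (standing in for the 16-bit math HPL-AI uses, since NumPy has no fast half-precision LU), then repeatedly corrects the answer using residuals computed in float64. The function names `lu_factor_low`, `lu_solve_low` and `mixed_precision_solve` are hypothetical.

```python
import numpy as np


def lu_factor_low(a):
    """LU factorization with partial pivoting, computed entirely in float32.

    float32 is a stand-in for the lower precision a real mixed-precision
    solver would use. Returns packed L/U factors and the row permutation.
    """
    lu = np.array(a, dtype=np.float32)  # low-precision working copy
    n = lu.shape[0]
    perm = np.arange(n)
    for k in range(n - 1):
        # Partial pivoting: move the largest remaining entry of column k to row k.
        p = k + int(np.argmax(np.abs(lu[k:, k])))
        if p != k:
            lu[[k, p]] = lu[[p, k]]
            perm[[k, p]] = perm[[p, k]]
        # Store the multipliers (L) below the diagonal, then update the trailing block.
        lu[k + 1:, k] /= lu[k, k]
        lu[k + 1:, k + 1:] -= np.outer(lu[k + 1:, k], lu[k, k + 1:])
    return lu, perm


def lu_solve_low(lu, perm, b):
    """Solve A x = b in float32 using the packed factors from lu_factor_low."""
    y = np.asarray(b)[perm].astype(np.float32)
    n = lu.shape[0]
    for i in range(n):  # forward substitution with unit-diagonal L
        y[i] -= lu[i, :i] @ y[:i]
    for i in range(n - 1, -1, -1):  # back substitution with U
        y[i] = (y[i] - lu[i, i + 1:] @ y[i + 1:]) / lu[i, i]
    return y


def mixed_precision_solve(a, b, max_iters=10):
    """Low-precision solve followed by float64 iterative refinement."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    lu, perm = lu_factor_low(a)  # the O(n^3) work happens once, in float32
    x = lu_solve_low(lu, perm, b).astype(np.float64)
    eps = np.finfo(np.float64).eps
    a_norm = np.linalg.norm(a, np.inf)
    for _ in range(max_iters):
        r = b - a @ x  # residual computed in float64
        # Stop once the residual is as small as float64 rounding allows.
        if np.linalg.norm(r, np.inf) <= a.shape[0] * eps * a_norm * np.linalg.norm(x, np.inf):
            break
        # Cheap O(n^2) correction that reuses the low-precision factors.
        x += lu_solve_low(lu, perm, r).astype(np.float64)
    return x
```

The expensive O(n^3) factorization runs only once, in low precision, while each refinement pass costs only O(n^2). That split is why a mixed-precision solver can run much faster than an all-FP64 one yet still return a double-precision-quality answer for reasonably well-conditioned matrices. The published benchmark uses a more elaborate, GMRES-based refinement on top of a 16-bit factorization, so treat this only as an illustration of the principle.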

Summit Hits 1+ Exaflops on HPL-AI

With the optimizations, scores rose significantly over those reported last year using the early version of the code.

For example, the Summit supercomputer at Oak Ridge National Lab, the first to embrace HPL-AI, announced a score of 445 petaflops on the first version of the code in 2019. Summit’s test this year using the latest version of HPL-AI hit 1.15 exaflops.

Others adopting the benchmark include Japan’s Fugaku supercomputer, the world’s fastest system; NVIDIA’s Selene, the world’s fastest commercial system; and Juwels, Germany’s most powerful supercomputer.

“We’re using the HPL-AI benchmark because it’s a good measure of the mixed-precision work in a growing number of our AI and scientific workloads — and it reflects accurate 64-bit floating point results, too,” said Thomas Lippert, director of the Jülich Supercomputing Center.

GPUs Lead the Green500 Pack

On the Green500, which measures energy efficiency on Linpack, 35 of the top 40 systems run on NVIDIA technologies, including nine of the top 10. Supercomputers on the list that use NVIDIA GPUs are 3.5x more energy efficient than ones that don’t, a consistent and growing trend.

To learn more, tune in to the NVIDIA ISC 2021 Special Address on Monday, June 28, at 9:30 a.m. PT. You’ll get an in-depth overview of the latest news from NVIDIA’s Marc Hamilton, followed by a live Q&A panel with NVIDIA HPC experts.