Amazon Web Services and NVIDIA will bring the latest generative AI technologies to enterprises worldwide.

In a conversation spanning AI and cloud computing, NVIDIA founder and CEO Jensen Huang joined AWS CEO Adam Selipsky on stage Tuesday at AWS re:Invent 2023, held at the Venetian Expo Center in Las Vegas.

Selipsky said he was “thrilled” to announce the expansion of the partnership between AWS and NVIDIA with more offerings that will deliver advanced graphics, machine learning and generative AI infrastructure.

The two announced that AWS will be the first cloud provider to adopt the latest NVIDIA GH200 NVL32 Grace Hopper Superchip with new multi-node NVLink technology; that NVIDIA DGX Cloud is coming to AWS; and that AWS has integrated some of NVIDIA's most popular software libraries.

Huang opened the conversation by highlighting the integration of key NVIDIA libraries with AWS, ranging from NVIDIA AI Enterprise to cuQuantum to BioNeMo and serving domains such as data processing, quantum computing and digital biology.

The partnership opens AWS to the millions of developers and nearly 40,000 companies that use these libraries, Huang said, adding that it's great to see AWS expand its cloud instance offerings to include NVIDIA's new L4, L40S and, soon, H200 GPUs.

Selipsky then introduced the AWS debut of the NVIDIA GH200 Grace Hopper Superchip, a significant advancement in cloud computing, and prompted Huang for further details.

“Grace Hopper, which is GH200, connects two revolutionary processors together in a really unique way,” Huang said. He explained that the GH200 connects NVIDIA’s Grace Arm CPU with its H200 GPU using a chip-to-chip interconnect called NVLink, at an astonishing one terabyte per second.

Each processor has direct access to the high-performance HBM and efficient LPDDR5X memory. This configuration results in 4 petaflops of processing power and 600GB of memory for each superchip.

AWS and NVIDIA connect 32 Grace Hopper Superchips in each rack using a new NVLink switch. Each of these 32-superchip, NVLink-connected nodes can be a single Amazon EC2 instance. Integrated with AWS Nitro and Elastic Fabric Adapter (EFA) networking, GH200 NVL32 instances let customers scale to thousands of GH200 Superchips.
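As a rough back-of-the-envelope check, multiplying the per-superchip figures quoted above by the 32 superchips in one NVL32 node gives the approximate scale of a single instance. This sketch assumes the figures simply add up across superchips and uses the rounded 600GB memory figure, so the totals are approximate peak numbers, not official specifications:

```latex
\begin{aligned}
\text{compute per NVL32 node} &\approx 32 \times 4\ \text{PFLOPS} = 128\ \text{PFLOPS} \\
\text{memory per NVL32 node}  &\approx 32 \times 600\ \text{GB} = 19{,}200\ \text{GB} \approx 19.2\ \text{TB}
\end{aligned}
```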

“With AWS Nitro, that becomes basically one giant virtual GPU instance,” Huang said.

The combination of AWS expertise in highly scalable cloud computing plus NVIDIA innovation with Grace Hopper will make this an amazing platform that delivers the highest performance for complex generative AI workloads, Huang said.

“It’s great to see the infrastructure, but it extends to the software, the services and all the other workflows that they have,” Selipsky said, introducing NVIDIA DGX Cloud on AWS.

This partnership will bring about the first DGX Cloud AI supercomputer powered by GH200 Superchips, showcasing AWS's cloud infrastructure and NVIDIA's AI expertise.

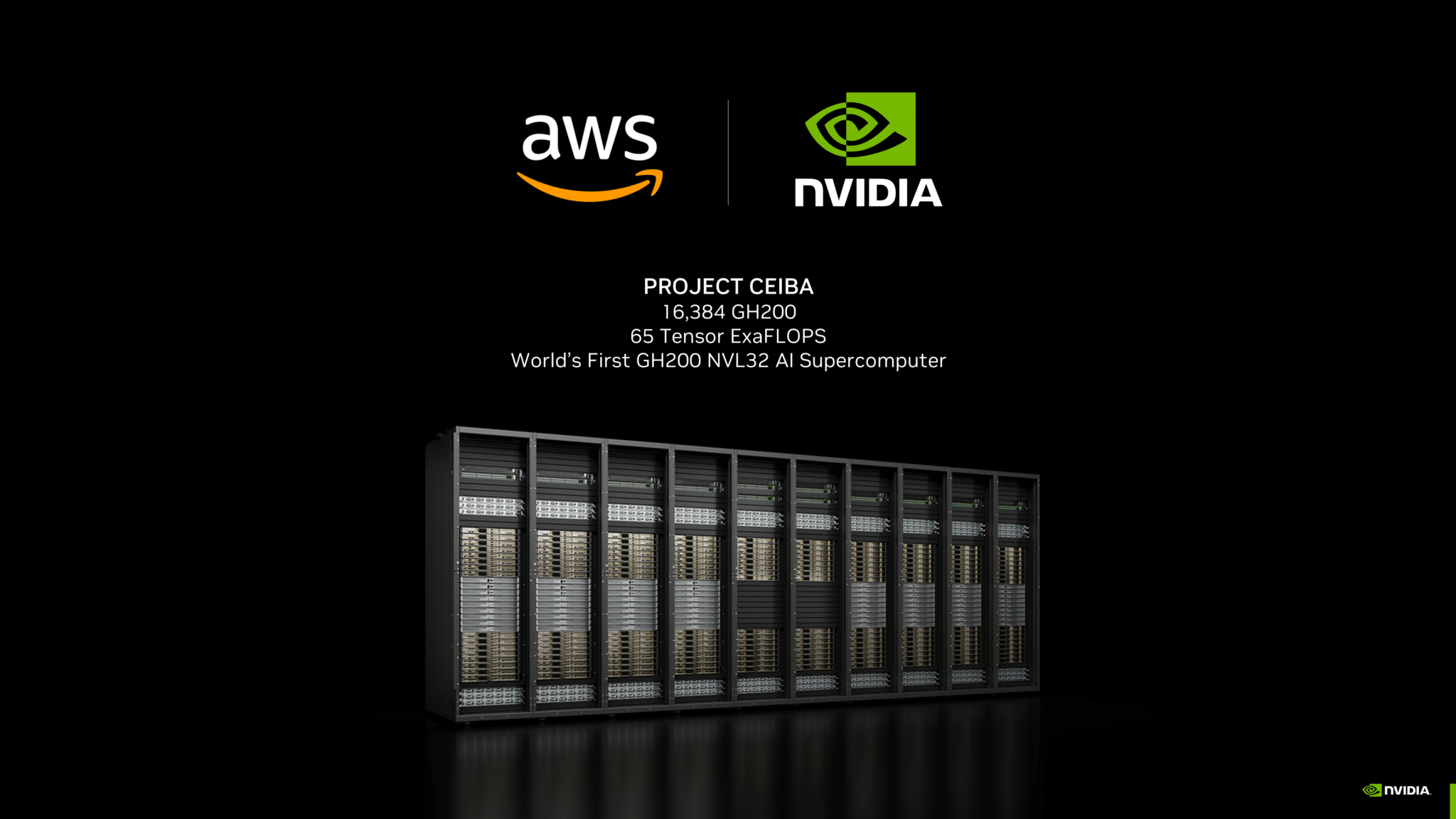

Following up, Huang announced that this new DGX Cloud supercomputer design on AWS, codenamed Project Ceiba, will also serve as NVIDIA's newest AI supercomputer for its own AI research and development.

Named after the majestic Amazonian Ceiba tree, the Project Ceiba DGX Cloud cluster incorporates 16,384 GH200 Superchips to achieve 65 exaflops of AI processing power, Huang said.
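The 65 exaflops figure is consistent with the per-superchip number quoted earlier. This worked arithmetic assumes each GH200 contributes its 4 petaflops peak, that the total scales linearly (the article does not state the numeric precision behind either figure), and that the cluster is built entirely from 32-superchip NVL32 nodes:

```latex
\begin{aligned}
16{,}384 \times 4\ \text{PFLOPS} &= 65{,}536\ \text{PFLOPS} \approx 65.5\ \text{EFLOPS} \\
16{,}384 \div 32 &= 512\ \text{NVL32 nodes}
\end{aligned}
```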

Ceiba will be the world's first GH200 NVL32 AI supercomputer and the newest AI supercomputer in NVIDIA DGX Cloud, Huang said.

Huang described the Project Ceiba AI supercomputer as "utterly incredible," saying it will be able to cut the training time of the largest language models in half.

NVIDIA’s AI engineering teams will use this new supercomputer in DGX Cloud to advance AI for graphics, LLMs, image/video/3D generation, digital biology, robotics, self-driving cars, Earth-2 climate prediction and more, Huang said.

“DGX is NVIDIA’s cloud AI factory,” Huang said, noting that AI is now key to doing NVIDIA’s own work in everything from computer graphics to creating digital biology models to robotics to climate simulation and modeling.

“DGX Cloud is also our AI factory to work with enterprise customers to build custom AI models,” Huang said. “They bring data and domain expertise; we bring AI technology and infrastructure.”

Huang also announced that AWS will bring four new Amazon EC2 instances, based on NVIDIA GH200 NVL32, H200, L40S and L4 GPUs, to market early next year.

Selipsky wrapped up the conversation by announcing that GH200-based instances and DGX Cloud will be available on AWS in the coming year.

You can catch the discussion and Selipsky’s entire keynote on AWS’s YouTube channel.