To enhance Japan’s AI sovereignty and strengthen its research and development capabilities, Japan’s National Institute of Advanced Industrial Science and Technology (AIST) will integrate thousands of NVIDIA H200 Tensor Core GPUs into its AI Bridging Cloud Infrastructure 3.0 supercomputer (ABCI 3.0). The Hewlett Packard Enterprise Cray XD system will feature NVIDIA Quantum-2 InfiniBand networking for superior performance and scalability.

ABCI 3.0 is the latest iteration of Japan’s large-scale Open AI Computing Infrastructure designed to advance AI R&D. This collaboration underlines Japan’s commitment to advancing its AI capabilities and fortifying its technological independence.

“In August 2018, we launched ABCI, the world’s first large-scale open AI computing infrastructure,” said AIST Executive Officer Yoshio Tanaka. “Building on our experience over the past several years managing ABCI, we’re now upgrading to ABCI 3.0. In collaboration with NVIDIA and HPE we aim to develop ABCI 3.0 into a computing infrastructure that will advance further research and development capabilities for generative AI in Japan.”

“As generative AI prepares to catalyze global change, it’s crucial to rapidly cultivate research and development capabilities within Japan,” said AIST Solutions Co. Producer and Head of ABCI Operations Hirotaka Ogawa. “I’m confident that this major upgrade of ABCI in our collaboration with NVIDIA and HPE will enhance ABCI’s leadership in domestic industry and academia, propelling Japan towards global competitiveness in AI development and serving as the bedrock for future innovation.”

ABCI 3.0: A New Era for Japanese AI Research and Development

ABCI 3.0 is constructed and operated by AIST, its business subsidiary, AIST Solutions, and its system integrator, Hewlett Packard Enterprise (HPE).

The ABCI 3.0 project follows support from Japan’s Ministry of Economy, Trade and Industry, known as METI, for strengthening the country’s computing resources through the Economic Security Fund. It is part of a broader $1 billion initiative by METI that includes both ABCI efforts and investments in cloud AI computing.

NVIDIA is closely collaborating with METI on research and education following a visit last year by company founder and CEO, Jensen Huang, who met with political and business leaders, including Japanese Prime Minister Fumio Kishida, to discuss the future of AI.

NVIDIA’s Commitment to Japan’s Future

Huang pledged to collaborate on research, particularly in generative AI, robotics and quantum computing, to invest in AI startups and provide product support, training and education on AI.

During his visit, Huang emphasized that “AI factories” — next-generation data centers designed to handle the most computationally intensive AI tasks — are crucial for turning vast amounts of data into intelligence.

“The AI factory will become the bedrock of modern economies across the world,” Huang said during a meeting with the Japanese press in December.

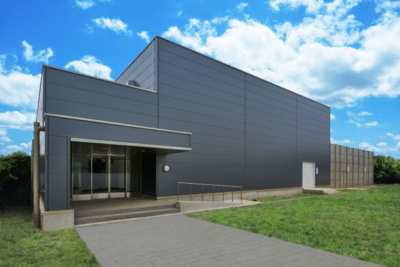

With its ultra-high-density data center and energy-efficient design, ABCI provides a robust infrastructure for developing AI and big data applications.

The system is expected to come online by the end of this year and offer state-of-the-art AI research and development resources. It will be housed in Kashiwa, near Tokyo.

Unmatched Computing Performance and Efficiency

The facility will offer:

- 6 AI exaflops of computing capacity, a measure of AI-specific performance without sparsity

- 410 double-precision petaflops, a measure of general computing capacity

- Quantum-2 InfiniBand networking that connects each node at 200GB/s of bisection bandwidth
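The first two figures are quoted in different units (exaflops and petaflops), which makes them hard to compare at a glance. The short sketch below converts both to petaflops and takes their ratio. It uses only the numbers listed above; the variable names are illustrative, and the ratio is a rough comparison of two different precisions, not a benchmark result.

```python
# Figures quoted in the list above.
AI_EXAFLOPS = 6.0        # AI-specific performance, without sparsity
FP64_PETAFLOPS = 410.0   # double-precision (general computing) performance

PETAFLOPS_PER_EXAFLOP = 1000.0

# Put both figures on the same scale.
ai_petaflops = AI_EXAFLOPS * PETAFLOPS_PER_EXAFLOP

# How many times larger the AI figure is than the double-precision figure.
ai_to_fp64_ratio = ai_petaflops / FP64_PETAFLOPS

print(f"AI performance:   {ai_petaflops:.0f} petaflops")
print(f"FP64 performance: {FP64_PETAFLOPS:.0f} petaflops")
print(f"Ratio (AI / FP64): about {ai_to_fp64_ratio:.1f}x")
```

The gap reflects the lower-precision arithmetic typically used for AI workloads, so the two numbers describe different kinds of work and should not be read as interchangeable.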

NVIDIA technology forms the backbone of this initiative, with hundreds of nodes, each equipped with 8 NVLink-connected H200 GPUs, providing unprecedented computational performance and efficiency.

NVIDIA H200 is the first GPU to offer over 140 gigabytes (GB) of HBM3e memory at 4.8 terabytes per second (TB/s). The H200’s larger and faster memory accelerates generative AI and LLMs, while advancing scientific computing for HPC workloads with better energy efficiency and lower total cost of ownership.
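A back-of-envelope calculation can make the memory figures more concrete. The sketch below uses the capacity and bandwidth quoted above, treating "over 140 GB" as a lower bound, and estimates how long one GPU would take to read its entire memory once. It also adds up the memory of the 8 GPUs in a node. The function names are illustrative, and the idea that LLM token generation is often limited by memory bandwidth is an assumption brought in for illustration, not a claim made in the article.

```python
# Figures quoted in the article; "over 140 GB" is treated as a lower bound.
HBM_CAPACITY_GB = 140.0      # HBM3e capacity per H200 GPU
HBM_BANDWIDTH_TBPS = 4.8     # memory bandwidth per H200 GPU, in TB/s
GPUS_PER_NODE = 8            # NVLink-connected GPUs per node


def full_memory_sweep_ms(capacity_gb: float, bandwidth_tbps: float) -> float:
    """Time in milliseconds to read the whole memory once at peak bandwidth."""
    total_bytes = capacity_gb * 1e9
    bytes_per_second = bandwidth_tbps * 1e12
    return total_bytes / bytes_per_second * 1e3


def node_memory_gb(capacity_gb: float, gpus_per_node: int) -> float:
    """Combined GPU memory of one node, in gigabytes."""
    return capacity_gb * gpus_per_node


sweep_ms = full_memory_sweep_ms(HBM_CAPACITY_GB, HBM_BANDWIDTH_TBPS)
node_gb = node_memory_gb(HBM_CAPACITY_GB, GPUS_PER_NODE)

print(f"One full pass over GPU memory: about {sweep_ms:.1f} ms")
print(f"GPU memory per 8-GPU node: at least {node_gb:.0f} GB")
```

Under these assumptions, a model that fills most of one GPU's memory could be streamed through roughly 30 times per second at peak bandwidth, which is why both the size and the speed of the memory matter for generative AI workloads. Real throughput depends on batching, caching and software, so this is an idealized bound only.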

NVIDIA H200 GPUs are 15X more energy-efficient than ABCI’s previous-generation architecture for AI workloads such as LLM token generation.

The integration of advanced NVIDIA Quantum-2 InfiniBand with In-Network computing — where networking devices perform computations on data, offloading the work from the CPU — ensures efficient, high-speed, low-latency communication, crucial for handling intensive AI workloads and vast datasets.

ABCI boasts world-class computing and data processing power, serving as a platform to accelerate joint AI R&D with industries, academia and governments.

METI’s substantial investment is a testament to Japan’s strategic vision to enhance AI development capabilities and accelerate the use of generative AI.

By subsidizing AI supercomputer development, Japan aims to reduce the time and costs of developing next-generation AI technologies, positioning itself as a leader in the global AI landscape.