A new study underscores the potential of AI and accelerated computing to improve energy efficiency and combat climate change, efforts in which NVIDIA has long been deeply engaged.

The study, called “Rethinking Concerns About AI’s Energy Use,” provides a well-researched examination of how AI can (and in many cases already does) play a large role in addressing these critical needs.

Citing dozens of sources, the study from the Information Technology and Innovation Foundation (ITIF), a Washington-based think tank focused on science and technology policy, calls for governments to accelerate adoption of AI as a significant new tool to drive energy efficiency across many industries.

AI can help “reduce carbon emissions, support clean energy technologies, and address climate change,” it said.

How AI Drives Energy Efficiency

The report documents ways machine learning is already helping many sectors reduce their impact on the environment.

For example, it noted:

- Farmers are using AI to lessen their use of fertilizer and water.

- Utilities are adopting it to make the electric grid more efficient.

- Logistics operations use it to optimize delivery routes, reducing the fuel consumption of their fleets.

- Factories are deploying it to reduce waste and increase energy efficiency.

The study argues that AI advances energy efficiency in these and many other ways, and it calls on policymakers “to ensure AI is part of the solution, not part of the problem, when it comes to the environment.”

It also recommends adopting AI broadly across government agencies to “help the public sector reduce carbon emissions through more efficient digital services, smart cities and buildings, intelligent transportation systems, and other AI-enabled efficiencies.”

Reviewing the Data on AI

The study’s author, Daniel Castro, saw in current predictions about AI’s energy use a repeat of the exaggerated forecasts that emerged during the rise of the internet more than two decades ago.

“People extrapolate from early studies, but don’t consider all the important variables including improvements you see over time in digitalization like energy efficiency,” said Castro, who leads ITIF’s Center for Data Innovation.

“The danger is policymakers could miss the big picture and hold back beneficial uses of AI that are having positive impacts, especially in regulated areas like healthcare,” he said.

“For example, we’ve had electronic health records since the 1980s, but it took focused government investments to get them deployed,” he added. “Now AI brings big opportunities for decarbonization across the government and the economy.”

Optimizing Efficiency Across Data Centers

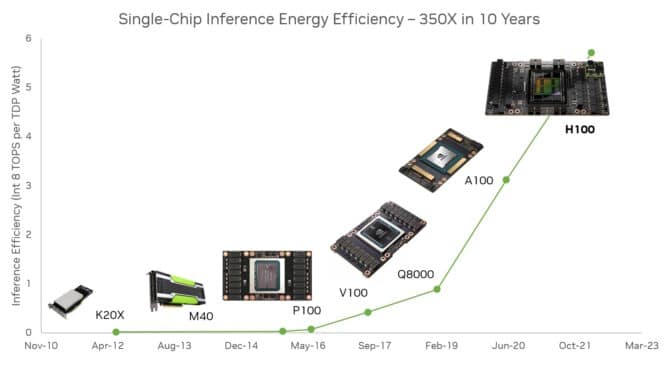

Data centers of every size can use AI and accelerated computing to maximize their energy efficiency.

For instance, NVIDIA’s AI-based weather-prediction model, FourCastNet, is about 45,000x faster and consumes 12,000x less energy to produce a forecast than current techniques. That promises efficiency boosts for supercomputers around the world that run continuously to provide regional forecasts, Bjorn Stevens, director of the Max Planck Institute for Meteorology, said in a blog.
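The two ratios also say something about power draw. Energy is average power multiplied by run time ($E = P\,t$), so if both figures are measured against the same baseline forecast run (an assumption; the comparison above doesn't state its baseline), the implied ratio of average power is:

$$
\frac{P_{\text{FourCastNet}}}{P_{\text{baseline}}}
= \frac{E_{\text{FourCastNet}}/t_{\text{FourCastNet}}}{E_{\text{baseline}}/t_{\text{baseline}}}
= \frac{E_{\text{FourCastNet}}}{E_{\text{baseline}}}\cdot\frac{t_{\text{baseline}}}{t_{\text{FourCastNet}}}
\approx \frac{1}{12{,}000}\cdot 45{,}000 = 3.75
$$

Under that assumption, the AI model may draw a few times more power while it runs, but for so much less time that the energy per forecast still drops by roughly four orders of magnitude.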

Overall, data centers could save a whopping 19 terawatt-hours of electricity a year if all AI, high-performance computing and networking offloads were run on GPU and DPU accelerators instead of CPUs, according to NVIDIA’s calculations. That’s the equivalent of the energy consumption of 2.9 million passenger cars driven for a year.
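As a rough sanity check on the car comparison, dividing the estimated savings by the number of cars gives the annual energy per car that the comparison implies. This is a back-of-the-envelope sketch that uses only the two figures quoted above; it is not NVIDIA’s calculation, whose method and per-car assumptions aren’t spelled out here, and the function name `implied_kwh_per_car` is purely illustrative.

```python
# Back-of-the-envelope check of the car comparison.
# Inputs are the two figures quoted in the text; the method itself is an
# illustrative assumption, not NVIDIA's published calculation.

ANNUAL_SAVINGS_TWH = 19.0  # estimated data-center savings, TWh per year
EQUIVALENT_CARS = 2.9e6    # passenger cars driven for a year
KWH_PER_TWH = 1e9          # 1 TWh = 10^9 kWh


def implied_kwh_per_car(savings_twh: float, cars: float) -> float:
    """Return the annual energy per car (kWh) implied by equating the savings to `cars` cars."""
    if cars <= 0:
        raise ValueError("cars must be positive")
    return savings_twh * KWH_PER_TWH / cars


per_car = implied_kwh_per_car(ANNUAL_SAVINGS_TWH, EQUIVALENT_CARS)
print(f"{per_car:,.0f} kWh per car per year")  # prints "6,552 kWh per car per year"
```

The result, roughly 6,550 kWh per car per year, is the per-vehicle figure the comparison implicitly rests on; a different per-car assumption would change the number of cars, not the 19 TWh estimate itself.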

Last year, the National Energy Research Scientific Computing Center (NERSC), the U.S. Department of Energy’s lead facility for open science, documented its advances with accelerated computing.

In NERSC’s tests, NVIDIA A100 Tensor Core GPUs improved energy efficiency 5x on average across four key scientific applications. A weather-forecasting application logged gains of nearly 10x.

AI, Accelerated Computing Advance Climate Science

The combination of accelerated computing and AI is creating new scientific instruments to help understand and combat climate change.

In 2021, NVIDIA announced Earth-2, an initiative to build a digital twin of Earth on a supercomputer capable of simulating climate on a global scale. It’s among a handful of similarly ambitious efforts around the world.

One example is Destination Earth, a pan-European project to create digital twins of the planet. It’s using accelerated computing, AI and “collaboration on an unprecedented scale,” said the project’s leader, Peter Bauer, a veteran with more than 20 years at Europe’s top weather-forecasting center.

Experts in the utility sector agree AI is key to advancing sustainability.

“AI will play a crucial role maintaining stability for an electric grid that’s becoming exponentially more complex with large numbers of low-capacity, variable generation sources like wind and solar coming online, and two-way power flowing into and out of houses,” said Jeremy Renshaw, a senior program manager at the Electric Power Research Institute, in a blog. The institute is an independent nonprofit that collaborates with more than 450 companies in 45 countries.

Learn more about sustainable computing as well as NVIDIA’s commitment to use 100% renewable energy starting in fiscal year 2025.