Note: This post was updated on Aug. 9, 2022.

What is the metaverse?

The metaverse is the “next evolution of the internet, the 3D internet,” explained NVIDIA CEO Jensen Huang during an address at SIGGRAPH, the world’s largest computer graphics conference.

Commercialized two decades ago, the internet was about web pages hyperlinked over a network, Huang said.

A decade ago, Web 2.0 emerged, and “the internet was about cloud services connected to applications that are oftentimes enjoyed on mobile devices.”

“Now, Web 3.0 is here,” Huang said. “The metaverse is the internet in 3D, a network of connected, persistent, virtual worlds.”

The metaverse will extend 2D web pages into 3D spaces and worlds, Huang said. Hyperlinking will “evolve into hyper-jumping between 3D worlds.”

“Like games today, 3D worlds are experienced through 2D displays and TVs,” Huang said. “And, on occasion, with VR and AR glasses.”

Those ideas are already being put to work with NVIDIA Omniverse, a platform for connecting 3D worlds into a shared virtual universe, or metaverse.

“The opportunity of the metaverse is vast — larger than that of the physical world,” NVIDIA Vice President for Omniverse and Simulation Rev Lebaredian told the audience at SIGGRAPH.

“Just like in the infancy of the internet, no one can predict exactly how and how large the metaverse will grow — but today, we know we can lay the foundations,” he said.

“What are metaverse applications?” Huang asked. “They’re already here.”

- Fashion designers, furniture and goods makers, and retailers offer virtual 3D products you can try with augmented reality, he explained.

- Telcos are creating digital twins of their radio networks to optimize and deploy radio towers, Huang said.

- Companies are creating “digital twins” of warehouses and factories to optimize their layout and logistics, he added.

- And “NVIDIA is building a digital twin of the Earth to predict the climate decades into the future.”

“The metaverse will grow organically as the internet did — continuously and simultaneously across all industries, but exponentially, because of computing’s compounding and network effects,” Huang said.

“And, as with the internet, the metaverse is a computing platform that requires a new programming model, a new computing architecture and new standards,” Huang said.

How NVIDIA Omniverse Creates, Connects Worlds Within the Metaverse

So how does Omniverse work?

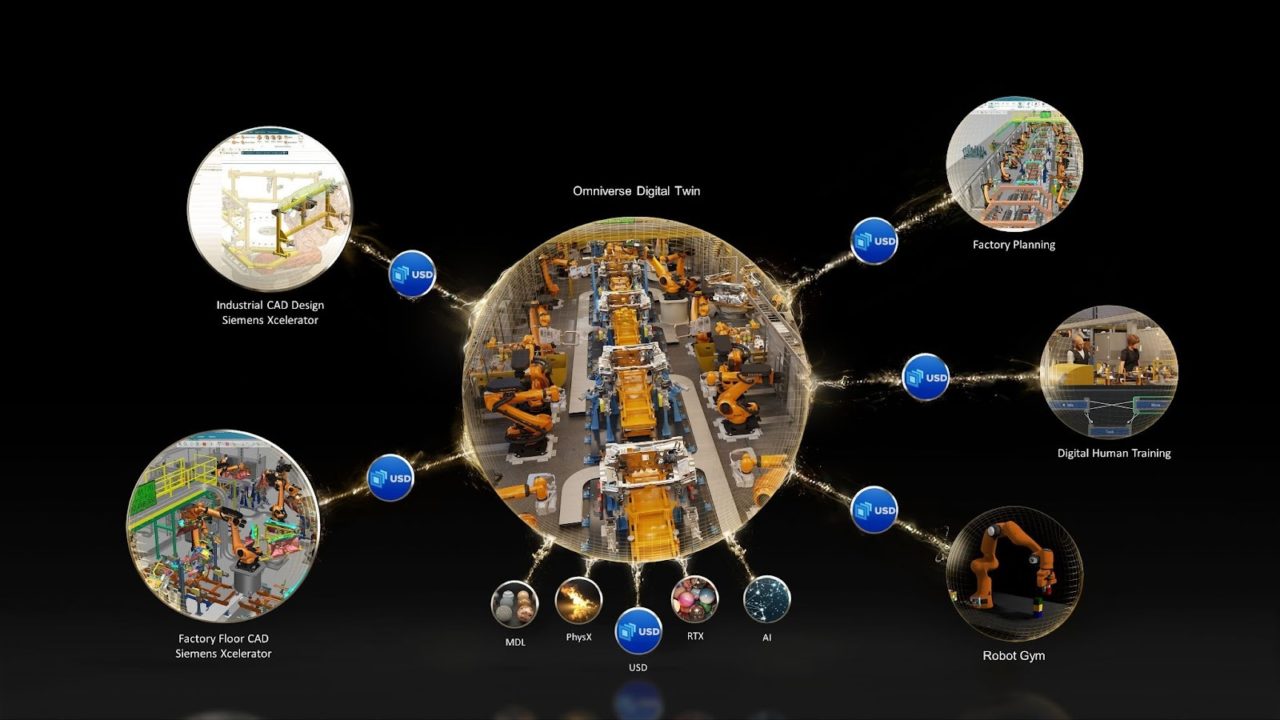

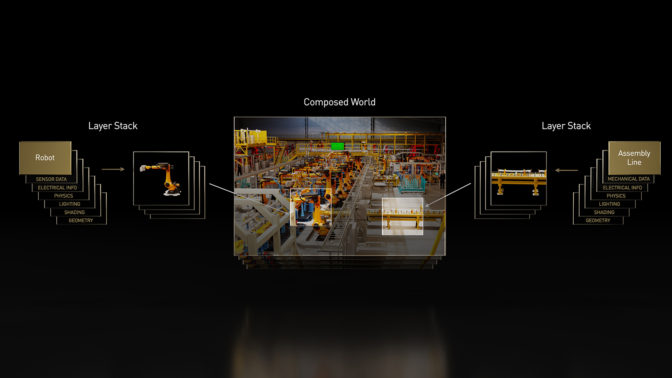

Omniverse is built on USD, or Universal Scene Description, an interchange framework invented by Pixar in 2012.

Released as open-source software in 2016, USD provides a rich, common language for defining, packaging, assembling and editing 3D data for a growing array of industries and applications.

Lebaredian and others say USD is to the emerging metaverse what hypertext markup language, or HTML, was to the web — a common language that can be used, and advanced, to support the metaverse.
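To make the HTML comparison concrete, here is a minimal sketch of what a USD scene can look like in its human-readable text form (`.usda`). It is written in plain Python and does not use the USD library itself; the helper name `usda_layer` and the prim names `World` and `Ball` are hypothetical, chosen only for illustration.

```python
def usda_layer(root_name, spheres):
    """Return the text of a minimal .usda layer.

    `spheres` maps a prim name to a sphere radius. Hypothetical helper:
    it only formats text and does not validate it against USD.
    """
    lines = [
        "#usda 1.0",                          # header that identifies a text USD layer
        "(",
        f'    defaultPrim = "{root_name}"',   # layer metadata: which prim is the entry point
        ")",
        "",
        f'def Xform "{root_name}"',           # a transformable root prim
        "{",
    ]
    for name, radius in spheres.items():
        lines += [
            f'    def Sphere "{name}"',       # child prim of the built-in Sphere type
            "    {",
            f"        double radius = {float(radius)}",
            "    }",
        ]
    lines.append("}")
    return "\n".join(lines) + "\n"


layer_text = usda_layer("World", {"Ball": 2})
print(layer_text)
```

Much as an HTML file describes a page as nested elements, the resulting text describes a scene as nested, typed prims with attributes, which is what lets different tools read and edit the same 3D data.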

Omniverse is a platform built from the ground up to be physically based. Thanks to NVIDIA RTX graphics technologies, it is fully path traced, simulating how each ray of light bounces around a virtual world in real time.

Omniverse simulates physics with NVIDIA PhysX. It simulates materials with NVIDIA MDL, or material definition language.

And Omniverse is fully integrated with NVIDIA AI, which is key to advancing robotics.

Omniverse is cloud native, scales across multiple GPUs, runs on any RTX platform and streams remotely to any device.

You can teleport into Omniverse with virtual reality, and AIs can teleport out of Omniverse with augmented reality.

Metaverse Made Real

NVIDIA released Omniverse to open beta in December 2020 and NVIDIA Omniverse Enterprise in November 2021. Professionals in a wide variety of industries quickly put it to work.

And industrial manufacturing leader Siemens and NVIDIA are already collaborating to accelerate industrial automation.

The partnership brings together Siemens Xcelerator’s vast industrial ecosystem and NVIDIA Omniverse’s AI-enabled, physically accurate, real-time virtual world engine. This will enable full-design-fidelity, live digital twins that connect software-defined AI systems from edge to cloud.

Visual effects pioneer Industrial Light & Magic is testing Omniverse to bring together internal and external tool pipelines from multiple studios.

Omniverse lets them collaborate, render final shots in real time and create massive virtual sets like holodecks.

Multinational networking and telecommunications company Ericsson uses Omniverse to simulate 5G wave propagation in real time, minimizing multi-path interference in dense city environments.

Infrastructure engineering software company Bentley Systems is using Omniverse to create a suite of applications on the platform. Bentley’s iTwin platform creates a 4D infrastructure digital twin to simulate an infrastructure asset’s construction, then monitor and optimize its performance throughout its lifecycle.

The Metaverse Can Help Humans and Robots Collaborate

These virtual worlds are ideal for training robots.

One of the essential features of NVIDIA Omniverse is that it obeys the laws of physics. Omniverse can simulate particles and fluids, materials and even machines, right down to their springs and cables.

Modeling the natural world in a virtual one is a fundamental capability for robotics.

It allows users to create a virtual world where robots — powered by AI brains that can learn from their real or digital environments — can train.

Once the minds of these robots are trained using NVIDIA Isaac Sim, roboticists can load those brains onto an NVIDIA Jetson edge AI module, and connect them to real robots.

Those robots will come in all sizes and shapes — box movers, pick-and-place arms, forklifts, cars, trucks and even buildings.

In the future, a factory will be a robot, orchestrating many robots inside, building cars that are robots themselves.

How the Metaverse, and NVIDIA Omniverse, Enable Digital Twins

NVIDIA Omniverse provides a description for these shared worlds that people and robots can connect to — and collaborate in — to better work together.

It’s an idea that automaker BMW Group is already putting to work.

The automaker produces more than 2 million cars a year. In its most advanced factory, the company makes a car every minute. And each vehicle is customized differently.

BMW Group is using NVIDIA Omniverse to create a future factory, a perfect “digital twin.”

It’s designed entirely digitally and simulated from beginning to end in Omniverse.

The Omniverse-enabled factory can connect to enterprise resource planning systems, simulating the factory’s throughput. It can simulate new plant layouts. It can even become the dashboard for factory employees, who can uplink into a robot to teleoperate it.

The AI and software that run the virtual factory are the same as what will run the physical one. In other words, the virtual and physical factories and their robots will operate in a loop. They’re twins.
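The idea of one piece of software driving both twins can be sketched in a few lines. This is a toy illustration, not how BMW Group or Omniverse implements it: the `Factory` interface, the `SimulatedFactory` class, the `control_step` function and the threshold of 10 queued cars are all assumptions made up for this example.

```python
from typing import Protocol


class Factory(Protocol):
    """Hypothetical interface shared by the virtual and the physical factory."""

    def read_queue_length(self) -> int: ...

    def set_line_speed(self, speed: float) -> None: ...


class SimulatedFactory:
    """Stand-in for the virtual twin; a physical backend would expose the same methods."""

    def __init__(self, queue_length: int) -> None:
        self.queue_length = queue_length
        self.line_speed = 1.0

    def read_queue_length(self) -> int:
        return self.queue_length

    def set_line_speed(self, speed: float) -> None:
        self.line_speed = speed


def control_step(factory: Factory) -> float:
    """Run one control decision; the same code works against either twin."""
    # Assumed rule: speed the line up when more than 10 cars are waiting.
    speed = 1.5 if factory.read_queue_length() > 10 else 1.0
    factory.set_line_speed(speed)
    return speed


twin = SimulatedFactory(queue_length=12)
chosen_speed = control_step(twin)
```

Because `control_step` depends only on the shared interface, logic tested against the simulated factory could be pointed at a physical one without changes, which is the loop described above.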

No Longer Science Fiction

And the hardware needed to run it is here now.

Computer makers worldwide are building NVIDIA-Certified workstations and laptops, which all have been validated for running GPU-accelerated workloads with optimum performance, reliability and scale.

And in the data center, NVIDIA OVX computer systems, announced in March, can power large-scale digital twins. NVIDIA OVX is purpose-built to operate complex digital twin simulations that will run within NVIDIA Omniverse.

OVX will be used to simulate complex digital twins for modeling entire buildings, factories, cities and even the world.

Back to the Future

So what’s next?

The metaverse is “the next era in the evolution of the internet, a 3D spatial overlay of the web, linking the digital world to our physical world,” Lebaredian said.

In this new iteration of the internet, websites will become interconnected 3D spaces akin to the world we live in and experience every day, he explained.

“Many of these virtual worlds will be reflections of the real world, linked and synchronized in real-time,” he added.

Many of these virtual worlds will be designed for entertainment, socializing and gaming, matching the real world’s laws of physics in some cases, but often choosing to break them to make the experience more “fun.”

Extended reality (XR) devices and robots will act as portals between our physical world and virtual worlds.

“Humans will portal into a virtual world with VR and AR devices, while AIs will portal out to our world via physical robots,” Lebaredian said.

Learn more about the metaverse on the NVIDIA Technical Blog.