Buckle up. NVIDIA CEO Jensen Huang just laid out a singular vision filled with autonomous machines, super-intelligent AIs and sprawling virtual worlds, spanning everything from silicon to supercomputers to AI software, in a single presentation.

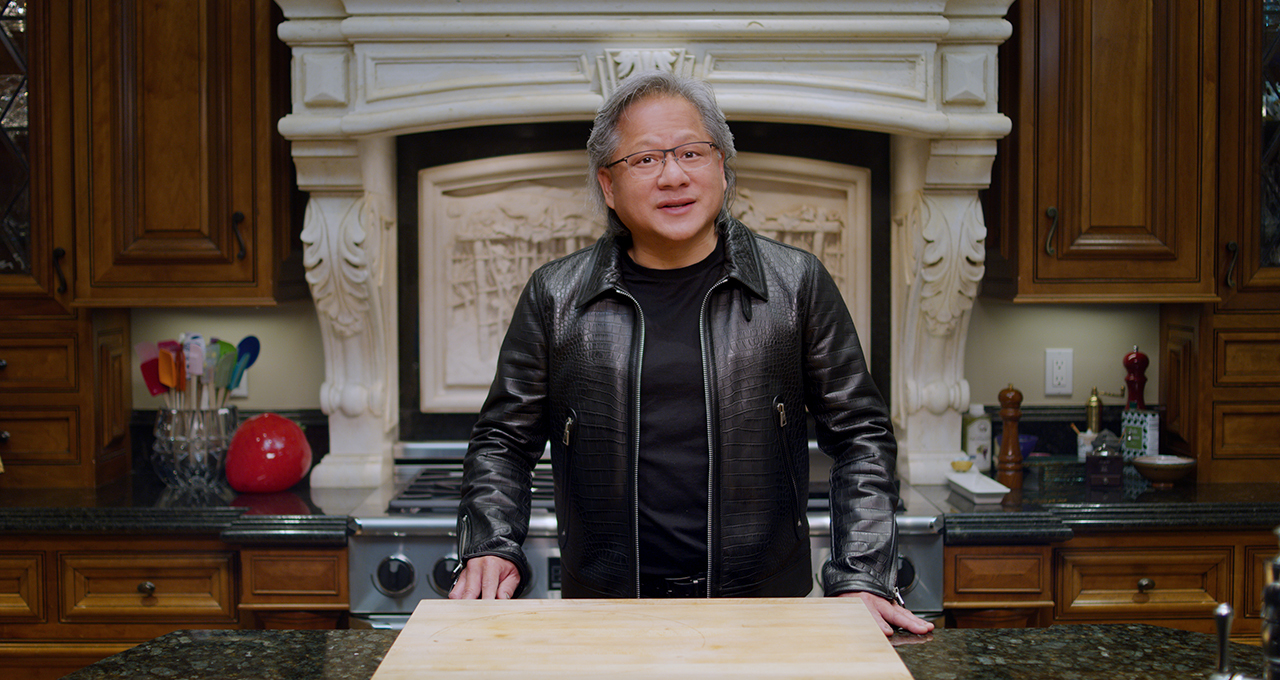

“NVIDIA is a computing platform company, helping to advance the work for the Da Vincis of our time – in language understanding, drug discovery, or quantum computing,” Huang said in a talk delivered from behind his kitchen counter to NVIDIA’s GPU Technology Conference. “NVIDIA is the instrument for your life’s work.”

During a presentation punctuated with product announcements, partnerships, and demos that danced up and down the modern technology stack, Huang spoke about how NVIDIA is investing heavily in CPUs, DPUs, and GPUs and weaving them into new data-center-scale computing solutions for researchers and enterprises.

He talked about NVIDIA as a software company, offering a host of software built on NVIDIA AI as well as NVIDIA Omniverse for simulation, collaboration, and training autonomous machines.

Finally, Huang spoke about how NVIDIA is moving automotive computing forward with a new SoC, NVIDIA Atlan, and new simulation capabilities.

CPUs, DPUs and GPUs

Huang announced NVIDIA’s first data center CPU, Grace, named after Grace Hopper, a U.S. Navy rear admiral and computer programming pioneer.

Grace is a highly specialized processor targeting the largest data-intensive HPC and AI applications, such as the training of next-generation natural-language processing models with more than one trillion parameters.

When tightly coupled with NVIDIA GPUs, a Grace-based system will deliver 10x faster performance than today’s state-of-the-art NVIDIA DGX-based systems, which run on x86 CPUs.

While the vast majority of data centers are expected to be served by existing CPUs, Grace will serve a niche segment of computing. “Grace highlights the beauty of Arm,” Huang said.

Huang also announced that the Swiss National Supercomputing Center will build a supercomputer, dubbed Alps, powered by Grace and NVIDIA’s next-generation GPU. The U.S. Department of Energy’s Los Alamos National Laboratory will also bring a Grace-powered supercomputer online in 2023, NVIDIA announced.

Accelerating Data Centers with BlueField-3

Further accelerating the infrastructure upon which hyperscale data centers, workstations, and supercomputers are built, Huang announced the NVIDIA BlueField-3 DPU.

The next-generation data processing unit will deliver the most powerful software-defined networking, storage and cybersecurity acceleration capabilities.

Where BlueField-2 offloaded the equivalent of 30 CPU cores, it would take 300 CPU cores to secure, offload, and accelerate network traffic at 400 Gbps the way BlueField-3 does, a 10x leap in performance, Huang explained.

‘Three Chips’

Grace and BlueField are key parts of a data center roadmap consisting of three chips: CPU, GPU, and DPU, Huang said. Each chip architecture will follow a two-year cadence, likely with a kicker in between. One year will focus on x86 platforms, the next on Arm platforms.

“Every year will see new exciting products from us,” Huang said. “Three chips, yearly leaps, one architecture.”

Expanding Arm into the Cloud

Arm, Huang said, is the most popular CPU architecture in the world. “For good reason – it’s super energy-efficient and its open licensing model inspires a world of innovators,” he said.

For other markets, like cloud, enterprise and edge data centers, supercomputing, and PCs, Arm is just starting. Huang announced key Arm partnerships: Amazon Web Services in cloud computing, Ampere Computing in scientific and cloud computing, Marvell in hyper-converged edge servers, and MediaTek to create a Chrome OS and Linux PC SDK and reference system.

DGX – A Computer for AI

Weaving together NVIDIA silicon and software, Huang announced upgrades to NVIDIA’s DGX Station, an “AI data center in a box” for workgroups, and the NVIDIA DGX SuperPOD, NVIDIA’s AI-data-center-as-a-product for intensive AI research and development.

The new DGX Station 320G pairs four NVIDIA A100 GPUs with 320 GB of super-fast HBM2e memory and 8 terabytes per second of memory bandwidth. Yet it plugs into a normal wall outlet and consumes just 1,500 watts of power, Huang said.

The DGX SuperPOD gets the new 80GB NVIDIA A100, bringing the SuperPOD to 90 terabytes of HBM2e memory. It’s been upgraded with NVIDIA BlueField-2, and NVIDIA is now offering it with the NVIDIA Base Command DGX management and orchestration tool.
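As a quick sanity check, both memory totals follow from the 80 GB of HBM2e on each A100. The DGX Station line uses the four GPUs stated above. The SuperPOD line assumes a full configuration of 140 DGX A100 systems with eight GPUs each; that node count is an assumption, not a figure given in the keynote.

$$
\begin{aligned}
\text{DGX Station 320G:}\quad & 4 \times 80\ \text{GB} = 320\ \text{GB}, \qquad \frac{8\ \text{TB/s}}{4} = 2\ \text{TB/s per GPU} \\
\text{DGX SuperPOD (assumed 140 nodes):}\quad & 140 \times 8 \times 80\ \text{GB} = 89{,}600\ \text{GB} \approx 90\ \text{TB}
\end{aligned}
$$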

NVIDIA EGX for Enterprise

Further democratizing AI, Huang introduced a new class of NVIDIA-certified systems, high-volume enterprise servers from top manufacturers. These systems are now certified to run the NVIDIA AI Enterprise software suite, which is exclusively certified for VMware vSphere 7, the world’s most widely used compute virtualization platform.

Expanding the ecosystem of NVIDIA-certified servers is a new wave of systems, announced today, featuring the NVIDIA A30 GPU for mainstream AI and data analytics and the NVIDIA A10 GPU for AI-enabled graphics, virtual workstations, and mixed compute and graphics workloads.

AI-on-5G

Huang also discussed NVIDIA’s AI-on-5G computing platform, which brings together 5G and AI into a new type of computing platform designed for the edge. It pairs the NVIDIA Aerial software development kit with the NVIDIA BlueField-2 A100, which combines a BlueField-2 DPU and an A100 GPU into “the most advanced PCIE card ever created.”

Partners Fujitsu, Google Cloud, Mavenir, Radisys and Wind River are all developing solutions for NVIDIA’s AI-on-5G platform.

NVIDIA AI and NVIDIA Omniverse

Virtual, real-time 3D worlds inhabited by people, AIs, and robots are no longer science fiction.

NVIDIA Omniverse is cloud-native, scalable to multiple GPUs, physically accurate, takes advantage of RTX real-time path tracing and DLSS, simulates materials with NVIDIA MDL, simulates physics with NVIDIA PhysX, and fully integrates NVIDIA AI, Huang explained.

“Omniverse was made to create shared virtual 3D worlds,” Huang said. “Ones not unlike the science fiction metaverse described by Neal Stephenson in his early 1990s novel ‘Snow Crash.’”

Huang announced that starting this summer, Omniverse will be available for enterprise licensing. Since its release in open beta, partners such as Foster and Partners in architecture, ILM in entertainment, Activision in gaming, and advertising powerhouse WPP have put Omniverse to work.

The Factory of the Future

To demonstrate what’s possible with Omniverse, Huang, along with Milan Nedeljković, member of the Board of Management of BMW AG, showed how a photorealistic, real-time digital model, a “digital twin” of one of BMW’s highly automated factories, can accelerate modern manufacturing.

“These new innovations will reduce the planning times, improve flexibility and precision and at the end produce 30 percent more efficient planning,” Nedeljković said.

A Host of AI Software

Huang announced NVIDIA Megatron — a framework for training Transformers, which have led to breakthroughs in natural-language processing. Transformers generate document summaries, complete phrases in email, grade quizzes, generate live sports commentary, even code.

He detailed new models for Clara Discovery, NVIDIA’s acceleration libraries for computational drug discovery, and a partnership with Schrödinger, the leading physics-based and machine learning computational platform for drug discovery and materials science.

To accelerate research into quantum computing, which relies on quantum bits, or qubits, that can be 0, 1, or a superposition of both, Huang introduced cuQuantum to speed up quantum circuit simulators so researchers can design better quantum computers.
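To make “quantum circuit simulator” concrete, here is a hypothetical, minimal state-vector simulator in Python with NumPy. It is not the cuQuantum API, and all function names are illustrative. An n-qubit register is stored as 2^n complex amplitudes, gates are applied as small tensor contractions, and a Hadamard followed by a CNOT puts two qubits into an equal superposition of 00 and 11. The memory helper, assuming 16-byte complex128 amplitudes, shows why such simulators quickly outgrow a CPU: 30 qubits already need 16 GiB.

```python
import numpy as np

# Hypothetical minimal state-vector simulator for illustration only;
# this is NOT the cuQuantum API. Qubit 0 is the most significant bit.

H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)  # Hadamard gate
X = np.array([[0, 1], [1, 0]], dtype=complex)                # Pauli-X (NOT) gate


def apply_single_qubit_gate(state, gate, target, n_qubits):
    """Apply a 2x2 gate to qubit `target` of an n-qubit state vector."""
    # View the 2**n amplitudes as a tensor with one axis of size 2 per qubit.
    psi = state.reshape([2] * n_qubits)
    # Contract the gate's input index with the target qubit's axis.
    psi = np.tensordot(gate, psi, axes=([1], [target]))
    # tensordot puts the gate's output axis first; move it back into place.
    psi = np.moveaxis(psi, 0, target)
    return psi.reshape(-1)


def apply_cnot(state, control, target, n_qubits):
    """Flip qubit `target` in every basis state where qubit `control` is 1."""
    psi = state.reshape([2] * n_qubits).copy()
    idx = [slice(None)] * n_qubits
    idx[control] = 1  # select the control == 1 half of the state
    # Indexing removes the control axis, so later axes shift down by one.
    axis = target if target < control else target - 1
    psi[tuple(idx)] = np.flip(psi[tuple(idx)], axis=axis).copy()
    return psi.reshape(-1)


def bell_state():
    """Prepare (|00> + |11>) / sqrt(2) from |00> with H then CNOT."""
    state = np.zeros(4, dtype=complex)
    state[0] = 1.0  # start in |00>
    state = apply_single_qubit_gate(state, H, target=0, n_qubits=2)
    return apply_cnot(state, control=0, target=1, n_qubits=2)


def memory_bytes(n_qubits):
    """Bytes needed to hold 2**n complex128 amplitudes (16 bytes each)."""
    return (2 ** n_qubits) * 16


if __name__ == "__main__":
    probs = np.abs(bell_state()) ** 2
    print(probs.round(3))            # [0.5 0.  0.  0.5]
    print(memory_bytes(30) / 2**30)  # 16.0 (GiB)
```

Each added qubit doubles the state vector, which is the scaling that GPU-accelerated simulators are built to absorb.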

To secure modern data centers, Huang announced NVIDIA Morpheus, a data center security platform for real-time, all-packet inspection built on NVIDIA AI, NVIDIA BlueField, NetQ network telemetry software, and EGX.

To accelerate conversational AI, Huang announced the availability of NVIDIA Jarvis (since renamed Riva) – a state-of-the-art deep learning AI for speech recognition, language understanding, translations, and expressive speech.

To accelerate recommender systems — the engine for search, ads, online shopping, music, books, movies, user-generated content, and news — Huang announced NVIDIA Merlin is now available on NGC, NVIDIA’s catalog of deep learning framework containers.

And to help customers turn their expertise into AI, Huang introduced NVIDIA TAO to fine-tune and adapt NVIDIA pre-trained models with data from customers and partners while protecting data privacy.

“There is infinite diversity of application domains, environments, and specializations,” Huang said. “No one has all the data – sometimes it’s rare, sometimes it’s a trade secret.”

The final piece is the inference server, NVIDIA Triton, to glean insights from the continuous streams of data coming into customers’ EGX servers or cloud instances, Huang said.

“Any AI model that runs on cuDNN, so basically every AI model,” Huang said. “From any framework: TensorFlow, PyTorch, ONNX, OpenVINO, TensorRT, or custom C++/Python backends.”

Advancing Automotive with NVIDIA DRIVE

Autonomous vehicles are “one of the most intense machine learning and robotics challenges – one of the hardest but also with the greatest impact,” Huang said.

NVIDIA is building modular, end-to-end solutions for the $10 trillion transportation industry so partners can leverage the parts they need.

Huang said NVIDIA DRIVE Orin, NVIDIA’s AV computing system-on-a-chip, which goes into production in 2022, was designed to be the car’s central computer.

Volvo Cars has been using the high-performance, energy-efficient compute of NVIDIA DRIVE since 2016 and developing AI-assisted driving features for new models on NVIDIA DRIVE Xavier with software developed in-house and by Zenseact, Volvo Cars’ autonomous driving software development company.

And Volvo Cars announced during the GTC keynote today that it will use NVIDIA DRIVE Orin to power the autonomous driving computer in its next-generation cars.

The decision deepens the companies’ collaboration, extending it to even more software-defined model lineups, beginning with the next-generation XC90, set to debut next year.

Meanwhile, NVIDIA DRIVE Atlan, NVIDIA’s next-generation automotive system-on-a-chip, and a true data center on wheels, “will be yet another giant leap,” Huang announced.

Atlan will deliver more than 1,000 trillion operations per second, or TOPS, and targets 2025 models.

“Atlan will be a technical marvel – fusing all of NVIDIA’s technologies in AI, auto, robotics, safety, and BlueField secure data centers,” Huang said.

Huang also announced the eighth-generation NVIDIA Hyperion car platform, including reference sensors, AV and central computers, 3D ground-truth data recorders, networking, and all of the essential software.

He added that DRIVE Sim will be available to the community this summer.

Just as Omniverse can build a digital twin of the factories that produce cars, DRIVE Sim can be used to create a digital twin of autonomous vehicles to be used throughout AV development.

“The DRIVE digital twin in Omniverse is a virtual space that every engineer and every car in the fleet is connected to,” Huang said.

The ‘Instrument for Your Life’s Work’

Huang wrapped up with four points.

NVIDIA is now a three-chip company, offering GPUs, CPUs, and DPUs.

NVIDIA is a software platform company and is investing enormously in NVIDIA AI and NVIDIA Omniverse.

NVIDIA is an AI company with Megatron, Jarvis, Merlin, Maxine, Isaac, Metropolis, Clara, and DRIVE, and pre-trained models you can customize with TAO.

NVIDIA is expanding AI with DGX for researchers, HGX for cloud, EGX for enterprise and 5G edge, and AGX for robotics.

“Mostly,” Huang said, “NVIDIA is the instrument for your life’s work.”