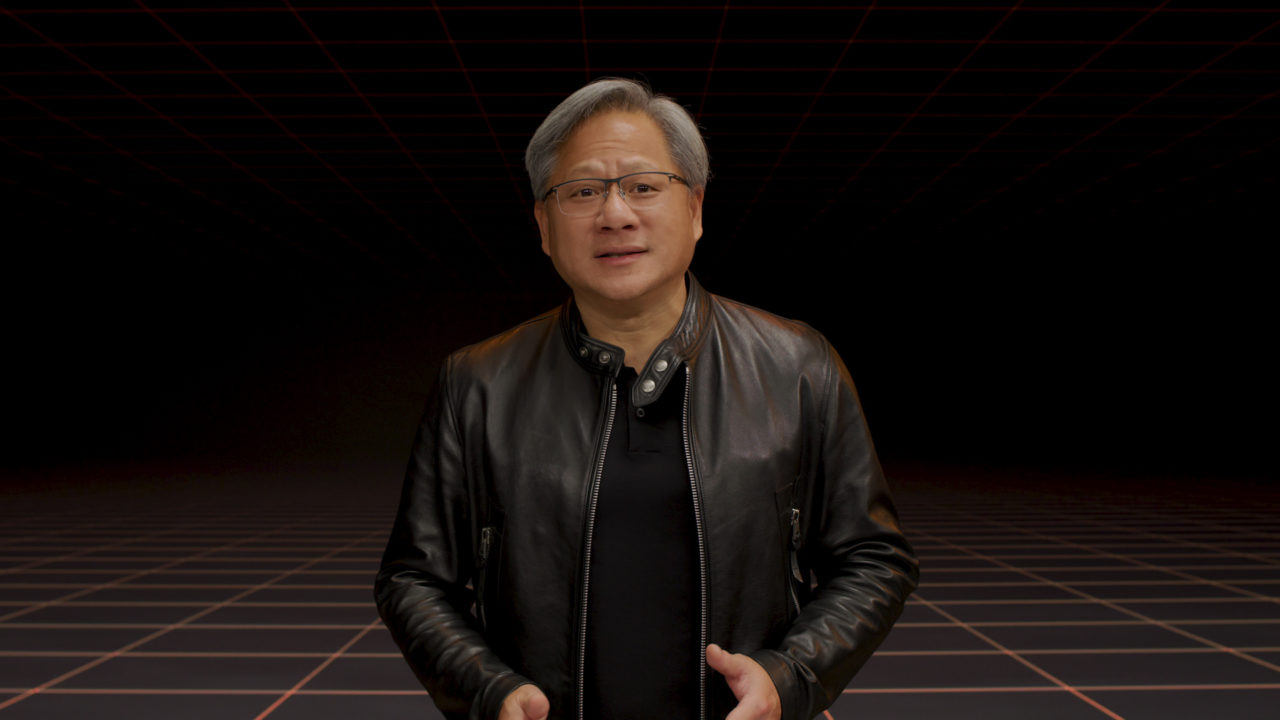

Bringing simulation of real and virtual worlds to everything from self-driving vehicles to avatars to robotics to modeling the planet’s climate, NVIDIA founder and CEO Jensen Huang Tuesday introduced technologies to transform multitrillion-dollar industries.

Huang delivered a keynote at the company’s virtual GTC gathering where he unveiled NVIDIA Omniverse Avatar and NVIDIA Omniverse Replicator, among a host of announcements, demos and far-reaching initiatives.

Huang showed how NVIDIA Omniverse — the company’s virtual world simulation and collaboration platform for 3D workflows — brings NVIDIA’s technologies together.

He also demonstrated Project Tokkio for customer support and Project Maxine for video conferencing, both using Omniverse Avatar.

“A constant theme you’ll see — how Omniverse is used to simulate digital twins of warehouses, plants and factories, of physical and biological systems, the 5G edge, robots, self-driving cars, and even avatars,” Huang said.

Huang ended by announcing that NVIDIA will build a digital twin, called E-2, or Earth Two, to simulate and predict climate change.

‘Full Stack, Data Center Scale, and Open Platform’

Accelerated computing launched modern AI, and the waves it started are coming to science and the world’s industries, Huang said.

That foundation starts with three chips (the GPU, CPU and DPU) and a family of systems (DGX, HGX, EGX, RTX and AGX) spanning the cloud to the edge, he said.

NVIDIA has created 150 acceleration libraries serving 3 million developers, in fields ranging from graphics and AI to the sciences and robotics.

At GTC, NVIDIA is introducing 65 new and updated SDKs.

“NVIDIA accelerated computing is a full-stack, data-center-scale and open platform,” Huang said.

Huang introduced NVIDIA Quantum-2, “the most advanced networking platform ever built,” which, together with the BlueField-3 DPU, ushers in cloud-native supercomputing.

Quantum-2 offers the extreme performance, broad accessibility and strong security needed by cloud computing providers and supercomputing centers, he said.

Cybersecurity is a top issue for companies and nations, and Huang announced a three-pillar zero-trust framework to tackle the challenge.

First, BlueField DPUs isolate applications from infrastructure. Second, NVIDIA DOCA 1.2, the latest BlueField SDK, enables next-generation distributed firewalls. Third, NVIDIA Morpheus, which assumes the interloper is already inside, uses the “superpowers of accelerated computing and deep learning to detect intruder activities,” Huang said.

Omniverse Avatar and Omniverse Replicator

With Omniverse, “we now have the technology to create new 3D worlds or model our physical world,” Huang said.

To help developers create interactive characters with Omniverse that can see, speak, converse on a wide range of subjects and understand naturally spoken intent, NVIDIA announced Omniverse Avatar.

Huang showed how Project Maxine for Omniverse Avatar connects computer vision, Riva speech AI, and avatar animation and graphics into a real-time conversational AI robot — the Toy Jensen Omniverse Avatar.

He also showed a demo of Project Tokkio, a customer-service avatar in a restaurant kiosk, able to see, converse with and understand two customers.

Huang also showed Project Maxine’s ability to add state-of-the-art video and audio features to virtual collaboration and content creation applications.

In one demo, a woman speaks English on a video call from a noisy cafe, yet she is heard clearly, with the background noise removed. As she speaks, her words are transcribed and translated in real time into French, German and Spanish. And, thanks to Omniverse, the translations are spoken by an avatar that can engage in conversation in her own voice and intonation.

To help developers create the huge amounts of data needed to train AI, NVIDIA announced Omniverse Replicator, a synthetic-data-generation engine for training deep neural networks.

NVIDIA has developed two replicators: one for general robotics, Omniverse Replicator for Isaac Sim, and another for autonomous vehicles, Omniverse Replicator for DRIVE Sim.

Since its launch late last year, Omniverse has been downloaded 70,000 times by designers at 500 companies.

Omniverse Enterprise is now available starting at $9,000 a year.

AI Models and Systems

Huang introduced NVIDIA NeMo Megatron for training large language models. Such models “will be the biggest mainstream HPC application ever,” he said.

Graphs, a key data structure in modern data science, can now be projected into deep neural network frameworks with Deep Graph Library, or DGL, a new Python package.
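
To illustrate what feeding a graph into a neural network involves, here is a minimal pure-Python sketch of one graph-convolution (message-passing) step. It does not use DGL's API: the function name `graph_conv`, the mean-aggregation rule and the toy inputs are hypothetical choices made for illustration. Each node averages its own features with those of its incoming neighbors, then passes the result through a small linear layer with a ReLU.

```python
from typing import Dict, List, Tuple


def graph_conv(edges: List[Tuple[int, int]],
               features: Dict[int, List[float]],
               weight: List[List[float]]) -> Dict[int, List[float]]:
    """One mean-aggregation message-passing step (hypothetical, not DGL's API)."""
    # Build the list of incoming neighbors for every node from (src, dst) edges.
    neighbors: Dict[int, List[int]] = {node: [] for node in features}
    for src, dst in edges:
        neighbors[dst].append(src)

    out: Dict[int, List[float]] = {}
    for node, feat in features.items():
        # The node's own vector plus the vectors of its incoming neighbors.
        group = [feat] + [features[src] for src in neighbors[node]]
        # Element-wise mean over the group.
        agg = [sum(vals) / len(group) for vals in zip(*group)]
        # Linear layer (out_j = sum_i agg_i * weight[i][j]) followed by ReLU.
        out[node] = [max(0.0, sum(a * row[j] for a, row in zip(agg, weight)))
                     for j in range(len(weight[0]))]
    return out


# Toy usage: a three-node cycle 0 -> 1 -> 2 -> 0 with an identity weight matrix.
edges = [(0, 1), (1, 2), (2, 0)]
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
print(graph_conv(edges, features, [[1.0, 0.0], [0.0, 1.0]]))
```

Libraries such as DGL perform this kind of aggregation as batched tensor operations on the GPU, with learned weights, rather than with Python loops.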

NVIDIA Modulus, introduced Tuesday, builds and trains physics-informed machine learning models that can learn and obey the laws of physics.
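
A physics-informed model is typically trained on a loss that combines a data-fit term with a penalty on how badly the model violates a governing equation. The minimal sketch below assumes a hypothetical exponential-decay law du/dt = -k·u; it is not Modulus's API. A plain Python function stands in for the neural network, and a central finite difference stands in for the automatic differentiation a real framework would use.

```python
import math
from typing import Callable, List, Tuple


def physics_informed_loss(u: Callable[[float], float],
                          data: List[Tuple[float, float]],
                          collocation: List[float],
                          k: float,
                          h: float = 1e-4,
                          physics_weight: float = 1.0) -> float:
    """Data MSE plus the mean squared residual of du/dt + k*u = 0 (hypothetical law)."""
    # Data term: how far the model is from the observed (t, u) pairs.
    data_term = sum((u(t) - y) ** 2 for t, y in data) / len(data)

    def residual(t: float) -> float:
        # du/dt estimated with a central finite difference.
        dudt = (u(t + h) - u(t - h)) / (2 * h)
        return dudt + k * u(t)

    # Physics term: how badly the model breaks the equation at collocation points.
    physics_term = sum(residual(t) ** 2 for t in collocation) / len(collocation)
    return data_term + physics_weight * physics_term


# Both candidates match the single observation u(0) = 1, but only the exact
# solution exp(-2t) obeys du/dt = -2u, so it gets a far lower loss.
data = [(0.0, 1.0)]
points = [0.1 * i for i in range(1, 10)]
print(physics_informed_loss(lambda t: math.exp(-2 * t), data, points, k=2.0))
print(physics_informed_loss(lambda t: 1 - 2 * t, data, points, k=2.0))
```

The physics term lets the model learn from the equation itself where observed data is sparse, which is the sense in which such models "obey the laws of physics."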

An upgrade to NVIDIA Triton Inference Server, which handles all workloads, adds inference for forest models and multi-GPU, multi-node inference for large language models.

Huang also introduced three new libraries:

- cuOpt – for the logistics industry.

- cuQuantum – to accelerate quantum computing research.

- cuNumeric – to accelerate NumPy for scientists, data scientists and machine learning and AI researchers in the Python community.

To help deliver services based on NVIDIA’s AI technologies to the edge, Huang announced NVIDIA LaunchPad.

NVIDIA is partnering with data center powerhouse Equinix to pre-install and integrate NVIDIA AI into data centers worldwide.

Robotics

NVIDIA’s Isaac ecosystem now has more than 700 companies and partners, a number that has grown fivefold over the past four years.

Huang announced that the NVIDIA Isaac robotics platform can now be easily integrated into the Robot Operating System, or ROS, a widely used set of software libraries and tools for robot applications.

Isaac Sim, built on Omniverse, is the most realistic robotics simulator ever created, Huang explained.

“The goal is for the robot to not know whether it is inside a simulation or the real world,” Huang said.

To aid this process, Omniverse Replicator for Isaac Sim can generate synthetic data to train robots.

Replicator simulates the sensors, generates automatically labeled data and, with a domain randomization engine, creates rich and diverse training data sets, Huang explained.
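
Automatic labeling works because the generator itself places every object, so the ground truth is known by construction; domain randomization varies the scene so a network cannot overfit to one look. The sketch below illustrates both ideas in plain Python. It is not Replicator's API: the `Sample` fields, texture and class lists, and parameter ranges are hypothetical, and the actual rendering and sensor simulation are omitted.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

TEXTURES = ["wood", "metal", "concrete", "fabric"]  # hypothetical asset list
CLASSES = ["pallet", "forklift", "person"]           # hypothetical object classes


@dataclass
class Sample:
    light_intensity: float         # randomized lighting
    texture: str                   # randomized surface texture
    box: Tuple[int, int, int, int]  # ground-truth (x, y, w, h), known by construction
    label: str                     # ground-truth class, known by construction


def randomized_dataset(n: int, width: int = 640, height: int = 480,
                       seed: int = 0) -> List[Sample]:
    """Sample n randomized scene descriptions together with their labels."""
    rng = random.Random(seed)  # seeded so the data set is reproducible
    samples = []
    for _ in range(n):
        # Random object size and position, kept fully inside the image.
        w = rng.randint(20, width // 2)
        h = rng.randint(20, height // 2)
        x = rng.randint(0, width - w)
        y = rng.randint(0, height - h)
        samples.append(Sample(
            light_intensity=rng.uniform(0.2, 2.0),
            texture=rng.choice(TEXTURES),
            box=(x, y, w, h),
            label=rng.choice(CLASSES),
        ))
    return samples


for s in randomized_dataset(3):
    print(s)
```

In a real pipeline each sampled description would drive a renderer and simulated sensors, and the stored box and label would be written out alongside the rendered image.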

Autonomous Vehicles

Everything that moves will be autonomous, either fully or mostly, Huang said. “By 2024, the vast majority of new EVs will have substantial AV capability,” he added.

NVIDIA DRIVE is NVIDIA’s full-stack and open platform for autonomous vehicles, and Hyperion 8 is NVIDIA’s latest complete hardware and software architecture.

Its sensor suite, which includes 12 cameras, nine radars, 12 ultrasonics and one front-facing lidar, is processed by two NVIDIA Orin SoCs.

Huang detailed several new technologies built into Hyperion, including Omniverse Replicator for DRIVE Sim, a synthetic data generator for autonomous vehicles built on Omniverse.

NVIDIA is now running Hyperion 8 sensors, 4D perception, deep-learning-based multisensor fusion, feature tracking and a new planning engine.

The inside of the car will be revolutionized, too: NVIDIA Maxine technology will reimagine how we interact with our cars.

“With Maxine, your car will become a concierge,” Huang said.

Earth Two

Huang wrapped up by announcing NVIDIA will build Earth Two, or E-2, to simulate and predict climate change.

“All the technologies we’ve invented up to this moment are needed to make Earth Two possible,” Huang said.